Partoo Vafaeikia

Minimizing the Effect of Noise and Limited Dataset Size in Image Classification Using Depth Estimation as an Auxiliary Task with Deep Multitask Learning

Aug 22, 2022

Abstract: Generalizability is the ultimate goal of Machine Learning (ML) image classifiers, for which noise and limited dataset size are among the major concerns. We tackle these challenges by using the framework of deep Multitask Learning (dMTL) and incorporating image depth estimation as an auxiliary task. On a customized, depth-augmented derivation of the MNIST dataset, we show that a) multitask loss functions are the most effective approach to implementing dMTL, b) limited dataset size primarily contributes to classification inaccuracy, and c) depth estimation is mostly impacted by noise. To further validate the results, we manually labeled the NYU Depth V2 dataset for scene classification tasks. As a contribution to the field, we have made the data publicly available in Python-native format as an open-source dataset and provided the scene labels. Our experiments on MNIST and NYU Depth V2 show that dMTL improves the generalizability of the classifiers when the dataset is noisy and the number of examples is limited.

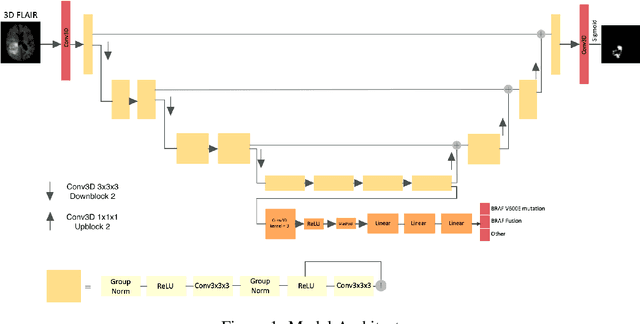

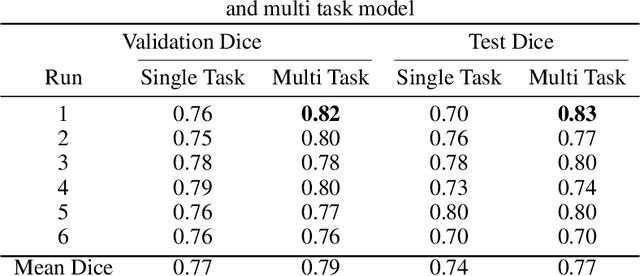

Improving the Segmentation of Pediatric Low-Grade Gliomas through Multitask Learning

Nov 29, 2021

Abstract: Brain tumor segmentation is a critical task for tumor volumetric analyses and AI algorithms. However, it is a time-consuming process and requires neuroradiology expertise. While there has been extensive research focused on optimizing brain tumor segmentation in the adult population, studies on AI-guided pediatric tumor segmentation are scarce. Furthermore, MRI signal characteristics of pediatric and adult brain tumors differ, necessitating the development of segmentation algorithms specifically designed for pediatric brain tumors. We developed a segmentation model trained on magnetic resonance imaging (MRI) of pediatric patients with low-grade gliomas (pLGGs) from The Hospital for Sick Children (Toronto, Ontario, Canada). The proposed model utilizes deep Multitask Learning (dMTL) by adding a classifier of the tumor's genetic alteration as an auxiliary task to the main network, ultimately improving the accuracy of the segmentation results.

A Brief Review of Deep Multi-task Learning and Auxiliary Task Learning

Jul 02, 2020

Abstract: Multi-task learning (MTL) optimizes several learning tasks simultaneously and leverages their shared information to improve the generalization and prediction of the model for each task. Auxiliary tasks can be added to the main task to boost its performance. In this paper, we provide a brief review of recent deep multi-task learning (dMTL) approaches, followed by methods for selecting useful auxiliary tasks that can be used in dMTL to improve the performance of the model on the main task.
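
As a rough illustration of how an auxiliary task can be optimized together with a main task, the minimal sketch below combines the two task losses into a single weighted sum. This is one common formulation and is not necessarily the approach reviewed in the paper; the function names, the choice of cross-entropy and mean squared error, and the `aux_weight` value are all assumptions made for the example.

```python
import math


def cross_entropy(probs, label):
    """Main-task loss (assumed): negative log-probability of the true class."""
    return -math.log(probs[label])


def mean_squared_error(pred, target):
    """Auxiliary-task loss (assumed): mean squared error over paired values."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def multitask_loss(main_loss, aux_loss, aux_weight=0.5):
    """Weighted sum of the main and auxiliary losses.

    aux_weight is a hypothetical hyperparameter; setting it to 0
    recovers single-task training on the main task alone.
    """
    return main_loss + aux_weight * aux_loss


# Toy values: a 3-class prediction and a 2-value regression target.
main = cross_entropy([0.1, 0.7, 0.2], label=1)
aux = mean_squared_error([0.5, 1.0], [0.0, 1.0])
total = multitask_loss(main, aux, aux_weight=0.5)
```

In a real network both losses would be computed from outputs that share layers, so minimizing `total` lets the auxiliary signal shape the shared representation.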
