Paolo Baracca

Distributed MIMO for 6G sub-Networks in the Unlicensed Spectrum

Oct 23, 2023

Abstract:In this paper, we consider the sixth generation (6G) sub-networks, where hyper reliable low latency communications (HRLLC) requirements are expected to be met. We focus on a scenario where multiple sub-networks are active in the service area and assess the feasibility of using the 6 GHz unlicensed spectrum to operate such deployment, evaluating the impact of listen before talk (LBT). Then, we explore the benefits of using distributed multiple input multiple output (MIMO), where the available antennas in every sub-network are distributed over a number of access points (APs). Specifically, we compare different configurations of distributed MIMO with respect to centralized MIMO, where a single AP with all antennas is located at the center of every sub-network.

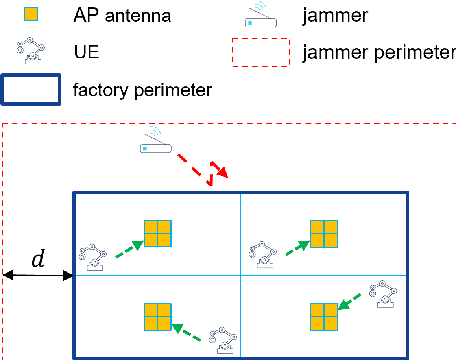

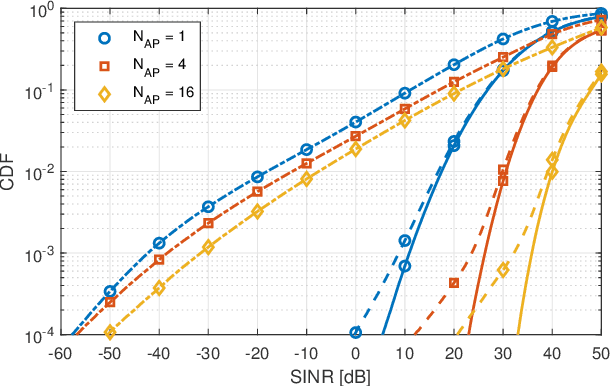

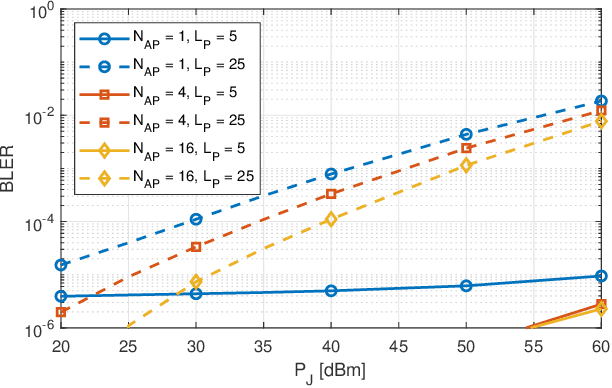

Jamming Resilient Indoor Factory Deployments: Design and Performance Evaluation

Feb 02, 2022

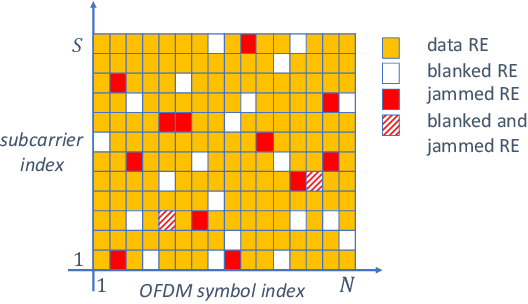

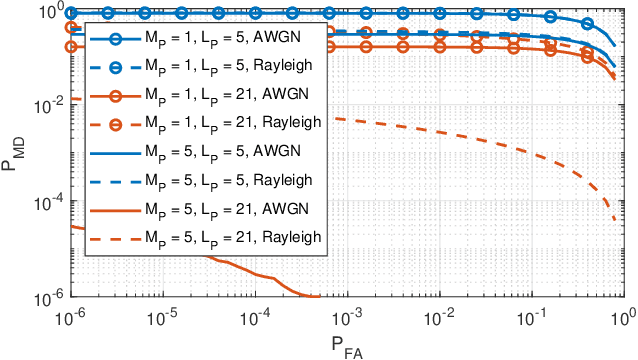

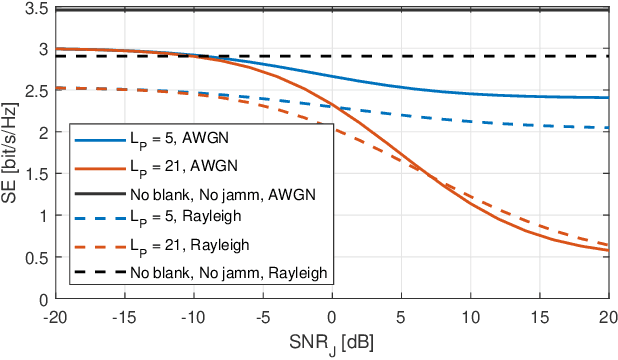

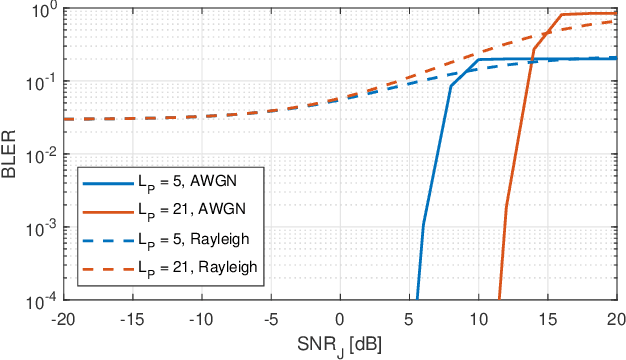

Abstract:In the framework of 5G-and-beyond Industry 4.0, jamming attacks for denial of service are a rising threat which can severely compromise the system performance. Therefore, in this paper we deal with the problem of jamming detection and mitigation in indoor factory deployments. We design two jamming detectors based on pseudo-random blanking of subcarriers with orthogonal frequency division multiplexing and consider jamming mitigation with frequency hopping and random scheduling of the user equipments. We then evaluate the performance of the system in terms of achievable BLER with ultra-reliable low-latency communications traffic and jamming missed detection probability. Simulations are performed considering a 3rd Generation Partnership Project spatial channel model for the factory floor with a jammer stationed outside the plant trying to disrupt the communication inside the factory. Numerical results show that jamming resiliency increases when using a distributed access point deployment and exploiting channel correlation among antennas for jamming detection, while frequency hopping is helpful in jamming mitigation only for strict BLER requirements.

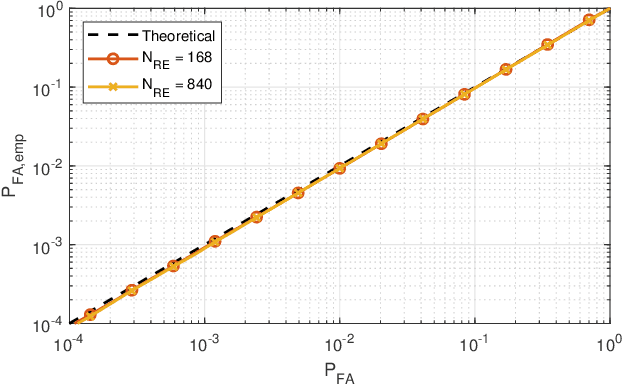

Jamming Detection With Subcarrier Blanking for 5G and Beyond in Industry 4.0 Scenarios

Jun 15, 2021

Abstract:Security attacks at the physical layer, in the form of radio jamming for denial of service, are an increasing threat in the Industry 4.0 scenarios. In this paper, we consider the problem of jamming detection in 5G-and-beyond communication systems and propose a defense mechanism based on pseudo-random blanking of subcarriers with orthogonal frequency division multiplexing (OFDM). We then design a detector by applying the generalized likelihood ratio test (GLRT) on those subcarriers. We finally evaluate the performance of the proposed technique against a smart jammer, which is pursuing one of the following objectives: maximize stealthiness, minimize spectral efficiency (SE) with mobile broadband (MBB) type of traffic, and maximize block error rate (BLER) with ultra-reliable low-latency communications (URLLC). Numerical results show that a smart jammer a) needs to compromise between missed detection (MD) probability and SE reduction with MBB and b) can achieve low detectability and high system performance degradation with URLLC only if it has sufficiently high power.
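
As a rough illustration of the blanking idea in this abstract, the sketch below draws a pseudo-random set of blanked subcarriers from a seed and applies a plain energy threshold to what is received on them. It is not the paper's GLRT detector: the function names, the assumption that transmitter and receiver share the seed, and the threshold factor are all hypothetical choices made for illustration.

```python
import random

def blanked_subcarriers(seed, n_subcarriers, n_blanked):
    """Pick a pseudo-random blanking pattern.

    Assumption: transmitter and receiver share `seed`, so both derive the
    same pattern while a jammer cannot predict it.
    """
    rng = random.Random(seed)
    return sorted(rng.sample(range(n_subcarriers), n_blanked))

def jamming_detected(rx_symbols, blanked, noise_power, threshold_factor=3.0):
    """Simple energy detector on the blanked subcarriers.

    This is a stand-in for the GLRT mentioned in the abstract: with no
    legitimate signal on the blanked subcarriers, average energy well above
    the noise power suggests a jammer. `threshold_factor` is an arbitrary
    illustrative value.
    """
    energy = sum(abs(rx_symbols[k]) ** 2 for k in blanked) / len(blanked)
    return energy > threshold_factor * noise_power
```

Because the pattern depends only on the seed, the receiver can recompute which subcarriers to inspect in every OFDM symbol without extra signalling. A practical design would also have to set the threshold from a target false-alarm probability, which this sketch does not attempt.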

Interference Distribution Prediction for Link Adaptation in Ultra-Reliable Low-Latency Communications

Jul 01, 2020

Abstract:The strict latency and reliability requirements of ultra-reliable low-latency communications (URLLC) use cases are among the main drivers in fifth generation (5G) network design. Link adaptation (LA) is considered to be one of the bottlenecks to realize URLLC. In this paper, we focus on predicting the signal to interference plus noise ratio at the user to enhance the LA. Motivated by the fact that most of the URLLC use cases with most extreme latency and reliability requirements are characterized by semi-deterministic traffic, we propose to exploit the time correlation of the interference to compute useful statistics needed to predict the interference power in the next transmission. This prediction is exploited in the LA context to maximize the spectral efficiency while guaranteeing reliability at an arbitrary level. Numerical results are compared with state of the art interference prediction techniques for LA. We show that exploiting time correlation of the interference is an important enabler of URLLC.
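
To make the link between interference statistics and reliability-constrained link adaptation concrete, here is a minimal sketch. It takes an empirical quantile of past interference powers as a conservative estimate and maps the resulting SINR to a Shannon-bound spectral efficiency. It deliberately ignores the time correlation that the paper exploits, so it should be read as a simple baseline. The function names and the use of the Shannon bound in place of a real modulation and coding table are assumptions for illustration.

```python
import math

def conservative_interference(history, reliability):
    """Empirical `reliability`-quantile of past interference powers (linear scale)."""
    ordered = sorted(history)
    # Index of the smallest sample that covers a `reliability` share of the history.
    idx = math.ceil(reliability * len(ordered)) - 1
    idx = max(0, min(idx, len(ordered) - 1))
    return ordered[idx]

def select_spectral_efficiency(signal_power, noise_power, history, reliability):
    """Spectral efficiency (bit/s/Hz) supported at the conservative SINR.

    Assumption: the Shannon bound stands in for a real MCS lookup table.
    """
    interference = conservative_interference(history, reliability)
    sinr = signal_power / (interference + noise_power)
    return math.log2(1.0 + sinr)
```

A higher `reliability` pushes the estimate toward the worst interference seen so far, which lowers the selected spectral efficiency. That is the trade-off the abstract describes.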
