Pankaj Rajak

Generative Models for 3D Point Clouds

Feb 26, 2023

Abstract: Point clouds are rich geometric data structures whose three-dimensional structure offers an excellent domain for studying representation learning and generative modeling in 3D space. In this work, we aim to improve the performance of point cloud latent-space generative models by experimenting with transformer encoders, latent-space flow models, and autoregressive decoders. We analyze and compare both the generation and reconstruction performance of these models on various object types.

Meta-Regularization by Enforcing Mutual-Exclusiveness

Jan 24, 2021

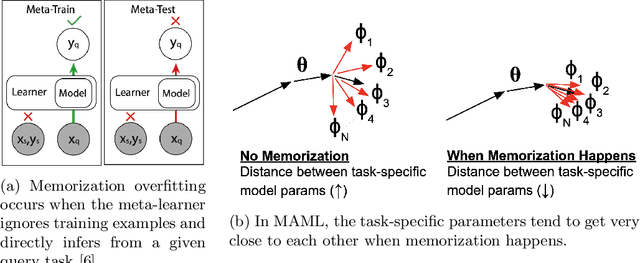

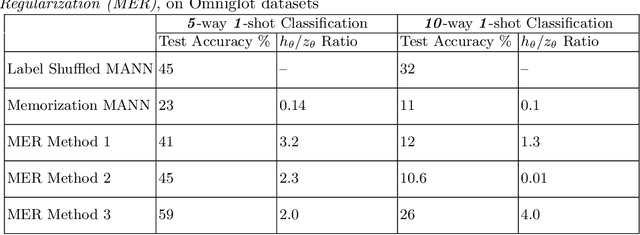

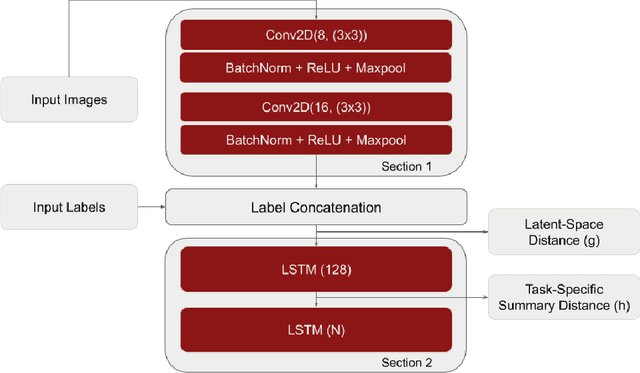

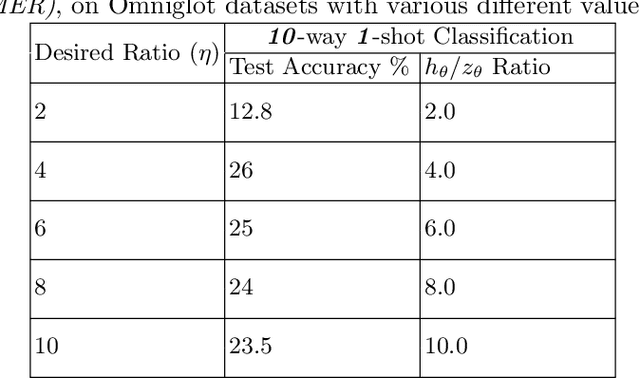

Abstract: Meta-learning models have two objectives. First, they need to be able to make predictions over a range of task distributions while utilizing only a small amount of training data. Second, they need to adapt to new, unseen tasks at meta-test time, again using only a small amount of training data from each task. It is the second objective where meta-learning models fail for non-mutually exclusive tasks, due to task overfitting. Given that guaranteeing mutually exclusive tasks is often difficult, there is a significant need for regularization methods that can help reduce the impact of task memorization in meta-learning. For example, in N-way, K-shot classification problems, tasks become non-mutually exclusive when the labels associated with each task are fixed. Under this design, the model will simply memorize the class labels of all the training tasks and thus fail to recognize a new task (class) at meta-test time. A directly observable consequence of this memorization is that the meta-learning model ignores the task-specific training data in favor of classifying directly from the test-data input. In our work, we propose a regularization technique for meta-learning models that gives the model designer more control over the information flow during meta-training. Our method consists of a regularization function that is constructed by maximizing, during meta-training, the distance between task-summary statistics in the case of black-box models and between task-specific network parameters in the case of optimization-based models. Our proposed regularization function shows an accuracy boost of $\sim 36\%$ on the Omniglot dataset for 5-way, 1-shot classification using the black-box method and for 20-way, 1-shot classification using the optimization-based method.
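The abstract describes the regularizer only at a high level, so the following is a minimal sketch of one way such a term could look, not the authors' implementation. It assumes each task is summarized by a fixed-length vector (a task-summary statistic for a black-box model, or flattened task-specific parameters for an optimization-based one), and it assumes Euclidean distance, a mean over task pairs, and a weight of 0.1. The names `mutual_exclusiveness_penalty` and `regularized_loss` are hypothetical.

```python
import math
from itertools import combinations


def mutual_exclusiveness_penalty(task_summaries):
    """Negative mean pairwise Euclidean distance between task summaries.

    Minimizing this value pushes the per-task summaries apart.
    The distance metric and the averaging are assumptions of this sketch.
    """
    pairs = list(combinations(task_summaries, 2))
    if not pairs:
        # A single task (or none) has no pairwise distance to maximize.
        return 0.0
    total = sum(math.dist(a, b) for a, b in pairs)
    return -total / len(pairs)


def regularized_loss(task_loss, task_summaries, weight=0.1):
    """Meta-training loss plus the weighted penalty (weight is an assumed value)."""
    return task_loss + weight * mutual_exclusiveness_penalty(task_summaries)


# Hypothetical 2-D summaries for three tasks in one meta-batch.
collapsed = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]  # model ignores task data
spread = [[0.0, 0.0], [3.0, 4.0], [6.0, 8.0]]     # summaries differ per task

print(regularized_loss(1.0, collapsed))
print(regularized_loss(1.0, spread))
```

With the same task loss, the batch whose summaries have collapsed to a single point receives the higher total loss, which is the pressure against task memorization that the abstract describes. In a real training loop the summaries would be differentiable tensors rather than Python lists.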

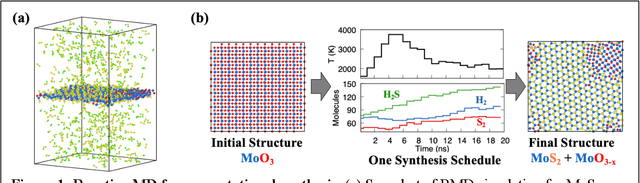

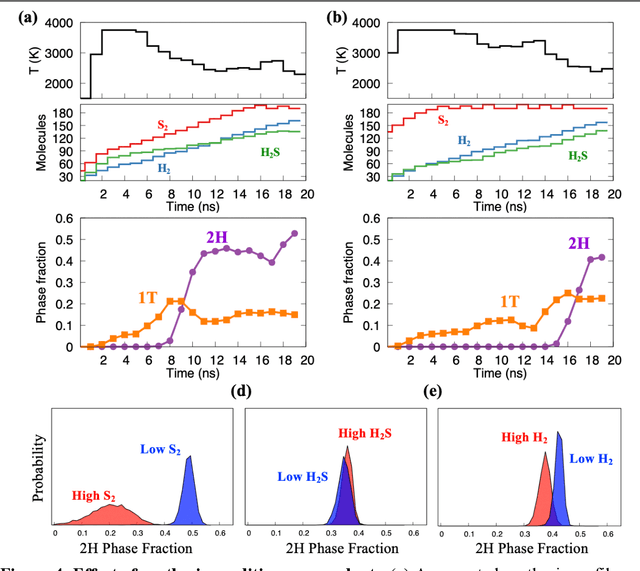

Predictive Synthesis of Quantum Materials by Probabilistic Reinforcement Learning

Sep 14, 2020

Abstract: Predictive materials synthesis is the primary bottleneck in realizing new functional and quantum materials. Strategies for synthesis of promising materials are currently identified by time-consuming trial-and-error approaches, and there are no known predictive schemes to design synthesis parameters for new materials. We use reinforcement learning to predict optimal synthesis schedules, i.e., a time-sequence of reaction conditions like temperatures and reactant concentrations, for the synthesis of a prototypical quantum material, semiconducting monolayer MoS$_{2}$, using chemical vapor deposition (CVD). The predictive reinforcement learning agent is coupled to a deep generative model to capture the crystallinity and phase composition of synthesized MoS$_{2}$ during CVD synthesis as a function of time-dependent synthesis conditions. This model, trained on 10000 computational synthesis simulations, successfully learned threshold temperatures and chemical potentials for the onset of chemical reactions and predicted new synthesis schedules for producing well-sulfidized, crystalline, and phase-pure MoS$_{2}$, which were validated by computational synthesis simulations. The model can be extended to predict profiles for synthesis of complex structures, including multi-phase heterostructures, and can also predict long-time behavior of reacting systems, far beyond the domain of the MD simulations used to train the model, making these predictions directly relevant to experimental synthesis.
