Panfeng Guo

Backdoor Attacks on Federated Learning with Lottery Ticket Hypothesis

Sep 22, 2021

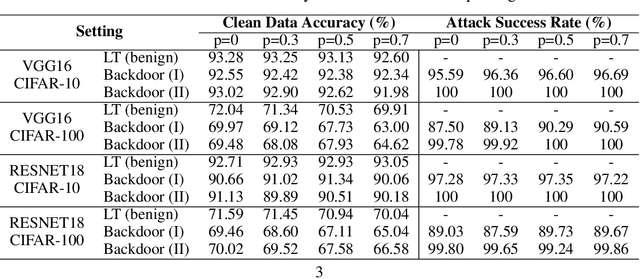

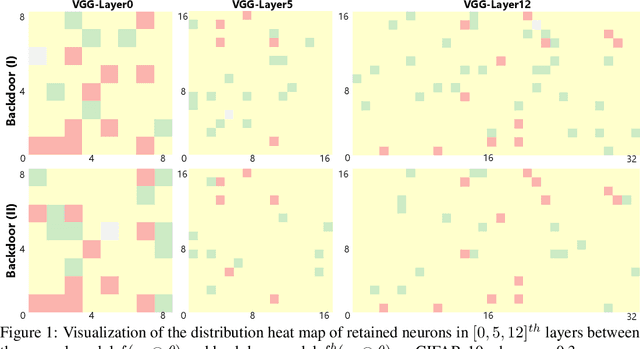

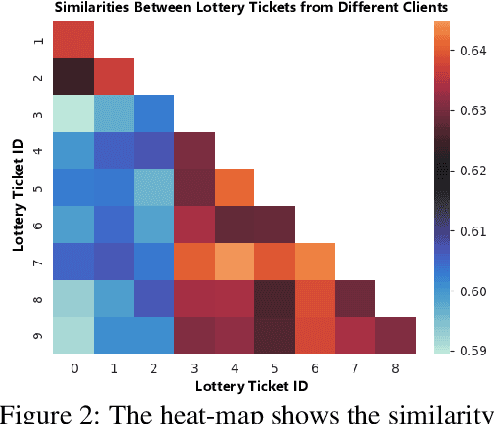

Abstract: Edge devices in federated learning usually have far more limited computation and communication resources than servers in a data center. Recently, advanced model compression methods, such as the Lottery Ticket Hypothesis, have been applied to federated learning to reduce model size and communication cost. However, backdoor attacks can compromise such implementations in the federated learning scenario. A malicious edge device trains its client model on poisoned private data and uploads the parameters to the central server, embedding a backdoor in the shared global model once the server unwittingly aggregates them. During the inference phase, the backdoored model classifies samples carrying a certain trigger as one target category, while showing only a slight decrease in inference accuracy on clean samples. In this work, we empirically demonstrate that Lottery Ticket models are as vulnerable to backdoor attacks as the original dense models, and that backdoor attacks can influence the structure of the extracted tickets. Based on the similarities between tickets, we provide a feasible defense for federated learning against backdoor attacks on various datasets.
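To make the idea of comparing tickets concrete, here is a minimal sketch of one way such a similarity check could look. It is not the paper's method. It assumes each client's ticket is a magnitude-pruning mask over a flattened weight list, that similarity is measured with the Jaccard index, and that a client is flagged when its mean similarity to the other clients falls below a fixed threshold. The names `magnitude_mask`, `jaccard`, and `flag_outliers`, along with the `keep_fraction` and `threshold` parameters, are hypothetical.

```python
def magnitude_mask(weights, keep_fraction):
    """Return the indices of the largest-magnitude weights (an assumed 'ticket')."""
    k = round(len(weights) * keep_fraction)  # number of weights to keep
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    return frozenset(order[:k])


def jaccard(mask_a, mask_b):
    """Jaccard index of two masks: |intersection| / |union|."""
    union = mask_a | mask_b
    if not union:
        return 1.0  # two empty masks are treated as identical
    return len(mask_a & mask_b) / len(union)


def flag_outliers(masks, threshold):
    """Flag clients whose mean similarity to all other clients is below threshold.

    Assumes at least two clients. The threshold is an illustrative choice,
    not a value taken from the paper.
    """
    flagged = []
    for i, mask in enumerate(masks):
        sims = [jaccard(mask, other) for j, other in enumerate(masks) if j != i]
        if sum(sims) / len(sims) < threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Toy data: four clients share one weight pattern, a fifth has it reversed.
    base = [float(v) for v in range(1, 101)]
    clients = [base] * 4 + [base[::-1]]
    masks = [magnitude_mask(w, keep_fraction=0.5) for w in clients]
    print(flag_outliers(masks, threshold=0.5))
```

In this toy setup the fifth client keeps a disjoint set of weights, so its mask shares nothing with the others and it is the only one flagged. A real defense would need to handle per-layer masks and realistic noise between benign clients.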
