P. Zhang

REWAFL: Residual Energy and Wireless Aware Participant Selection for Efficient Federated Learning over Mobile Devices

Sep 24, 2023

Abstract: Participant selection (PS) helps to accelerate federated learning (FL) convergence, which is essential for the practical deployment of FL over mobile devices. However, most existing PS approaches focus on improving training accuracy and efficiency while overlooking the residual energy of mobile devices, which fundamentally determines whether the selected devices can participate. Meanwhile, the impacts of mobile devices' heterogeneous wireless transmission rates on PS and FL training efficiency are largely ignored. Moreover, PS causes the staleness issue. Prior research exploits isolated functions to force long-neglected devices to participate, which is decoupled from original PS designs. In this paper, we propose a residual energy and wireless aware PS design for efficient FL training over mobile devices (REWAFL). REWAFL introduces a novel PS utility function that jointly considers global FL training utilities and local energy utility, which integrates energy consumption and residual battery energy of candidate mobile devices. Under the proposed PS utility function framework, REWAFL further presents a residual energy and wireless aware local computing policy. Besides, REWAFL builds the staleness solution into its utility function and local computing policy. The experimental results show that REWAFL is effective in improving training accuracy and efficiency, while avoiding "flat battery" of mobile devices.

Energy-Aware Edge Association for Cluster-based Personalized Federated Learning

Feb 06, 2022

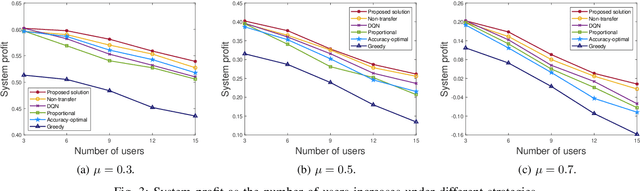

Abstract: Federated Learning (FL) over wireless networks enables data-conscious services by leveraging the ubiquitous intelligence at the network edge for privacy-preserving model training. With the proliferation of context-aware services, diversified personal preferences cause the conditional distributions of user data to disagree, which leads to poor inference performance. In this sense, clustered federated learning is proposed to group user devices with similar preferences and provide each cluster with a personalized model. This calls for innovative design in edge association that involves both user clustering and resource management optimization. We formulate an accuracy-cost trade-off optimization problem by jointly considering model accuracy, communication resource allocation and energy consumption. To comply with parameter encryption techniques in FL, we propose an iterative solution procedure which employs a deep reinforcement learning based approach at the cloud server for edge association. The reward function consists of the minimized energy consumption at each base station and the averaged model accuracy of all users. Under our proposed solution, multiple edge base stations are fully exploited to realize cost-efficient personalized federated learning without any prior knowledge of model parameters. Simulation results show that our proposed strategy outperforms existing strategies in achieving accurate learning at low energy cost.
