P. Bertok

Local Differential Privacy for Deep Learning

Aug 08, 2019

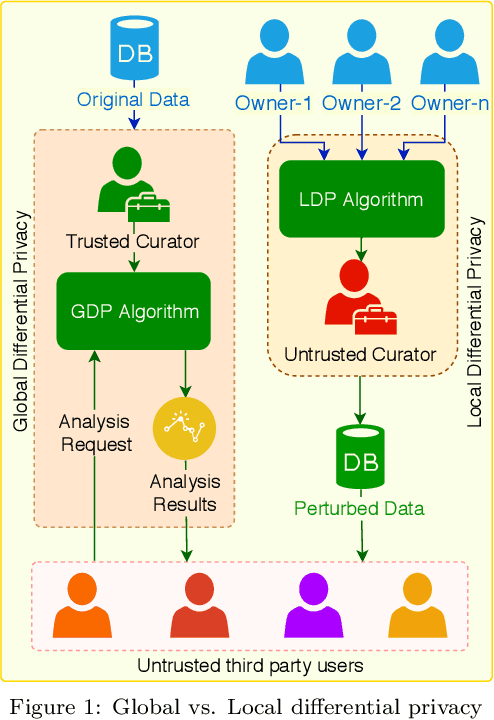

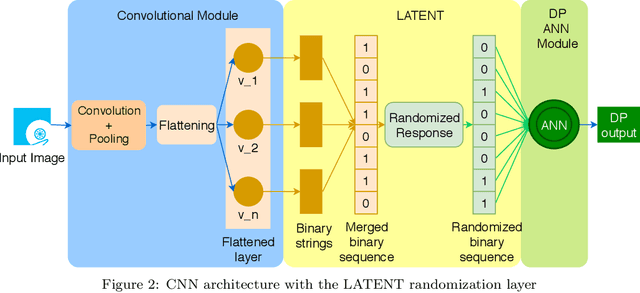

Abstract: Deep learning (DL) is a promising area of machine learning that is becoming popular due to its remarkable accuracy when trained on massive amounts of data. Often, these datasets are highly sensitive crowd-sourced data such as medical, financial, or image data, and DL models trained on them tend to leak private information. We propose a new local differentially private (LDP) algorithm, named LATENT, which redesigns the training process so that a data owner can add a randomization layer before data leave the owner's device and reach a potentially untrusted machine learning service. In this way, LATENT prevents privacy leaks from DL models, e.g., due to membership inference and model memorization attacks, while providing excellent accuracy. Because it does not require a trusted party, LATENT can be more practical for cloud-based machine learning services than existing differentially private approaches. Our experimental evaluation of LATENT on convolutional deep neural networks demonstrates excellent accuracy (e.g., 91\%-96\%) with high model quality even under very low privacy budgets (e.g., $\epsilon=0.5$), outperforming existing differentially private approaches for deep learning.
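To make the idea of an on-device randomization layer concrete, the following is a minimal sketch of classic per-bit randomized response applied to a binary vector before it leaves the data owner's device. It is not LATENT's actual mechanism, which the abstract does not specify; the function names `keep_probability` and `randomize_bits`, and the choice to split the budget evenly across bits, are assumptions made purely for illustration.

```python
import math
import random


def keep_probability(epsilon_per_bit):
    """Probability of reporting a bit truthfully under randomized response."""
    e = math.exp(epsilon_per_bit)
    return e / (1.0 + e)


def randomize_bits(bits, epsilon, rng=None):
    """Flip each bit independently so the whole vector satisfies epsilon-LDP.

    Assumption (illustrative only): the budget is split evenly across the
    bits, so each bit gets epsilon / len(bits) and sequential composition
    bounds the total privacy loss by epsilon.
    """
    if not bits:
        return []
    rng = rng or random.Random()
    p = keep_probability(epsilon / len(bits))
    # Keep the true bit with probability p, otherwise report its complement.
    return [b if rng.random() < p else 1 - b for b in bits]


if __name__ == "__main__":
    features = [1, 0, 1, 1, 0, 0, 1, 0]  # hypothetical binarized features
    noisy = randomize_bits(features, epsilon=0.5, rng=random.Random(0))
    print(noisy)  # only this randomized vector would be sent to the service
```

With an even split, a small total budget such as $\epsilon=0.5$ over many bits leaves each bit close to a fair coin flip. This is why practical LDP schemes put effort into how the budget is allocated across the encoded representation.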
