Osman Yagan

Cost-aware LLM-based Online Dataset Annotation

May 21, 2025

Abstract: Recent advances in large language models (LLMs) have enabled automated dataset labeling with minimal human supervision. While majority voting across multiple LLMs can improve label reliability by mitigating individual model biases, it incurs high computational costs due to repeated querying. In this work, we propose a novel online framework, Cost-aware Majority Voting (CaMVo), for efficient and accurate LLM-based dataset annotation. CaMVo adaptively selects a subset of LLMs for each data instance based on contextual embeddings, balancing confidence and cost without requiring pre-training or ground-truth labels. Leveraging a LinUCB-based selection mechanism and a Bayesian estimator over confidence scores, CaMVo estimates a lower bound on labeling accuracy for each LLM and aggregates responses through weighted majority voting. Our empirical evaluation on the MMLU and IMDB Movie Review datasets demonstrates that CaMVo achieves comparable or superior accuracy to full majority voting while significantly reducing labeling costs. This establishes CaMVo as a practical and robust solution for cost-efficient annotation in dynamic labeling environments.
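To make the aggregation step concrete, here is a minimal sketch of generic weighted majority voting. It is not the CaMVo implementation: the function name `weighted_majority_vote` and the example weights are hypothetical, and the abstract does not say how CaMVo turns its accuracy lower bounds into weights.

```python
from collections import defaultdict


def weighted_majority_vote(labels, weights):
    """Return the label with the largest total weight.

    labels  -- one predicted label per queried model
    weights -- one non-negative weight per model (hypothetical values;
               how CaMVo derives its weights is not given in the abstract)
    """
    if len(labels) != len(weights):
        raise ValueError("labels and weights must have the same length")
    if not labels:
        raise ValueError("at least one vote is required")

    totals = defaultdict(float)
    for label, weight in zip(labels, weights):
        totals[label] += weight

    # Ties resolve to the label that appeared first in the input.
    return max(totals, key=totals.get)


if __name__ == "__main__":
    # One confident model outvotes two less confident ones.
    print(weighted_majority_vote(["pos", "neg", "neg"], [0.9, 0.3, 0.4]))
```

With equal weights this reduces to plain majority voting, which is the baseline the abstract compares against.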

Bandits with Anytime Knapsacks

Jan 30, 2025

Abstract: We consider bandits with anytime knapsacks (BwAK), a novel version of the BwK problem where there is an anytime cost constraint instead of a total cost budget. This problem setting introduces additional complexities as it mandates adherence to the constraint throughout the decision-making process. We propose SUAK, an algorithm that utilizes upper confidence bounds to identify the optimal mixture of arms while maintaining a balance between exploration and exploitation. SUAK is an adaptive algorithm that strategically utilizes the available budget in each round in the decision-making process and skips a round when it is possible to violate the anytime cost constraint. In particular, SUAK slightly under-utilizes the available cost budget to reduce the need for skipping rounds. We show that SUAK attains the same problem-dependent regret upper bound of $O(K \log T)$ established in prior work under the simpler BwK framework. Finally, we provide simulations to verify the utility of SUAK in practical settings.
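For readers unfamiliar with upper confidence bounds, the sketch below shows the textbook UCB1 index for a single arm. It is not SUAK: the abstract does not give SUAK's confidence terms, its arm-mixture computation, or its skipping rule, and the function name `ucb1_index` is hypothetical.

```python
import math


def ucb1_index(mean_reward, pulls, round_number):
    """Textbook UCB1 index: empirical mean plus an exploration bonus.

    mean_reward  -- average reward observed for this arm so far
    pulls        -- number of times this arm has been played
    round_number -- current round t (t >= 1)
    """
    if pulls <= 0:
        # An unplayed arm gets an infinite index so it is tried first.
        return math.inf
    bonus = math.sqrt(2.0 * math.log(round_number) / pulls)
    return mean_reward + bonus


if __name__ == "__main__":
    # The bonus shrinks as an arm is played more often.
    print(ucb1_index(0.5, 5, 100) > ucb1_index(0.5, 50, 100))
```

The bonus term is what drives exploration: rarely played arms keep a high index until enough samples are collected.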

FedSPD: A Soft-clustering Approach for Personalized Decentralized Federated Learning

Oct 24, 2024

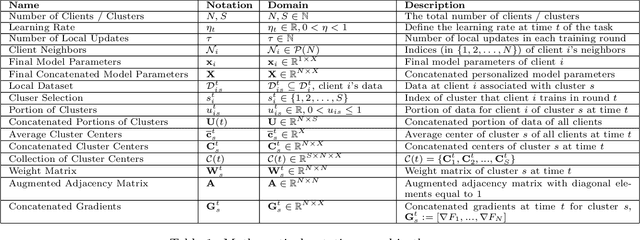

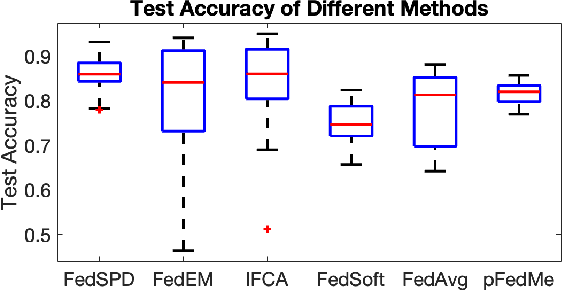

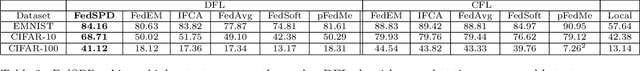

Abstract: Federated learning has recently gained popularity as a framework for distributed clients to collaboratively train a machine learning model using local data. While traditional federated learning relies on a central server for model aggregation, recent advancements adopt a decentralized framework, enabling direct model exchange between clients and eliminating the single point of failure. However, existing decentralized frameworks often assume all clients train a shared model. Personalizing each client's model can enhance performance, especially with heterogeneous client data distributions. We propose FedSPD, an efficient personalized federated learning algorithm for the decentralized setting, and show that it learns accurate models even in low-connectivity networks. To provide theoretical guarantees on convergence, we introduce a clustering-based framework that enables consensus on models for distinct data clusters while personalizing to unique mixtures of these clusters at different clients. This flexibility, allowing selective model updates based on data distribution, substantially reduces communication costs compared to prior work on personalized federated learning in decentralized settings. Experimental results on real-world datasets show that FedSPD outperforms multiple decentralized variants of personalized federated learning algorithms, especially in scenarios with low-connectivity networks.
