Orr Dunkelman

Fuzzy Commitments Offer Insufficient Protection to Biometric Templates Produced by Deep Learning

Dec 24, 2020

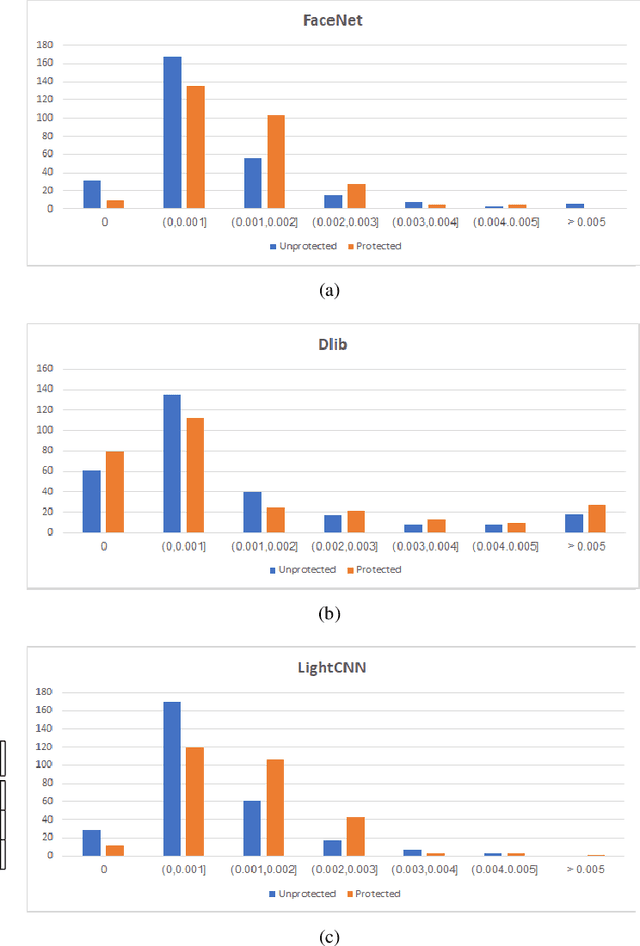

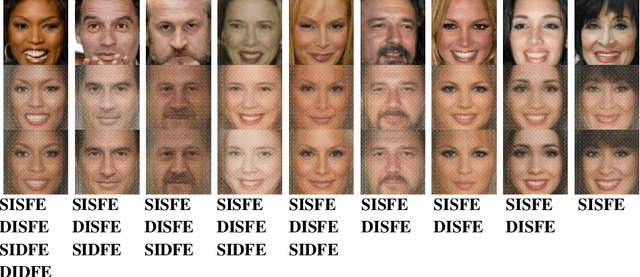

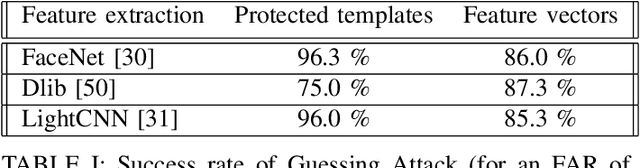

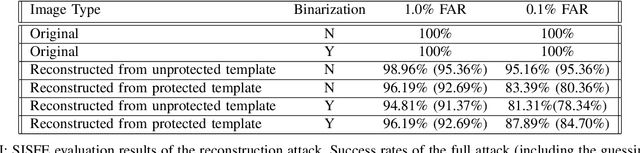

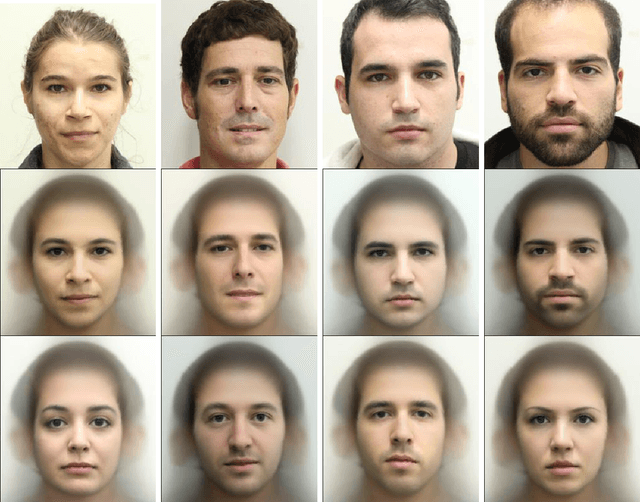

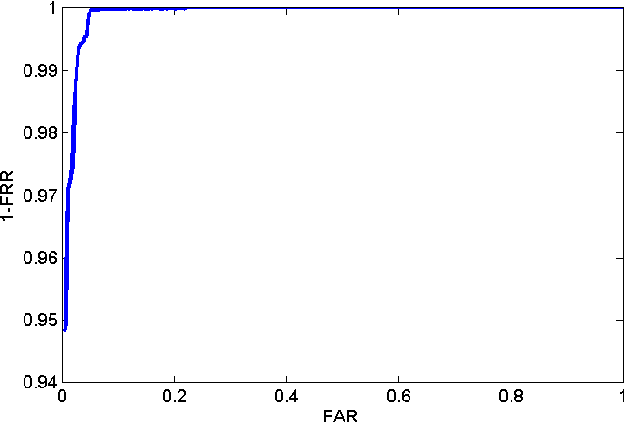

Abstract: In this work, we study the protection that fuzzy commitments offer when they are applied to facial images processed by state-of-the-art deep learning facial recognition systems. We show that while these systems achieve high accuracy, they produce templates with too little entropy. As a result, we present a reconstruction attack that takes a protected template and reconstructs a facial image. The reconstructed facial images greatly resemble the original ones. In the simplest attack scenario, more than 78% of these reconstructed templates succeed in unlocking an account (when the system is configured to a 0.1% false acceptance rate, FAR). Even in the "hardest" settings (in which we take a reconstructed image from one system and use it in a different system with a different feature extraction process), the reconstructed image offers success rates 50 to 120 times higher than the system's FAR.
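
For readers unfamiliar with the primitive, the following is a minimal sketch of a fuzzy commitment over binary templates. It is not the paper's setup: the repetition code, the template length, and all function names (`commit`, `unlock`, `encode`, `decode`) are assumptions chosen for illustration, and real deployments use much stronger error-correcting codes.

```python
import hashlib
import secrets

REP = 5  # hypothetical repetition factor; tolerates up to 2 bit flips per block


def encode(key_bits):
    # Toy repetition code: repeat every key bit REP times.
    return [b for b in key_bits for _ in range(REP)]


def decode(code_bits):
    # Majority vote inside each block of REP bits.
    return [int(sum(code_bits[i:i + REP]) > REP // 2)
            for i in range(0, len(code_bits), REP)]


def digest(bits):
    return hashlib.sha256(bytes(bits)).hexdigest()


def commit(template_bits):
    # Bind a fresh random key to the template (length must be a multiple of REP).
    key = [secrets.randbelow(2) for _ in range(len(template_bits) // REP)]
    codeword = encode(key)
    helper = [c ^ w for c, w in zip(codeword, template_bits)]
    # Only the key hash and the masked codeword are stored.
    return digest(key), helper


def unlock(commitment, probe_bits):
    key_hash, helper = commitment
    # XOR with the probe leaves the codeword plus the template/probe difference.
    noisy_codeword = [h ^ w for h, w in zip(helper, probe_bits)]
    return digest(decode(noisy_codeword)) == key_hash


template = [1, 0, 1, 1, 0, 0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 1, 1]
stored = commit(template)
close_probe = template[:]
close_probe[0] ^= 1  # one flipped bit in the first block
print(unlock(stored, close_probe))
print(unlock(stored, [b ^ 1 for b in template]))
```

In this toy version a probe that differs from the enrolled template in a few bits still unlocks, while the bitwise complement does not. The stored `helper` hides the template only to the extent that the template itself is unpredictable, which is why low-entropy templates weaken the protection.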

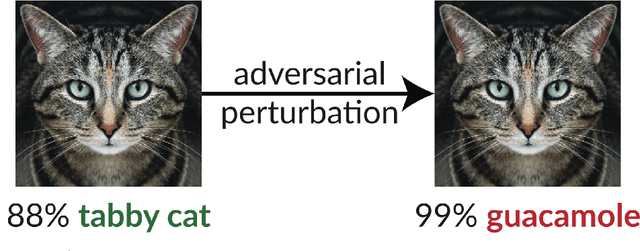

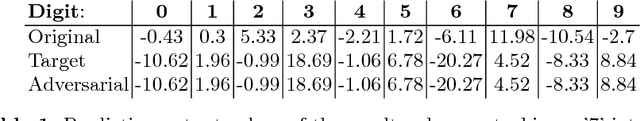

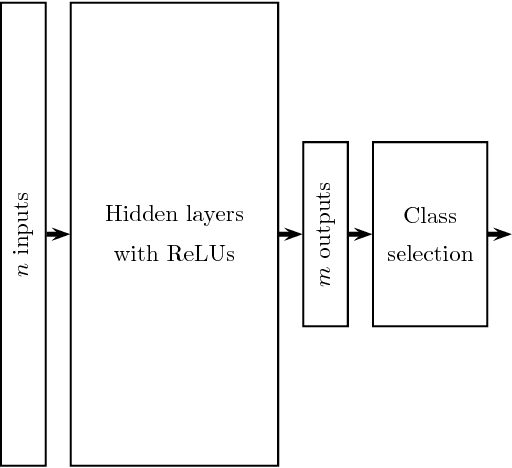

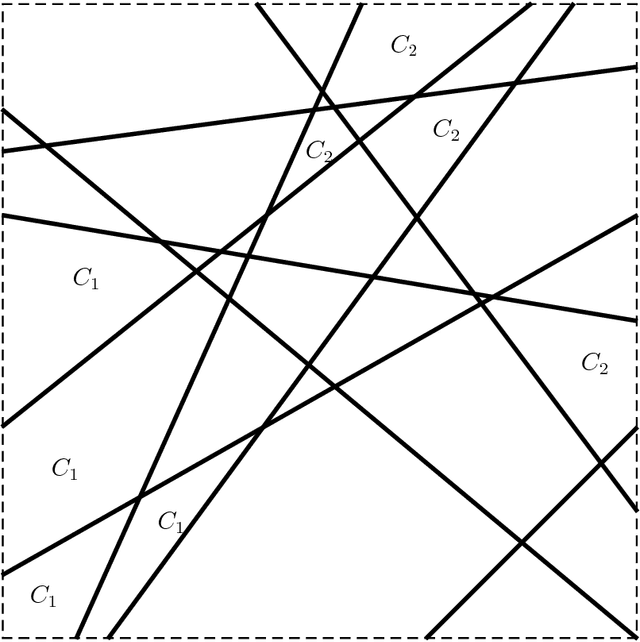

A Simple Explanation for the Existence of Adversarial Examples with Small Hamming Distance

Jan 30, 2019

Abstract: The existence of adversarial examples, in which an imperceptible change in the input can fool well-trained neural networks, was experimentally discovered by Szegedy et al. in 2013, who called them "Intriguing properties of neural networks". Since then, this topic has become one of the hottest research areas within machine learning, but the ease with which we can switch between any two decisions in targeted attacks is still far from being understood, and in particular it is not clear which parameters determine the number of input coordinates we have to change in order to mislead the network. In this paper we develop a simple mathematical framework which enables us to think about this baffling phenomenon from a fresh perspective, turning it into a natural consequence of the geometry of $\mathbb{R}^n$ with the $L_0$ (Hamming) metric, which can be quantitatively analyzed. In particular, we explain why we should expect to find targeted adversarial examples with Hamming distance of roughly $m$ in arbitrarily deep neural networks which are designed to distinguish between $m$ input classes.
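
As a small illustration of the metric involved, the $L_0$ (Hamming) distance between two inputs counts the coordinates that differ, regardless of how large each change is. The function name and the example vectors below are hypothetical and are not taken from the paper.

```python
def hamming_distance(x, y):
    # Number of coordinates in which x and y differ (the L0 distance).
    if len(x) != len(y):
        raise ValueError("inputs must have the same length")
    return sum(1 for a, b in zip(x, y) if a != b)


original = [0.2, 0.5, 0.9, 0.1]
perturbed = [0.2, 1.0, 0.9, 0.0]  # two coordinates changed, by any amount
print(hamming_distance(original, perturbed))  # prints 2
```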

HoneyFaces: Increasing the Security and Privacy of Authentication Using Synthetic Facial Images

Nov 11, 2016

Abstract: One of the main challenges faced by biometric-based authentication systems is the need to offer secure authentication while maintaining the privacy of the biometric data. Previous solutions, such as Secure Sketch and Fuzzy Extractors, rely on assumptions that cannot be guaranteed in practice, and often affect the authentication accuracy. In this paper, we introduce HoneyFaces: the concept of adding a large set of synthetic faces (indistinguishable from real) into the biometric "password file". This password inflation protects the privacy of users and increases the security of the system without affecting the accuracy of the authentication. In particular, privacy for the real users is provided by "hiding" them among a large number of fake users (as the distributions of synthetic and real faces are equal). In addition to maintaining the authentication accuracy, and thus not affecting the security of the authentication process, HoneyFaces offers several security improvements: increased exfiltration hardness, improved leakage detection, and the ability to use a two-server setting as in HoneyWords. Finally, HoneyFaces can be combined with other security and privacy mechanisms for biometric data. We implemented the HoneyFaces system and tested it with a password file composed of 270 real users. The "password file" was then inflated to accommodate up to $2^{36.5}$ users (resulting in a 56.6 TB "password file"). At the same time, the inclusion of additional faces does not affect the true acceptance rate or false acceptance rate, which were 93.33% and 0.01%, respectively.
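
The two figures quoted above imply a rough per-user storage cost, which can be checked with a back-of-the-envelope calculation. The abstract does not say whether "TB" means $10^{12}$ or $2^{40}$ bytes, so the sketch below computes both under that stated assumption; the function name is hypothetical.

```python
def bytes_per_user(total_bytes, num_users):
    # Average storage per entry in the inflated "password file".
    return total_bytes / num_users


NUM_USERS = 2 ** 36.5  # from the abstract
SIZE_TB = 56.6         # from the abstract

# Assumption: decimal terabytes (10**12 bytes).
decimal_estimate = bytes_per_user(SIZE_TB * 10**12, NUM_USERS)
# Assumption: binary terabytes (2**40 bytes).
binary_estimate = bytes_per_user(SIZE_TB * 2**40, NUM_USERS)

print(round(decimal_estimate), round(binary_estimate))
```

Under either convention the result is on the order of several hundred bytes per stored face.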
