Or Raveh

Multi-Player Approaches for Dueling Bandits

May 25, 2024

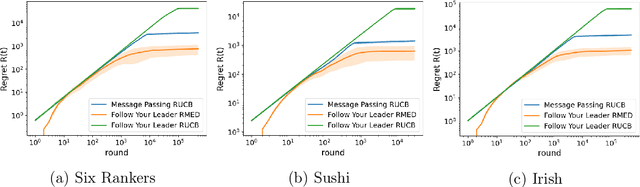

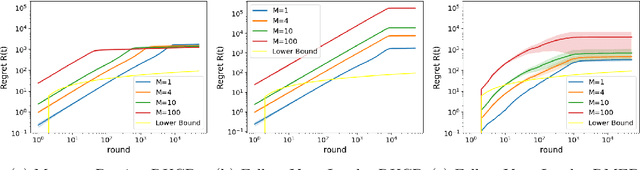

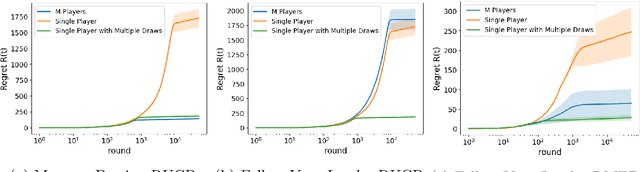

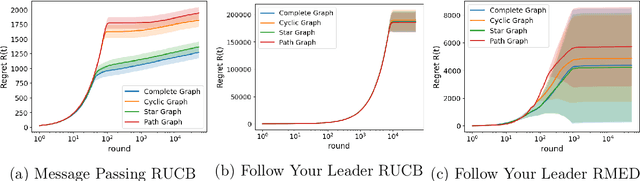

Abstract: Various approaches have emerged for multi-armed bandits in distributed systems. The multiplayer dueling bandit problem, common in scenarios with only preference-based information like human feedback, introduces challenges related to controlling collaborative exploration of non-informative arm pairs, but has received little attention. To fill this gap, we demonstrate that the direct use of a Follow Your Leader black-box approach matches the lower bound for this setting when utilizing known dueling bandit algorithms as a foundation. Additionally, we analyze a message-passing fully distributed approach with a novel Condorcet-winner recommendation protocol, resulting in expedited exploration in many cases. Our experimental comparisons reveal that our multiplayer algorithms surpass single-player benchmark algorithms, underscoring their efficacy in addressing the nuanced challenges of the multiplayer dueling bandit setting.

PAC Guarantees for Concurrent Reinforcement Learning with Restricted Communication

May 23, 2019

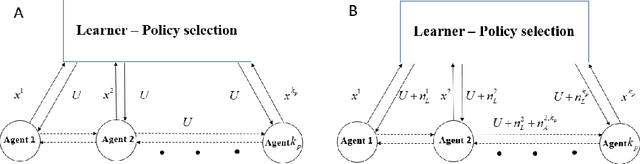

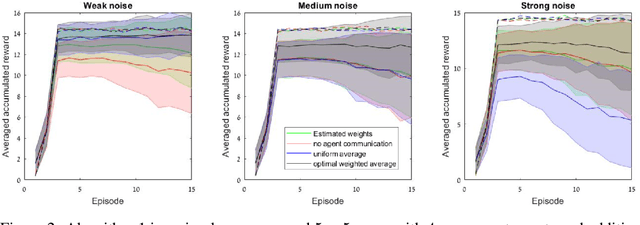

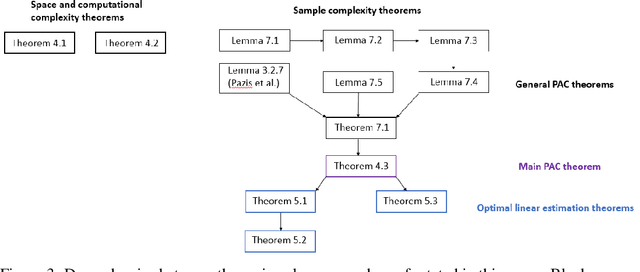

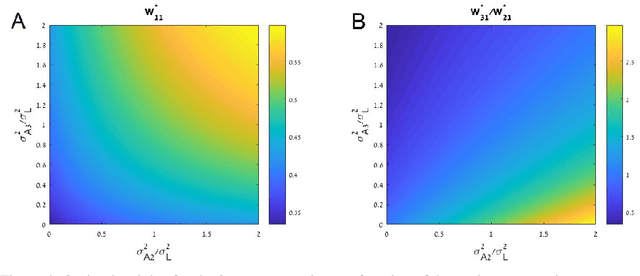

Abstract: We develop model-free PAC performance guarantees for multiple concurrent MDPs, extending recent works where a single learner interacts with multiple non-interacting agents in a noise-free environment. Our framework allows noisy and resource-limited communication between agents, and develops novel PAC guarantees in this extended setting. By allowing communication between the agents themselves, we suggest improved PAC-exploration algorithms that can overcome the communication noise and lead to improved sample complexity bounds. We provide a theoretically motivated algorithm that optimally combines information from the resource-limited agents, thereby analyzing the interaction between noise and communication constraints that are ubiquitous in real-world systems. We present empirical results for a simple task that support our theoretical formulations and improve upon naive information fusion methods.
