Omar Elezabi

INRetouch: Context Aware Implicit Neural Representation for Photography Retouching

Dec 05, 2024

Abstract: Professional photo editing remains challenging, requiring extensive knowledge of imaging pipelines and significant expertise. With the ubiquity of smartphone photography, there is an increasing demand for accessible yet sophisticated image editing solutions. While recent deep learning approaches, particularly style transfer methods, have attempted to automate this process, they often struggle with output fidelity, editing control, and complex retouching capabilities. We propose a novel retouch transfer approach that learns from professional edits through before-after image pairs, enabling precise replication of complex editing operations. To facilitate this research direction, we introduce a comprehensive Photo Retouching Dataset comprising 100,000 high-quality images edited using over 170 professional Adobe Lightroom presets. We develop a context-aware Implicit Neural Representation that learns to apply edits adaptively based on image content and context, requiring no pretraining and capable of learning from a single example. Our method extracts implicit transformations from reference edits and adaptively applies them to new images. Through extensive evaluation, we demonstrate that our approach not only surpasses existing methods in photo retouching but also enhances performance in related image reconstruction tasks like Gamut Mapping and Raw Reconstruction. By bridging the gap between professional editing capabilities and automated solutions, our work presents a significant step toward making sophisticated photo editing more accessible while maintaining high-fidelity results. See the [Project Page](https://omaralezaby.github.io/inretouch) for more results and information about code and dataset availability.
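As a rough illustration of learning an edit from a single before-after pair and applying it to a new image, the sketch below fits a per-channel gain and offset by least squares. This is a deliberately simple stand-in, not the context-aware Implicit Neural Representation described in the abstract: it is global, linear, and ignores image content. The function names `fit_channel_affine` and `apply_channel_affine` are hypothetical, and pixel values are assumed to lie in [0, 1].

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # assumed RGB values in [0, 1]


def fit_channel_affine(before: List[Pixel], after: List[Pixel]) -> List[Tuple[float, float]]:
    """Fit (gain, offset) per channel so that after ≈ gain * before + offset.

    Hypothetical helper: a global linear stand-in for a learned retouch,
    estimated from one before/after pair by ordinary least squares.
    """
    n = len(before)
    params = []
    for c in range(3):
        xs = [p[c] for p in before]
        ys = [p[c] for p in after]
        mean_x = sum(xs) / n
        mean_y = sum(ys) / n
        var_x = sum((x - mean_x) ** 2 for x in xs)
        cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        # Fall back to the identity gain if the channel is constant.
        gain = cov_xy / var_x if var_x > 0 else 1.0
        offset = mean_y - gain * mean_x
        params.append((gain, offset))
    return params


def apply_channel_affine(image: List[Pixel], params: List[Tuple[float, float]]) -> List[Pixel]:
    """Apply the fitted per-channel transform to a new image, clamped to [0, 1]."""
    return [
        tuple(min(1.0, max(0.0, g * p[c] + o)) for c, (g, o) in enumerate(params))
        for p in image
    ]


# Usage: learn from one reference edit, then apply it to an unseen image.
reference_before = [(0.0, 0.2, 0.4), (0.5, 0.5, 0.5), (1.0, 0.8, 0.6)]
reference_after = [tuple(0.5 * v + 0.1 for v in p) for p in reference_before]
learned = fit_channel_affine(reference_before, reference_after)
retouched = apply_channel_affine([(0.4, 0.4, 0.4)], learned)
```

A content-adaptive method would replace the single global transform with one that varies per pixel and per image context; the sketch only shows the fit-once, apply-elsewhere workflow.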

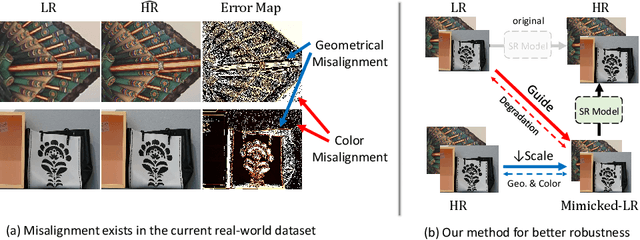

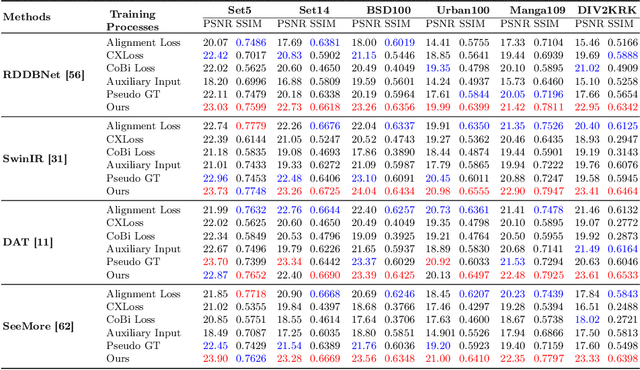

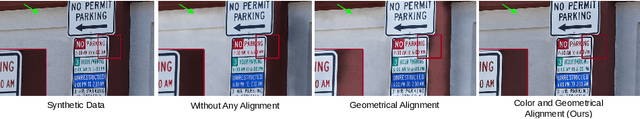

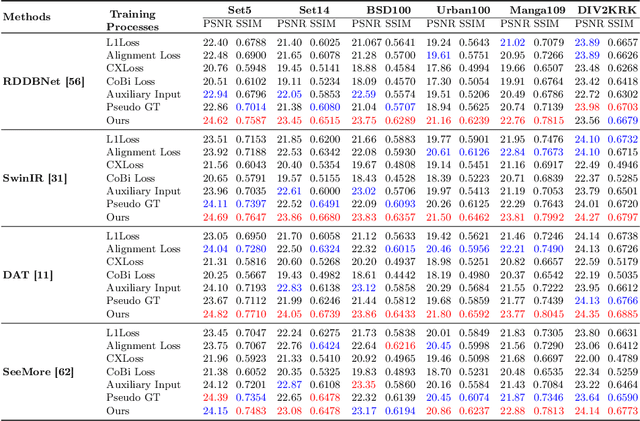

Enhanced Super-Resolution Training via Mimicked Alignment for Real-World Scenes

Oct 07, 2024

Abstract: Image super-resolution methods have made significant strides with deep learning techniques and ample training data. However, they face challenges due to inherent misalignment between low-resolution (LR) and high-resolution (HR) pairs in real-world datasets. In this study, we propose a novel plug-and-play module designed to mitigate these misalignment issues by aligning LR inputs with HR images during training. Specifically, our approach involves mimicking a novel LR sample that aligns with HR while preserving the degradation characteristics of the original LR samples. This module seamlessly integrates with any SR model, enhancing robustness against misalignment. Importantly, it can be easily removed during inference, so it adds no parameters to the conventional SR model. We comprehensively evaluate our method on synthetic and real-world datasets, demonstrating its effectiveness across a spectrum of SR models, including traditional CNNs and state-of-the-art Transformers. The source code will be made publicly available at https://github.com/omarAlezaby/Mimicked_Ali.

Simple Image Signal Processing using Global Context Guidance

Apr 17, 2024

Abstract: In modern smartphone cameras, the Image Signal Processor (ISP) is the core element that converts the RAW readings from the sensor into perceptually pleasant RGB images for the end users. The ISP is typically proprietary and handcrafted and consists of several blocks such as white balance, color correction, and tone mapping. Deep learning-based ISPs aim to transform RAW images into DSLR-like RGB images using deep neural networks. However, most learned ISPs are trained using patches (small regions) due to computational limitations. Such methods lack global context, which limits their efficacy on full-resolution images and harms their ability to capture global properties such as color constancy or illumination. First, we propose a novel module that can be integrated into any neural ISP to capture the global context information from the full RAW images. Second, we propose an efficient and simple neural ISP that utilizes our proposed module. Our model achieves state-of-the-art results on different benchmarks using diverse and real smartphone images.
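To make the patch-versus-global-context point concrete, the sketch below computes one descriptor from the full image and attaches it to every pixel of a cropped patch, so a patch-level model would still see image-wide statistics. It is only an assumed illustration: the descriptor here is a plain per-channel mean, not the module proposed in the abstract, and the names `global_descriptor`, `extract_patch`, and `condition_patch` are hypothetical.

```python
from typing import List

Image = List[List[List[float]]]  # H x W x C nested lists


def global_descriptor(image: Image) -> List[float]:
    """Per-channel mean over the full image (assumed stand-in for global context)."""
    h, w, c = len(image), len(image[0]), len(image[0][0])
    return [
        sum(image[y][x][k] for y in range(h) for x in range(w)) / (h * w)
        for k in range(c)
    ]


def extract_patch(image: Image, top: int, left: int, size: int) -> Image:
    """Crop a size x size patch, as patch-based training would."""
    return [row[left:left + size] for row in image[top:top + size]]


def condition_patch(patch: Image, descriptor: List[float]) -> Image:
    """Concatenate the global descriptor onto every pixel's channel vector."""
    return [[pixel + descriptor for pixel in row] for row in patch]


# Usage: a 4x4 two-channel image whose channels encode row and column index.
full_image = [[[float(y), float(x)] for x in range(4)] for y in range(4)]
context = global_descriptor(full_image)
patch = extract_patch(full_image, top=0, left=0, size=2)
conditioned = condition_patch(patch, context)
```

The design point is that the descriptor is computed once from the full-resolution input, so the cost of global context does not grow with the number of patches processed.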
