Omar Bahri

M-CELS: Counterfactual Explanation for Multivariate Time Series Data Guided by Learned Saliency Maps

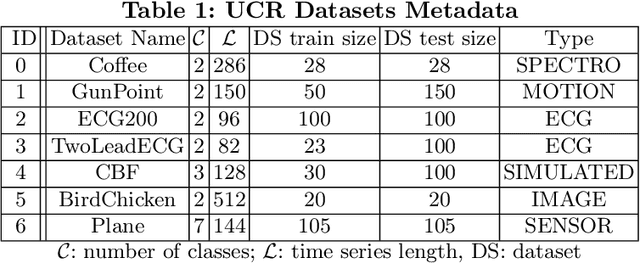

Nov 04, 2024

Abstract: Over the past decade, multivariate time series classification has received great attention. Machine learning (ML) models for multivariate time series classification have made significant strides and achieved impressive success in a wide range of applications and tasks. The challenge of many state-of-the-art ML models is a lack of transparency and interpretability. In this work, we introduce M-CELS, a counterfactual explanation model designed to enhance interpretability in multidimensional time series classification tasks. Our experimental validation involves comparing M-CELS with leading state-of-the-art baselines, utilizing seven real-world time-series datasets from the UEA repository. The results demonstrate the superior performance of M-CELS in terms of validity, proximity, and sparsity, reinforcing its effectiveness in providing transparent insights into the decisions of machine learning models applied to multivariate time series data.

Info-CELS: Informative Saliency Map Guided Counterfactual Explanation

Oct 27, 2024

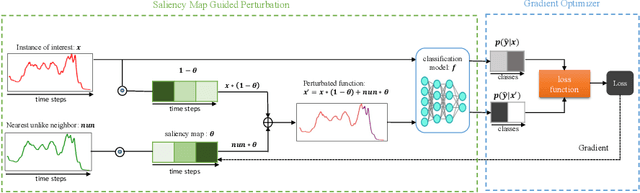

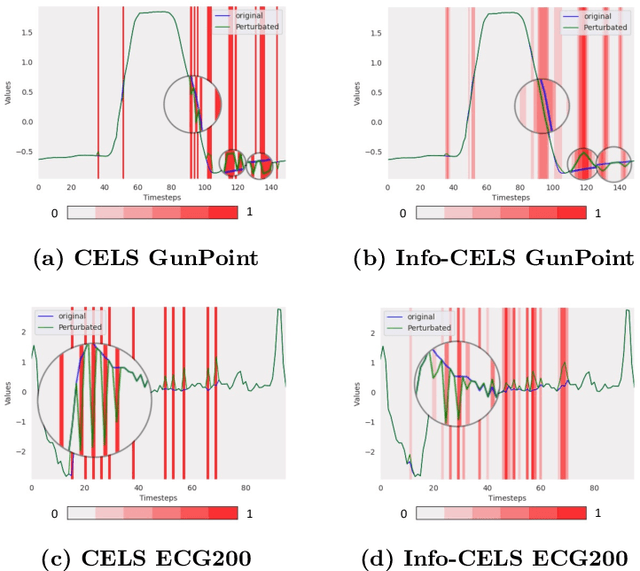

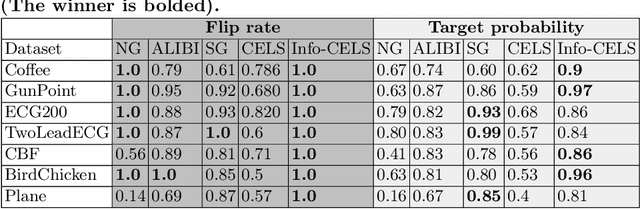

Abstract: As the demand for interpretable machine learning approaches continues to grow, there is an increasing necessity for human involvement in providing informative explanations for model decisions. This is necessary for building trust and transparency in AI-based systems, leading to the emergence of the Explainable Artificial Intelligence (XAI) field. Recently, a novel counterfactual explanation model, CELS, has been introduced. CELS learns a saliency map for the interest of an instance and generates a counterfactual explanation guided by the learned saliency map. While CELS represents the first attempt to exploit learned saliency maps not only to provide intuitive explanations for the reason behind the decision made by the time series classifier but also to explore post hoc counterfactual explanations, it exhibits limitations in terms of high validity for the sake of ensuring high proximity and sparsity. In this paper, we present an enhanced approach that builds upon CELS. While the original model achieved promising results in terms of sparsity and proximity, it faced limitations in validity. Our proposed method addresses this limitation by removing mask normalization to provide more informative and valid counterfactual explanations. Through extensive experimentation on datasets from various domains, we demonstrate that our approach outperforms the CELS model, achieving higher validity and producing more informative explanations.

Shapelet-Based Counterfactual Explanations for Multivariate Time Series

Aug 22, 2022

Abstract: As machine learning and deep learning models have become highly prevalent in a multitude of domains, the main reservation in their adoption for decision-making processes is their black-box nature. The Explainable Artificial Intelligence (XAI) paradigm has gained a lot of momentum lately due to its ability to reduce models' opacity. XAI methods have not only increased stakeholders' trust in the decision process but also helped developers ensure its fairness. Recent efforts have been invested in creating transparent models and post-hoc explanations. However, fewer methods have been developed for time series data, and even fewer when it comes to multivariate datasets. In this work, we take advantage of the inherent interpretability of shapelets to develop a model-agnostic multivariate time series (MTS) counterfactual explanation algorithm. Counterfactuals can have a tremendous impact on making black-box models explainable by indicating what changes have to be performed on the input to change the final decision. We test our approach on a real-life solar flare prediction dataset and prove that our approach produces high-quality counterfactuals. Moreover, a comparison to the only MTS counterfactual generation algorithm shows that, in addition to being visually interpretable, our explanations are superior in terms of proximity, sparsity, and plausibility.
