Olivier Colot

SPOCC: Scalable POssibilistic Classifier Combination -- toward robust aggregation of classifiers

Aug 18, 2019

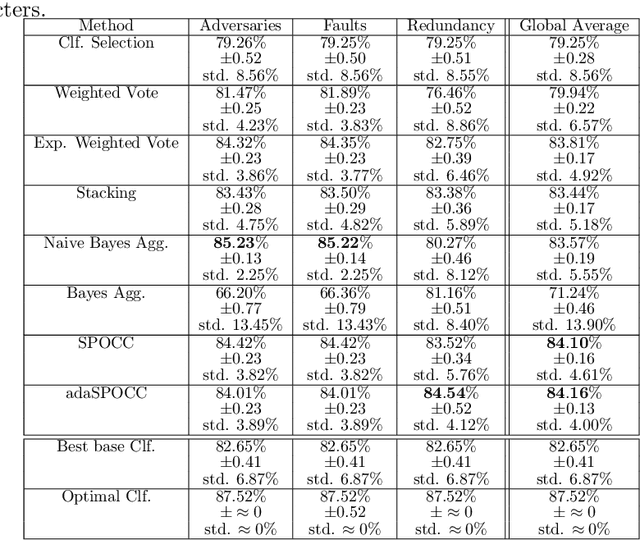

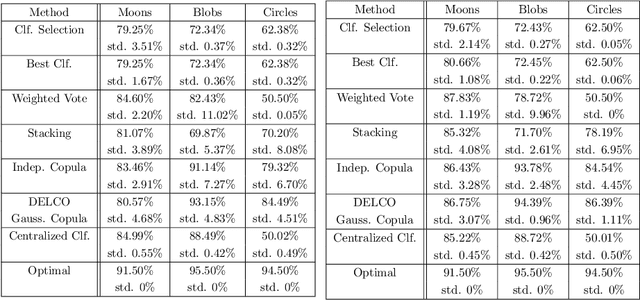

Abstract: We investigate a problem in which each member of a group of learners is trained separately to solve the same classification task. Each learner has access to a training dataset (possibly with overlap across learners), but each trained classifier can be evaluated on a validation dataset. We propose a new approach to aggregate the learner predictions in the possibility theory framework. For each classifier prediction, we build a possibility distribution assessing how likely it is that the classifier prediction is correct, using frequentist probabilities estimated on the validation set. The possibility distributions are aggregated using an adaptive t-norm that can accommodate dependency and poor accuracy of the classifier predictions. We prove that the proposed approach possesses several desirable robustness properties for classifier combination.
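To make the pipeline described in this abstract more concrete, here is a minimal sketch of the general idea: estimate the probability of each true class given a classifier's prediction from validation counts, turn those probabilities into a possibility distribution, and combine the distributions with a t-norm. Everything in it is an assumption made for illustration. The function names are hypothetical, the probability-to-possibility step uses the standard Dubois-Prade transform, and the fixed min and product t-norms stand in for the adaptive t-norm of the paper, which the abstract does not specify.

```python
import numpy as np

def conditional_probs(confusion, predicted):
    """Estimate P(true class | predicted class) from validation counts.

    confusion[y, c] counts validation samples of true class y that the
    classifier labelled c (hypothetical layout chosen for this sketch).
    """
    column = np.asarray(confusion, dtype=float)[:, predicted]
    return column / column.sum()

def possibility_from_probs(p):
    """Dubois-Prade transform: pi_i = sum of all p_j with p_j <= p_i."""
    p = np.asarray(p, dtype=float)
    return np.array([p[p <= p_i].sum() for p_i in p])

def combine(possibilities, tnorm="min"):
    """Combine possibility distributions with a fixed t-norm, then rescale
    so that the largest value equals 1."""
    stacked = np.asarray(possibilities, dtype=float)
    if tnorm == "min":
        fused = stacked.min(axis=0)
    elif tnorm == "product":
        fused = stacked.prod(axis=0)
    else:
        raise ValueError("tnorm must be 'min' or 'product'")
    top = fused.max()
    return fused / top if top > 0 else fused

# Two classifiers, three classes; the counts are made up for illustration.
conf_a = [[50, 10, 5], [30, 60, 5], [20, 30, 90]]
conf_b = [[40, 5, 10], [10, 70, 10], [50, 25, 80]]
pi_a = possibility_from_probs(conditional_probs(conf_a, predicted=0))
pi_b = possibility_from_probs(conditional_probs(conf_b, predicted=1))
fused = combine([pi_a, pi_b], tnorm="min")
decision = int(np.argmax(fused))
```

The min t-norm is idempotent, so combining two identical distributions returns that distribution unchanged, whereas the product t-norm reinforces agreement. A family of t-norms that moves between such behaviors is one plausible reading of how an aggregation rule could accommodate dependent classifiers, but that reading is an assumption here rather than a statement of the paper's construction.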

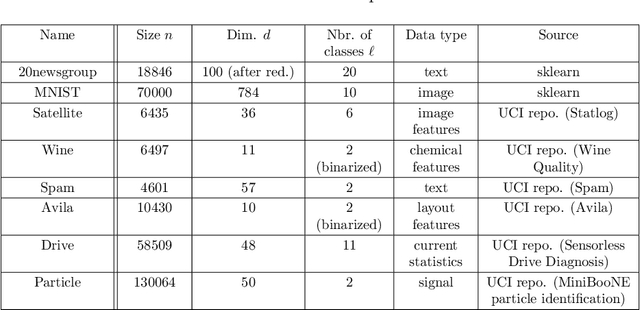

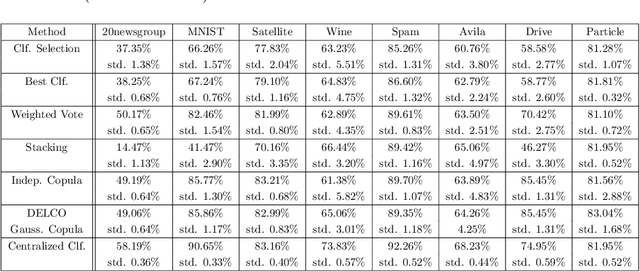

Decentralized learning with budgeted network load using Gaussian copulas and classifier ensembles

Apr 26, 2018

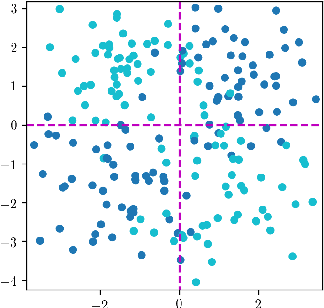

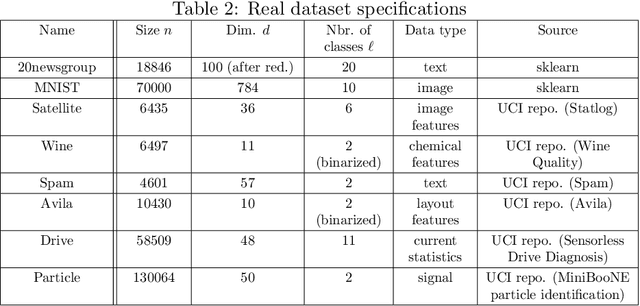

Abstract: We examine a network of learners that address the same classification task but must learn from different data sets. The learners can share a limited portion of their data sets so as to keep the network load low. We introduce DELCO (standing for Decentralized Ensemble Learning with COpulas), a new approach in which the shared data and the trained models are sent to a central machine, which builds an ensemble of classifiers. The proposed method aggregates the base classifiers using a probabilistic model relying on Gaussian copulas. Experiments on ensembles of logistic regressors demonstrate competitive accuracy and increased robustness compared to gold standard approaches. A companion Python implementation can be downloaded at https://github.com/john-klein/DELCO
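
For readers unfamiliar with the term, the standard Gaussian copula density is recalled below. This is the textbook definition only, not DELCO's full aggregation model, which the abstract does not detail. The symbols are assumptions introduced here: $K$ is the number of base classifiers, $u_k \in (0,1)$ are the marginal cumulative probabilities, $R$ is a $K \times K$ correlation matrix, $I$ is the identity matrix, and $\Phi^{-1}$ is the standard normal quantile function.

```latex
c_R(u_1, \dots, u_K)
  = \frac{1}{\sqrt{\det R}}
    \exp\!\left( -\tfrac{1}{2}\, z^{\top} \left( R^{-1} - I \right) z \right),
\qquad
z = \left( \Phi^{-1}(u_1), \dots, \Phi^{-1}(u_K) \right)^{\top}
```

When $R = I$ the density equals 1 everywhere, which corresponds to independence; off-diagonal entries of $R$ encode pairwise dependence between the variables being coupled.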
