Oliver Schütze

MMD-Newton Method for Multi-objective Optimization

May 20, 2025

Abstract: Maximum mean discrepancy (MMD) has been widely employed to measure the distance between probability distributions. In this paper, we propose using MMD to solve continuous multi-objective optimization problems (MOPs). For solving MOPs, a common approach is to minimize the distance (e.g., Hausdorff) between a finite approximate set of the Pareto front and a reference set. Viewing these two sets as empirical measures, we propose using MMD to measure the distance between them. To minimize the MMD value, we provide the analytical expression of its gradient and Hessian matrix w.r.t. the search variables, and use them to devise a novel set-oriented, MMD-based Newton (MMDN) method. Also, we analyze the theoretical properties of MMD's gradient and Hessian, including the first-order stationary condition and the eigenspectrum of the Hessian, which are important for verifying the correctness of MMDN. To solve complicated problems, we propose hybridizing MMDN with multiobjective evolutionary algorithms (MOEAs), where we first execute an EA for several iterations to get close to the global Pareto front and then warm-start MMDN with the result of the MOEA to efficiently refine the approximation. We empirically test the hybrid algorithm on 11 widely used benchmark problems, and the results show the hybrid (MMDN + MOEA) can achieve a much better optimization accuracy than EA alone with the same computation budget.
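To make the idea of treating two finite sets as empirical measures concrete, here is a minimal sketch of a squared MMD estimate between an approximation set and a reference set. The Gaussian kernel, the `bandwidth` parameter, the function names, and the sample points are illustrative assumptions, not taken from the paper, and the sketch does not include the gradient, the Hessian, or the Newton step.

```python
import math


def gaussian_kernel(a, b, bandwidth=1.0):
    # Assumed kernel choice: Gaussian (RBF) with a hypothetical bandwidth.
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mmd_squared(X, Y, bandwidth=1.0):
    # Biased estimate of MMD^2 between the empirical measures of X and Y:
    # mean k(x, x') + mean k(y, y') - 2 * mean k(x, y).
    def mean_kernel(A, B):
        total = sum(gaussian_kernel(a, b, bandwidth) for a in A for b in B)
        return total / (len(A) * len(B))

    return mean_kernel(X, X) + mean_kernel(Y, Y) - 2.0 * mean_kernel(X, Y)


# Hypothetical objective vectors on a linear two-objective front.
approximation = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]
reference = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]
shifted = [(0.2, 1.2), (0.7, 0.7), (1.2, 0.2)]

same = mmd_squared(approximation, reference)
apart = mmd_squared(approximation, shifted)
```

Under these assumptions the value is zero when the two sets coincide and grows as the approximation moves away from the reference set, which is the quantity a set-oriented method would drive down.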

A Newton Method for Hausdorff Approximations of the Pareto Front within Multi-objective Evolutionary Algorithms

May 09, 2024

Abstract: A common goal in evolutionary multi-objective optimization is to find suitable finite-size approximations of the Pareto front of a given multi-objective optimization problem. While many multi-objective evolutionary algorithms have proven to be very efficient in finding good Pareto front approximations, they may need considerable resources or may even fail to obtain optimal or nearly optimal approximations. Here, optimality is implicitly defined by the chosen performance indicator. In this work, we propose a set-based Newton method for Hausdorff approximations of the Pareto front to be used within multi-objective evolutionary algorithms. To this end, we first generalize the previously proposed Newton step for the performance indicator to the treatment of constrained problems and general reference sets. To approximate the target Pareto front, we propose a particular strategy for generating the reference set that utilizes the data gathered by the evolutionary algorithm during its run. Finally, we show the benefit of the Newton method as a post-processing step on several benchmark test functions and different base evolutionary algorithms.
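As a point of reference for the term "Hausdorff approximation", the following minimal sketch computes the classical Hausdorff distance between two finite point sets. The abstract does not say which Hausdorff variant the performance indicator uses, so the classical definition, the function names, and the sample sets are assumptions made only for illustration.

```python
import math


def directed_distance(A, B):
    # Largest distance from a point of A to its nearest neighbour in B.
    return max(min(math.dist(a, b) for b in B) for a in A)


def hausdorff_distance(A, B):
    # Classical Hausdorff distance: the larger of the two directed distances.
    return max(directed_distance(A, B), directed_distance(B, A))


# Hypothetical approximation set and reference set in objective space.
approximation = [(0.0, 0.0), (1.0, 0.0)]
reference = [(0.0, 0.0), (1.0, 0.0), (1.0, 2.0)]

distance = hausdorff_distance(approximation, reference)
```

In this example the reference point `(1.0, 2.0)` has no nearby counterpart in the approximation, so it alone determines the distance. That sensitivity to the single worst-covered point is what the classical definition measures.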

Automatic Model Selection for Neural Networks

May 15, 2019

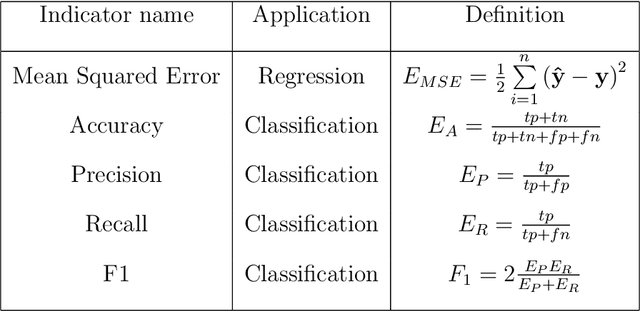

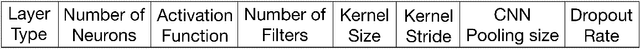

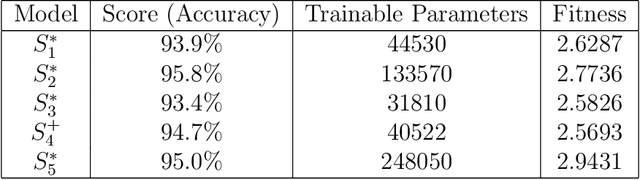

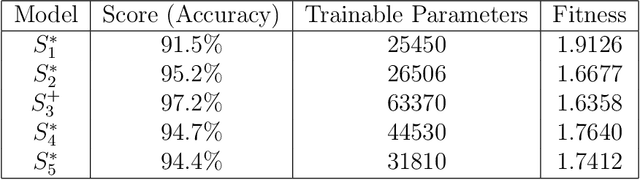

Abstract: Neural networks and deep learning are changing the way that artificial intelligence is being done. Efficiently choosing a suitable network architecture and fine-tuning its hyper-parameters for a specific dataset is a time-consuming task given the staggering number of possible alternatives. In this paper, we address the problem of model selection by means of a fully automated framework for efficiently selecting a neural network model for a given task: classification or regression. The algorithm, named Automatic Model Selection (AMS), is a modified micro-genetic algorithm that automatically and efficiently finds the most suitable neural network model for a given dataset. The main contributions of this method are a simple list-based encoding for neural networks as genotypes in an evolutionary algorithm, new crossover and mutation operators, a fitness function that considers both the accuracy of the model and its complexity, and a method to measure the similarity between two neural networks. AMS is evaluated on two different datasets. By comparing some models obtained with AMS to state-of-the-art models for each dataset, we show that AMS can automatically find efficient neural network models. Furthermore, AMS is computationally efficient and can make use of distributed computing paradigms to further boost its performance.

A Neural Network-Evolutionary Computational Framework for Remaining Useful Life Estimation of Mechanical Systems

May 15, 2019

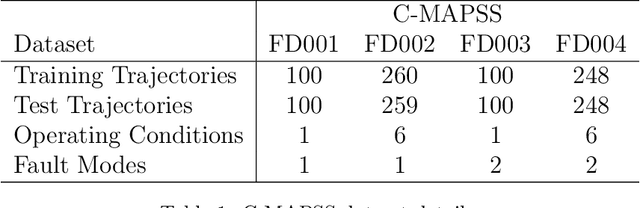

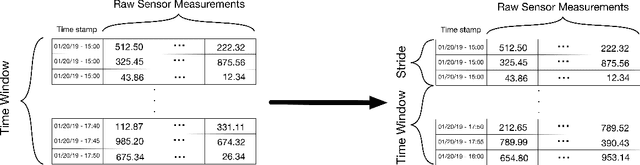

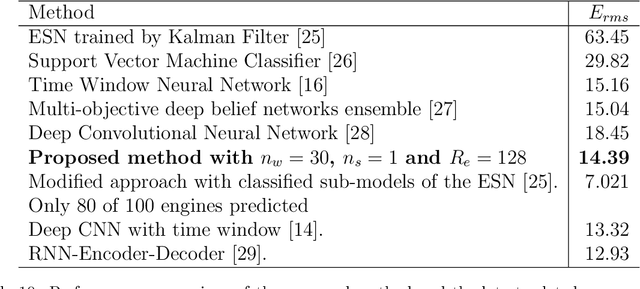

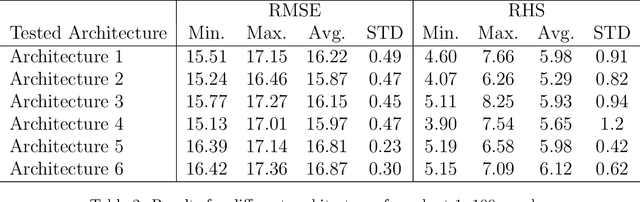

Abstract: This paper presents a framework for estimating the remaining useful life (RUL) of mechanical systems. The framework consists of a multi-layer perceptron and an evolutionary algorithm for optimizing the data-related parameters. The framework makes use of a strided time window to estimate the RUL for mechanical components. Tuning the data-related parameters can become a very time-consuming task. The framework presented here automatically reshapes the data such that the efficiency of the model is increased. Furthermore, the complexity of the model is kept low, e.g., neural networks with few hidden layers and few neurons at each layer. Simple models have several advantages, such as short training times and the ability to run in environments with limited computational resources such as embedded systems. The proposed method is evaluated on the publicly available C-MAPSS dataset, and its accuracy is compared against other state-of-the-art methods for the same dataset.

* Published at Neural Networks 116, (2019) 178-187
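The strided time window mentioned in the abstract above can be illustrated with a minimal sketch that reshapes a run-to-failure sensor series into fixed-length samples. The function name, the flattening of each window into one feature vector, and the linear RUL label (cycles remaining after the last row of the window) are assumptions made for illustration. The paper's actual data-related parameters and labelling scheme are not given in the abstract.

```python
def strided_windows(series, window_size, stride):
    # series: list of per-cycle sensor rows from one run-to-failure record.
    # Returns (features, rul) pairs, one per window start position.
    samples = []
    last_index = len(series) - 1
    for start in range(0, len(series) - window_size + 1, stride):
        end = start + window_size
        # Flatten the window so it can feed a multi-layer perceptron.
        features = [value for row in series[start:end] for value in row]
        # Assumed label: cycles remaining after the window's last row.
        rul = last_index - (end - 1)
        samples.append((features, rul))
    return samples


# Hypothetical record with six cycles and two sensor channels.
series = [[t, 10 * t] for t in range(6)]
samples = strided_windows(series, window_size=3, stride=2)
```

The window size and stride here are examples of the kind of data-related parameters the abstract describes tuning: a larger stride yields fewer, less overlapping samples, and a larger window gives the network more history per sample.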
