Oliver Cobb

Towards Practicable Sequential Shift Detectors

Jul 27, 2023

Abstract:There is a growing awareness of the harmful effects of distribution shift on the performance of deployed machine learning models. Consequently, there is a growing interest in detecting these shifts before associated costs have time to accumulate. However, desiderata of crucial importance to the practicable deployment of sequential shift detectors are typically overlooked by existing works, precluding their widespread adoption. We identify three such desiderata, highlight existing works relevant to their satisfaction, and recommend impactful directions for future research.

Context-Aware Drift Detection

Mar 16, 2022

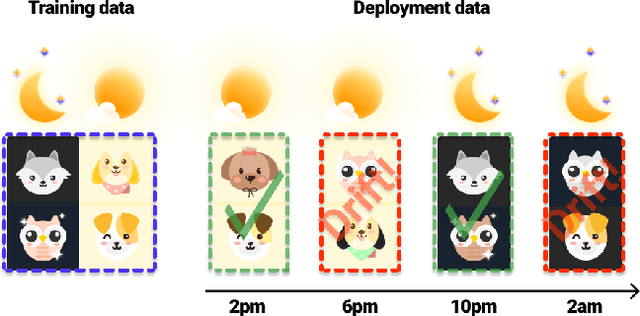

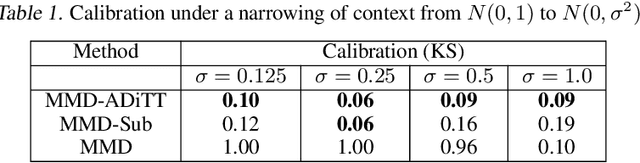

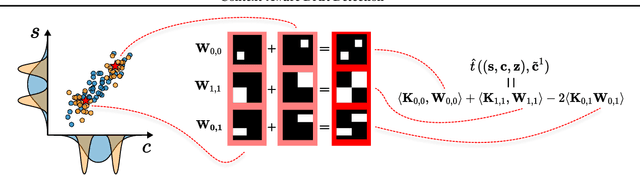

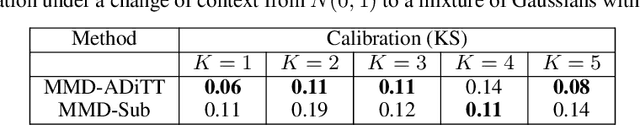

Abstract:When monitoring machine learning systems, two-sample tests of homogeneity form the foundation upon which existing approaches to drift detection build. They are used to test for evidence that the distribution underlying recent deployment data differs from that underlying the historical reference data. Often, however, various factors such as time-induced correlation mean that batches of recent deployment data are not expected to form an i.i.d. sample from the historical data distribution. Instead we may wish to test for differences in the distributions conditional on \textit{context} that is permitted to change. To facilitate this we borrow machinery from the causal inference domain to develop a more general drift detection framework built upon a foundation of two-sample tests for conditional distributional treatment effects. We recommend a particular instantiation of the framework based on maximum conditional mean discrepancies. We then provide an empirical study demonstrating its effectiveness for various drift detection problems of practical interest, such as detecting drift in the distributions underlying subpopulations of data in a manner that is insensitive to their respective prevalences. The study additionally demonstrates applicability to ImageNet-scale vision problems.

Sequential Multivariate Change Detection with Calibrated and Memoryless False Detection Rates

Aug 02, 2021

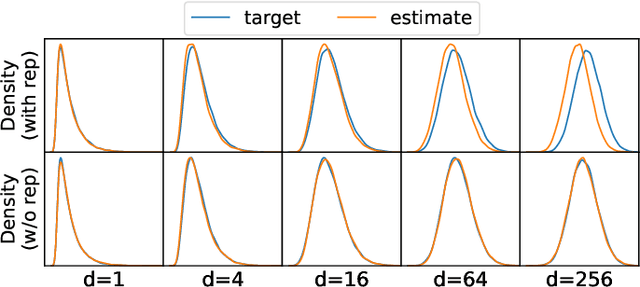

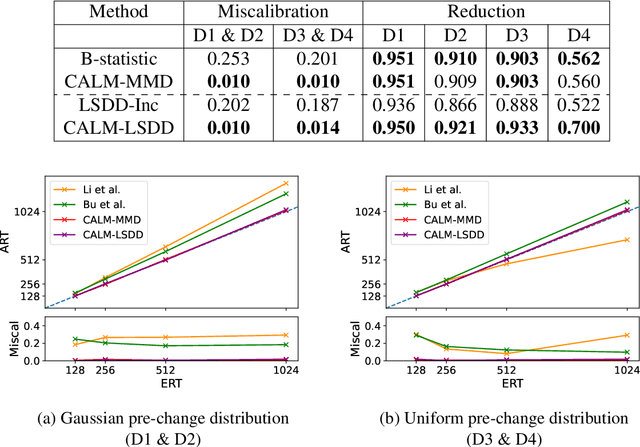

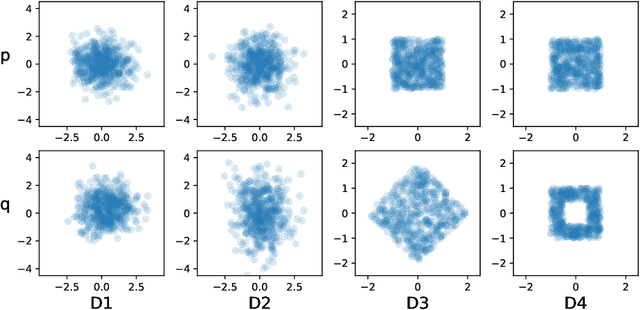

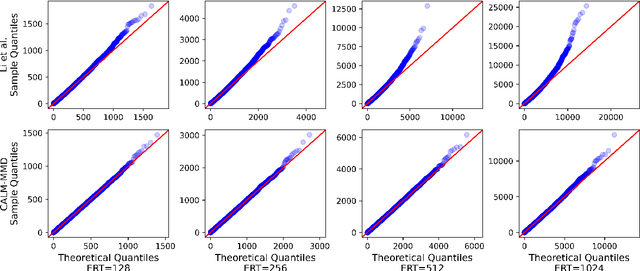

Abstract:Responding appropriately to the detections of a sequential change detector requires knowledge of the rate at which false positives occur in the absence of change. When the pre-change and post-change distributions are unknown, setting detection thresholds to achieve a desired false positive rate is challenging, even when there exists a large number of samples from the reference distribution. Existing works resort to setting time-invariant thresholds that focus on the expected runtime of the detector in the absence of change, either bounding it loosely from below or targeting it directly but with asymptotic arguments that we show cause significant miscalibration in practice. We present a simulation-based approach to setting time-varying thresholds that allows a desired expected runtime to be targeted with a 20x reduction in miscalibration whilst additionally keeping the false positive rate constant across time steps. Whilst the approach to threshold setting is metric agnostic, we show that when using the popular and powerful quadratic time MMD estimator, thoughtful structuring of the computation can reduce the cost during configuration from $O(N^2B)$ to $O(N^2+NB)$ and during operation from $O(N^2)$ to $O(N)$, where $N$ is the number of reference samples and $B$ the number of bootstrap samples. Code is made available as part of the open-source Python library \texttt{alibi-detect}.

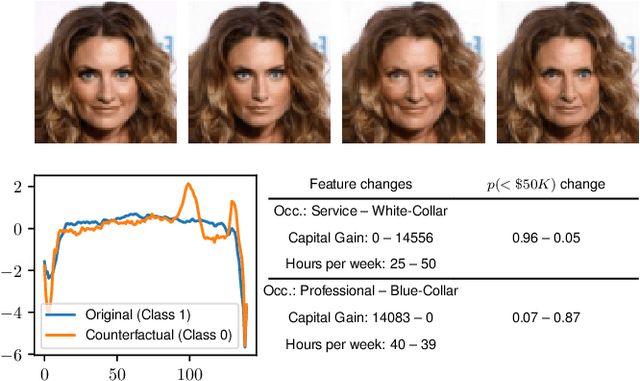

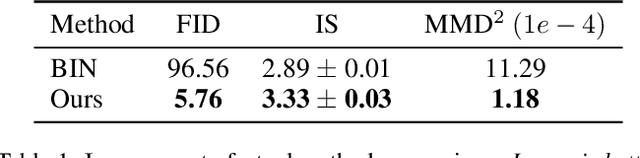

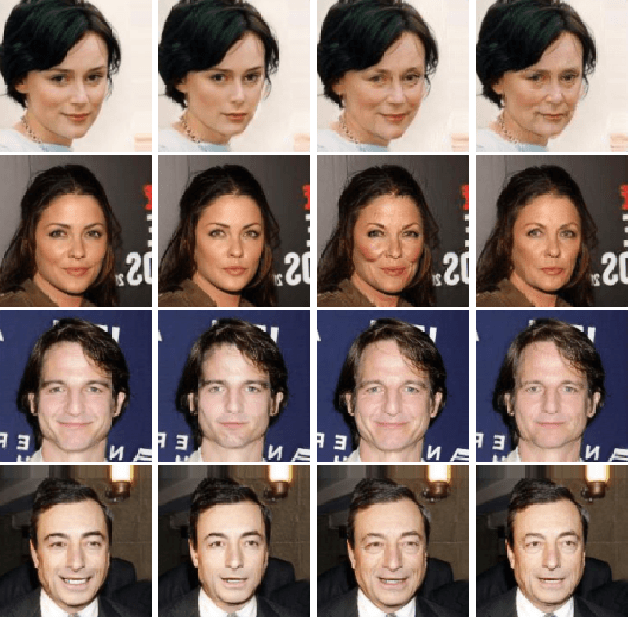

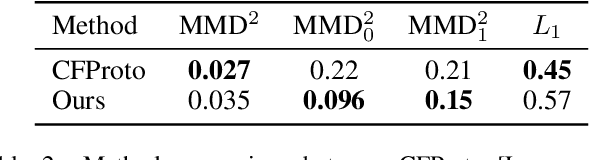

Conditional Generative Models for Counterfactual Explanations

Jan 25, 2021

Abstract:Counterfactual instances offer human-interpretable insight into the local behaviour of machine learning models. We propose a general framework to generate sparse, in-distribution counterfactual model explanations which match a desired target prediction with a conditional generative model, allowing batches of counterfactual instances to be generated with a single forward pass. The method is flexible with respect to the type of generative model used as well as the task of the underlying predictive model. This allows straightforward application of the framework to different modalities such as images, time series or tabular data as well as generative model paradigms such as GANs or autoencoders and predictive tasks like classification or regression. We illustrate the effectiveness of our method on image (CelebA), time series (ECG) and mixed-type tabular (Adult Census) data.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge