Oleg Shipitko

TomoSLAM: factor graph optimization for rotation angle refinement in microtomography

Nov 10, 2021

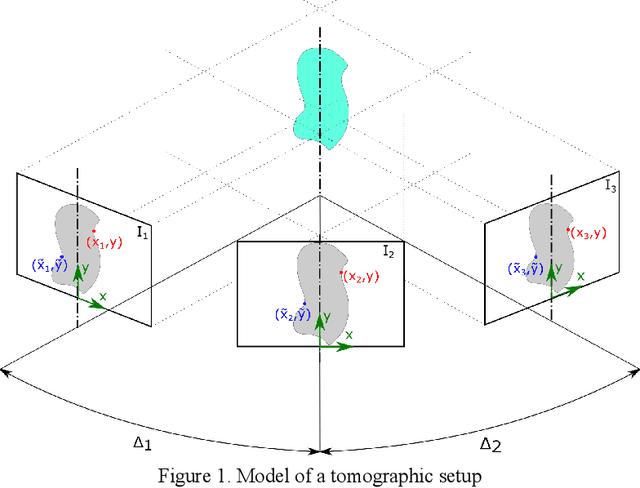

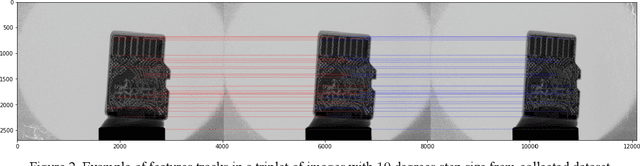

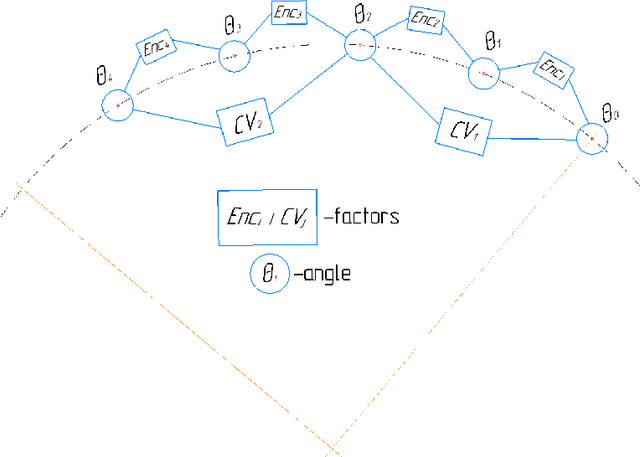

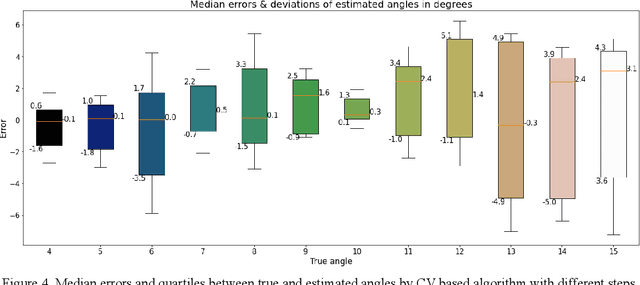

Abstract: In computed tomography (CT), the relative trajectories of a sample, a detector, and a signal source are traditionally considered to be known, since they result from the intentional, preprogrammed movement of the instrument parts. However, due to mechanical backlash, rotation sensor measurement errors, and thermal deformations, the real trajectory differs from the desired one. This degrades the quality of the tomographic reconstruction. Neither calibration nor preliminary adjustment of the device completely eliminates the trajectory inaccuracy, yet both significantly increase the cost of instrument maintenance. A number of approaches to this problem are based on automatically refining the estimated positions of the source and the sensor relative to the sample for each projection (at each time step) during the reconstruction process. A similar problem of position refinement while observing different images of an object from different angles is well known in robotics (particularly in mobile robots and self-driving vehicles) and is called Simultaneous Localization And Mapping (SLAM). The scientific novelty of this work is to consider the problem of trajectory refinement in microtomography as a SLAM problem. This is achieved by extracting Speeded Up Robust Features (SURF) from X-ray projections, filtering matches with Random Sample Consensus (RANSAC), calculating angles between projections, and using them in a factor graph in combination with stepper motor control signals in order to refine rotation angles.
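The angle refinement step can be pictured as a small factor graph over the rotation angles. The sketch below is not the authors' implementation: it assumes every factor is a Gaussian constraint on an angle difference, which turns the graph into a weighted linear least-squares problem solved with NumPy. Motor factors come from the commanded stepper increments, image factors stand in for the SURF/RANSAC angle estimates between projection pairs, and the function name `refine_angles`, the noise levels, and the example numbers are all hypothetical.

```python
import numpy as np

def refine_angles(n, motor_steps, image_angles, sigma_motor=0.5, sigma_image=0.1):
    """Refine n rotation angles (degrees) by weighted linear least squares.

    n            -- number of projections (variables theta_0 .. theta_{n-1})
    motor_steps  -- n-1 commanded increments, each a factor on theta_{i+1} - theta_i
    image_angles -- (i, j, angle) image-based estimates of theta_j - theta_i
    sigma_*      -- assumed standard deviations of each factor type (hypothetical)
    """
    rows, rhs, weights = [], [], []

    # Prior factor anchoring theta_0 = 0 with a tiny sigma (1e-3) to fix the gauge.
    row = np.zeros(n)
    row[0] = 1.0
    rows.append(row); rhs.append(0.0); weights.append(1e3)

    # Odometry-like factors from the stepper motor control signals.
    for i, step in enumerate(motor_steps):
        row = np.zeros(n)
        row[i], row[i + 1] = -1.0, 1.0
        rows.append(row); rhs.append(step); weights.append(1.0 / sigma_motor)

    # Measurement factors from angles estimated between pairs of projections.
    for i, j, angle in image_angles:
        row = np.zeros(n)
        row[i], row[j] = -1.0, 1.0
        rows.append(row); rhs.append(angle); weights.append(1.0 / sigma_image)

    # Whiten each residual by 1/sigma and solve min ||W (A theta - b)||^2.
    w = np.asarray(weights)
    A = np.asarray(rows) * w[:, None]
    b = np.asarray(rhs) * w
    theta, *_ = np.linalg.lstsq(A, b, rcond=None)
    return theta

# Hypothetical example: the motor reports 1 degree per step, but the image-based
# estimates suggest the second step was actually 1.1 degrees.
theta = refine_angles(
    4,
    motor_steps=[1.0, 1.0, 1.0],
    image_angles=[(0, 1, 1.0), (1, 2, 1.1), (2, 3, 0.9), (0, 3, 3.0)],
)
print(np.round(theta, 3))
```

Because the image factors are given a smaller sigma than the motor factors in this example, the refined theta_2 lands near 2.096 degrees, close to the image-based 2.1 rather than the commanded 2.0. The linear formulation works here only because every factor is a plain angle difference; a fuller system would typically use a nonlinear factor graph solver and robust noise models.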

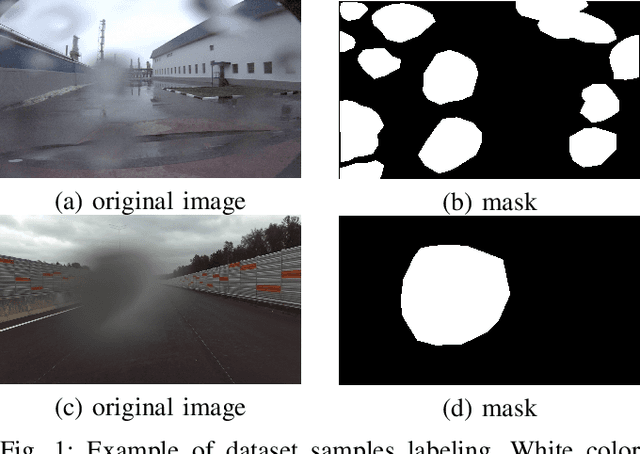

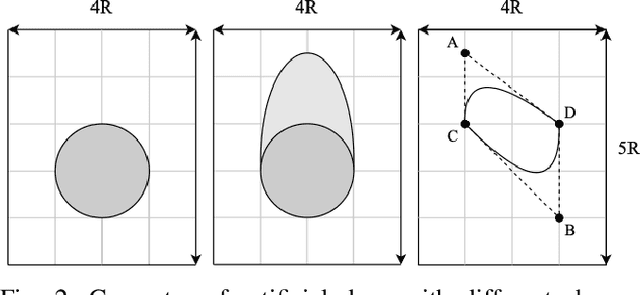

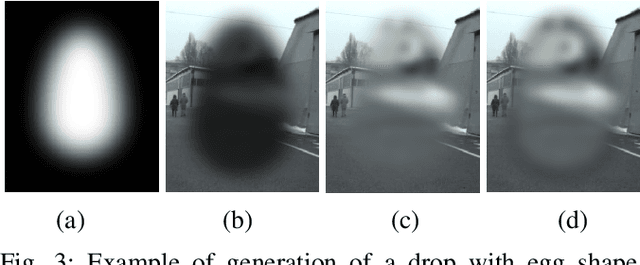

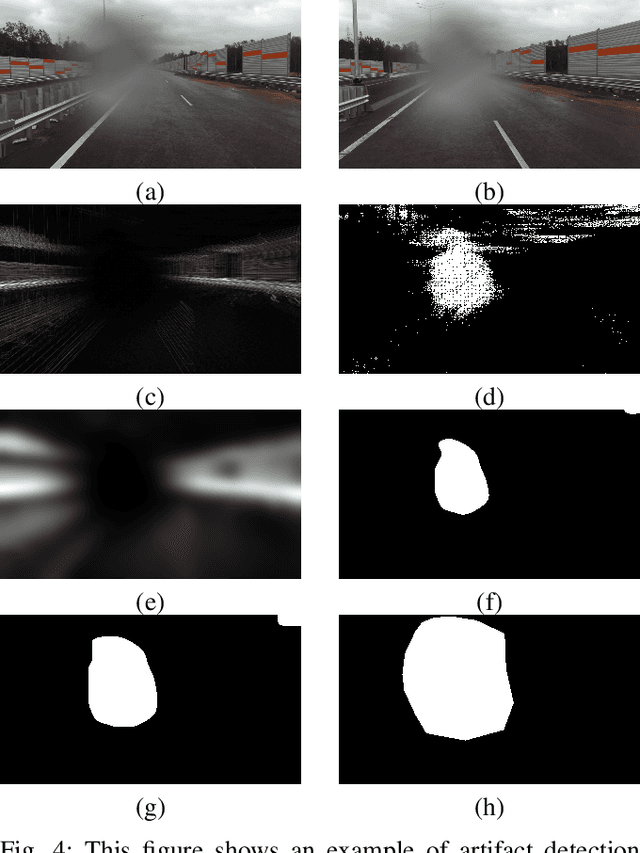

Raindrops on Windshield: Dataset and Lightweight Gradient-Based Detection Algorithm

Apr 11, 2021

Abstract: Autonomous vehicles use cameras as one of the primary sources of information about the environment. Adverse weather and road conditions (raindrops, snow, mud, and others) can lead to various image artifacts. Such artifacts significantly degrade the quality and reliability of the obtained visual data and can lead to accidents if they are not detected in time. This paper presents ongoing work on a new dataset for training and assessing the performance of vision algorithms on different tasks of image artifact detection on either the camera lens or the windshield. At the moment, the publicly available set contains 8190 images, of which 3390 contain raindrops. Images are annotated with a binary mask representing the areas with raindrops. We demonstrate the applicability of the dataset to the problems of raindrop presence detection and raindrop region segmentation. We also propose a data augmentation algorithm that generates synthetic raindrops on images. Apart from the dataset, we present a novel gradient-based algorithm for raindrop presence detection in a video sequence. The experimental evaluation shows that the algorithm reliably detects raindrops. Moreover, compared with the state-of-the-art cross-correlation-based algorithm [Einecke2014], the proposed algorithm showed higher raindrop presence detection quality and image processing speed, making it applicable to the self-check procedure of real autonomous systems. The dataset is available at https://github.com/EvoCargo/RaindropsOnWindshield.
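The abstract does not describe how the gradient-based detector works, so the following is only an illustrative sketch of the general idea, not the paper's algorithm. It assumes that a drop blurs the region behind it, so image blocks covered by a drop keep a low mean gradient magnitude in every frame of a sequence, while blocks showing the moving scene do not. The block size, thresholds, and function names are hypothetical.

```python
import numpy as np

def block_gradient_energy(frame, block=16):
    """Mean gradient magnitude of a grayscale frame over non-overlapping blocks."""
    gy, gx = np.gradient(frame.astype(np.float64))  # derivatives along rows, cols
    mag = np.hypot(gx, gy)
    h = (mag.shape[0] // block) * block  # crop to a whole number of blocks
    w = (mag.shape[1] // block) * block
    tiles = mag[:h, :w].reshape(h // block, block, w // block, block)
    return tiles.mean(axis=(1, 3))

def raindrop_presence(frames, block=16, grad_thresh=2.0, min_fraction=0.05):
    """Hypothetical check: report rain if enough blocks stay blurry in every frame.

    Assumption: a drop blurs what is behind it, so its blocks keep a low gradient
    energy throughout the sequence while the moving scene elsewhere does not.
    """
    energy = np.stack([block_gradient_energy(f, block) for f in frames])
    persistent_blur = (energy < grad_thresh).all(axis=0)  # blurry in all frames
    return bool(persistent_blur.mean() >= min_fraction), persistent_blur

# Synthetic example: textured noise frames, with a flat patch standing in for a drop.
rng = np.random.default_rng(0)
clean = [rng.uniform(0, 255, (128, 128)) for _ in range(5)]
rainy = [f.copy() for f in clean]
for f in rainy:
    f[:64, :64] = 100.0
rain_present, rain_mask = raindrop_presence(rainy)
clean_present, _ = raindrop_presence(clean)
print(rain_present, clean_present)
```

Requiring the blur to persist over all frames is what separates a drop on the glass from a momentarily flat scene region. A region that is flat in every frame (for example, clear sky) would still be flagged, which is one reason a practical detector needs more cues than this sketch.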

Safe Speed Control and Collision Probability Estimation Under Ego-Pose Uncertainty for Autonomous Vehicle

Mar 02, 2020

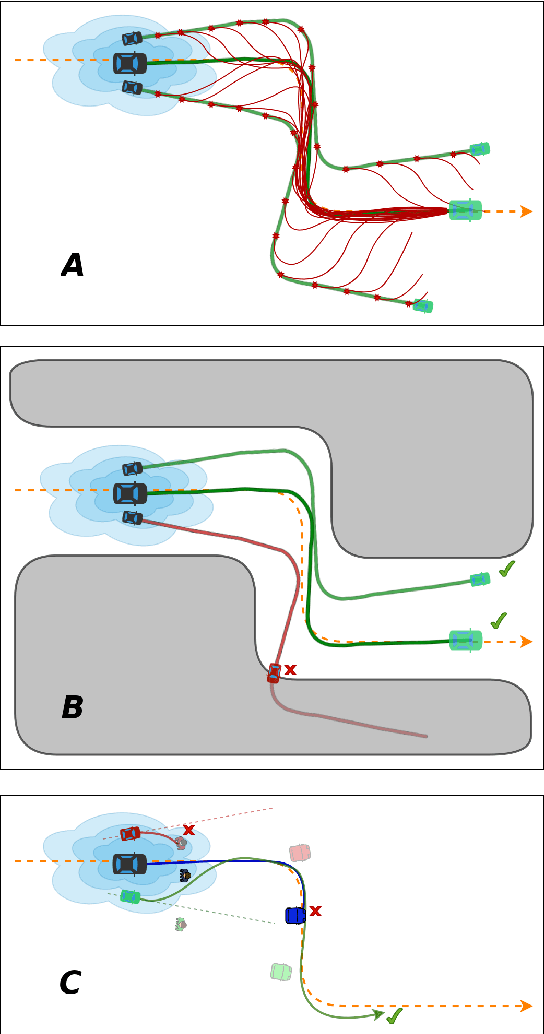

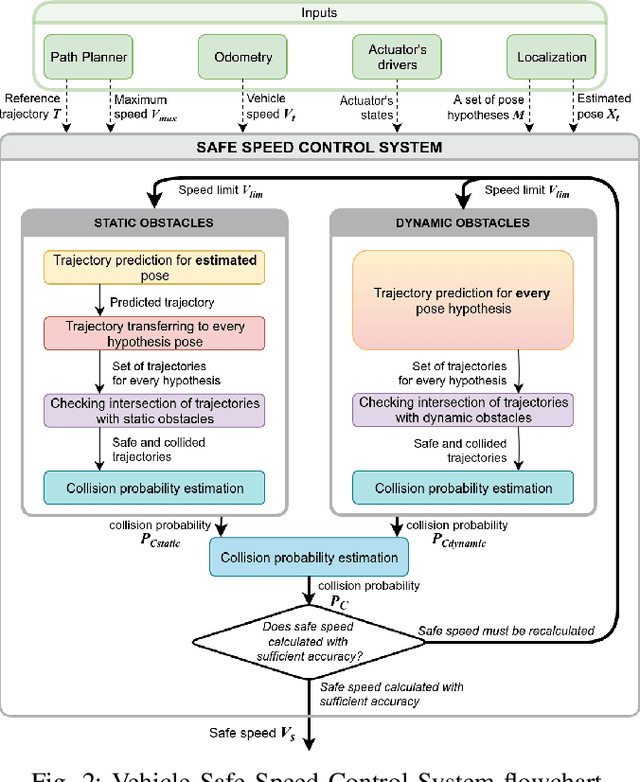

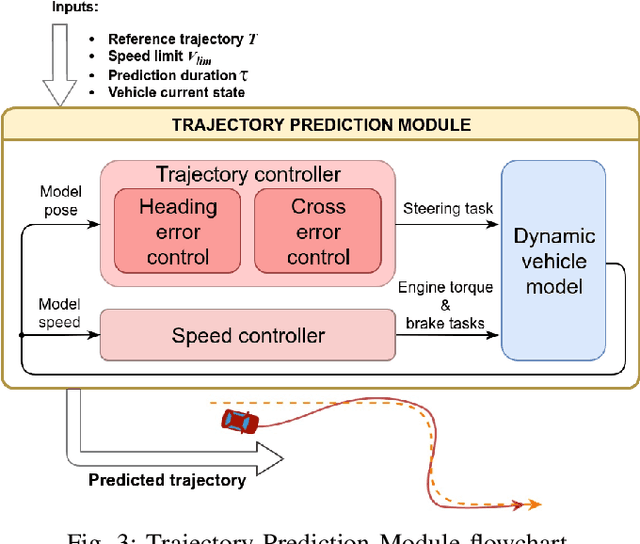

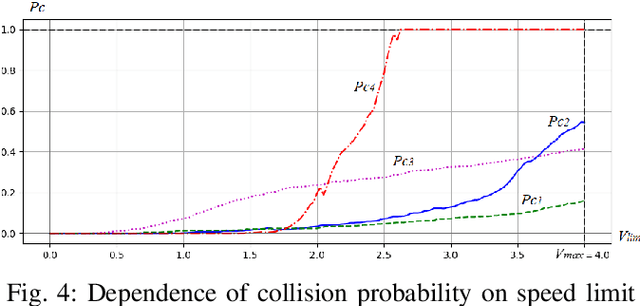

Abstract: In order for autonomous vehicles to become a part of the Intelligent Transportation Ecosystem, they are required to guarantee a particular level of safety. This requires safe vehicle control algorithms that assess the probability of a collision while driving along a given trajectory and select control signals that minimize this probability. In this paper, we propose a speed control system that estimates the collision probability, taking into account static and dynamic obstacles as well as ego-pose uncertainty, and chooses the maximum safe speed. To do so, the control system converts the planned trajectory into control signals that form the input of a dynamic vehicle model. The model predicts the real vehicle path. A predicted trajectory is generated for each particle, i.e., a localization-system hypothesis about the vehicle pose, weighted by its probability. Based on the particles' predicted trajectories, the probability of collision is calculated, and a decision is made on the maximum safe speed. The proposed algorithm was validated on a real autonomous vehicle. The experimental results demonstrate that the proposed speed control system reduces the vehicle speed to a safe value when performing maneuvers and driving through narrow openings. In this way, the system mimics the behavior of a human driver in difficult and ambiguous traffic situations.
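The particle-based collision probability can be sketched as follows. This is a simplified illustration, not the paper's system: instead of a dynamic vehicle model driven by control signals, it rolls out a kinematic model along a constant-curvature path over the braking distance at each candidate speed, treats obstacles as static circles (dynamic obstacles are left out), and scores each speed by the weighted fraction of pose particles whose path hits an obstacle. The reaction time, deceleration, probability threshold `p_max`, and all function names are assumptions.

```python
import math
import numpy as np

def stopping_distance(speed, t_react=0.5, a_max=3.0):
    """Distance covered during reaction time plus braking at a_max (assumed values)."""
    return speed * t_react + speed * speed / (2.0 * a_max)

def rollout(pose, curvature, distance, ds=0.1):
    """Points along a constant-curvature path (simple kinematic model)."""
    x, y, yaw = pose
    pts = [(x, y)]
    for _ in range(int(math.ceil(distance / ds))):
        x += math.cos(yaw) * ds
        y += math.sin(yaw) * ds
        yaw += curvature * ds
        pts.append((x, y))
    return np.array(pts)

def collision_probability(particles, weights, curvature, speed, obstacles, radius):
    """Weighted share of pose particles whose braking path comes within `radius`
    of any static circular obstacle centre."""
    dist = stopping_distance(speed)
    hit = np.zeros(len(particles), dtype=bool)
    for k, pose in enumerate(particles):
        path = rollout(pose, curvature, dist)
        gaps = np.linalg.norm(path[:, None, :] - obstacles[None, :, :], axis=2)
        hit[k] = bool((gaps < radius).any())
    w = np.asarray(weights, dtype=float)
    return float(w[hit].sum() / w.sum())

def max_safe_speed(particles, weights, curvature, obstacles, radius,
                   candidates, p_max=0.05):
    """Largest candidate speed whose collision probability does not exceed p_max."""
    safe = [v for v in candidates
            if collision_probability(particles, weights, curvature, v,
                                     obstacles, radius) <= p_max]
    return max(safe) if safe else 0.0

# Hypothetical scenario: straight path, obstacle 8 m ahead and 1 m to the left.
rng = np.random.default_rng(1)
n = 200
particles = np.column_stack([
    np.zeros(n),                 # x (m)
    rng.normal(0.0, 0.3, n),     # y (m): lateral localization uncertainty
    rng.normal(0.0, 0.02, n),    # yaw (rad): heading uncertainty
])
weights = np.full(n, 1.0 / n)
obstacles = np.array([[8.0, 1.0]])
v_safe = max_safe_speed(particles, weights, 0.0, obstacles, 0.9,
                        candidates=[2.0, 4.0, 5.0, 6.0, 8.0])
print(v_safe)
```

Because a higher speed means a longer braking path, the collision probability cannot decrease with speed in this sketch, so the largest candidate under `p_max` is the maximum safe speed. In the example the answer is 5.0 m/s: at 6 m/s the braking path reaches the offset obstacle for a sizable share of the laterally uncertain particles.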

Linear Features Observation Model for Autonomous Vehicle Localization

Feb 28, 2020

Abstract: Precise localization is a core ability of an autonomous vehicle and a prerequisite for motion planning and execution. Well-established localization approaches such as Kalman and particle filters require a probabilistic observation model that computes the likelihood of a measurement given the system state vector (usually the vehicle pose) and a map. Higher localization precision may be achieved by developing a more sophisticated observation model that considers various sources of measurement error. At the same time, the model needs to be simple enough to be computed in real time. This paper proposes an observation model for visually detected linear features. Examples of such features include, but are not limited to, road markings and road boundaries. The proposed observation model accounts for two core sources of detection error: shift error and angular error. It also considers the probability of false-positive detection. The structure of the proposed model allows precomputing the measurement error and incorporating it directly into the map, which is represented as a multichannel digital image. Precomputing the measurement error and storing the map as an image speed up the observation likelihood computation and, in turn, the localization system. The experimental evaluation on a real autonomous vehicle demonstrates that the proposed model allows for precise and reliable localization in a variety of scenarios.
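The abstract does not state the model's equations, so the following is only one plausible reading of it under stated assumptions. The map is precomputed as a two-channel image: channel 0 holds a Gaussian shift-error term based on the distance to the nearest mapped line, and channel 1 holds that line's orientation. At run time, each detected point (transformed into the map frame by a candidate pose) is scored with a Gaussian angular-error term and mixed with a constant false-positive probability. The channel layout, sigma values, `p_false`, and function names are hypothetical.

```python
import numpy as np

def point_segment_distance(px, py, a, b):
    """Distance from points (px, py) to the non-degenerate segment a-b (vectorised)."""
    (ax, ay), (bx, by) = a, b
    dx, dy = bx - ax, by - ay
    t = np.clip(((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy), 0.0, 1.0)
    return np.hypot(px - (ax + t * dx), py - (ay + t * dy))

def build_map_image(segments, shape, res, sigma_shift):
    """Precompute channel 0: shift-error likelihood, channel 1: nearest line angle."""
    rows, cols = np.indices(shape)
    px, py = (cols + 0.5) * res, (rows + 0.5) * res  # cell centres in metres
    nearest = np.full(shape, np.inf)
    angle = np.zeros(shape)
    for a, b in segments:
        d = point_segment_distance(px, py, a, b)
        closer = d < nearest
        nearest[closer] = d[closer]
        angle[closer] = np.arctan2(b[1] - a[1], b[0] - a[0])
    shift_term = np.exp(-0.5 * (nearest / sigma_shift) ** 2)
    return np.stack([shift_term, angle])

def to_map_frame(points, angles, pose):
    """Transform detected line points/angles from the vehicle frame to the map frame."""
    x, y, yaw = pose
    c, s = np.cos(yaw), np.sin(yaw)
    pts = np.column_stack([x + c * points[:, 0] - s * points[:, 1],
                           y + s * points[:, 0] + c * points[:, 1]])
    return pts, angles + yaw

def observation_log_likelihood(map_img, res, points, angles,
                               sigma_angle=np.deg2rad(5.0), p_false=0.1):
    """Sum of per-point log-likelihoods with a false-positive floor p_false."""
    cols = np.clip((points[:, 0] / res).astype(int), 0, map_img.shape[2] - 1)
    rows = np.clip((points[:, 1] / res).astype(int), 0, map_img.shape[1] - 1)
    shift_term = map_img[0, rows, cols]  # precomputed, so just a lookup
    # Lines are undirected, so wrap the angular difference into [-pi/2, pi/2).
    diff = (angles - map_img[1, rows, cols] + np.pi / 2) % np.pi - np.pi / 2
    angle_term = np.exp(-0.5 * (diff / sigma_angle) ** 2)
    per_point = (1.0 - p_false) * shift_term * angle_term + p_false
    return float(np.log(per_point).sum())

# Hypothetical example: one lane marking along y = 10 m in a 20 m x 20 m map.
res = 0.1
map_img = build_map_image([((0.0, 10.0), (20.0, 10.0))], (200, 200), res, 0.2)
detected = np.array([[2.0, 1.0], [4.0, 1.0], [6.0, 1.0]])  # vehicle frame (m)
det_angles = np.zeros(3)

def score(pose):
    pts, ang = to_map_frame(detected, det_angles, pose)
    return observation_log_likelihood(map_img, res, pts, ang)

ll_true = score((5.0, 9.0, 0.0))     # places the detections on the marking
ll_shifted = score((5.0, 9.5, 0.0))  # 0.5 m off
print(ll_true, ll_shifted)
```

Scoring candidate poses this way is what a particle filter would do for each particle: the pose that places the detections on the mapped line gets the higher log-likelihood. Summing logs rather than multiplying probabilities avoids numerical underflow when many points are scored.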

X-ray and Visible Spectra Circular Motion Images Dataset

Oct 01, 2019

Abstract: We present two collections of images of the same rotating plastic object captured in the X-ray and visible spectra. Each part of the dataset contains 400 images, taken every 0.5 degrees of the object's axial rotation. The collection is designed for evaluating the performance of circular motion estimation algorithms, as well as for studying the influence of the X-ray nature of images on image analysis algorithms such as keypoint detection and description. The dataset is available at https://github.com/Visillect/xvcm-dataset.
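Because the dataset description fixes the angular step, ground-truth relative rotations for evaluating a circular motion estimator follow directly from image indices. The sketch assumes images are indexed in acquisition order starting from 0 degrees; that indexing and the function names are hypothetical and not taken from the repository.

```python
import numpy as np

STEP_DEG = 0.5   # angular step stated in the dataset description
N_IMAGES = 400   # images per spectrum

def ground_truth_angle(i, j, step_deg=STEP_DEG):
    """Nominal rotation (degrees) from image i to image j, assuming index order."""
    return (j - i) * step_deg

def angular_errors(estimates):
    """Absolute errors of estimated relative angles given as (i, j, degrees) tuples."""
    return np.array([abs(est - ground_truth_angle(i, j)) for i, j, est in estimates])

# Nominal absolute angles under the 0-degree-start assumption: 0.0, 0.5, ..., 199.5.
angles = np.arange(N_IMAGES) * STEP_DEG
print(angles[-1], ground_truth_angle(10, 30))
```

With this convention an estimator's error on any image pair is a single subtraction, and averaging `angular_errors` over many pairs gives a simple accuracy score.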
