Olakunle Ibitoye

DiPSeN: Differentially Private Self-normalizing Neural Networks For Adversarial Robustness in Federated Learning

Jan 08, 2021

Abstract: Robust, secure and private machine learning is an important goal for realizing the full potential of the Internet of Things (IoT). Federated learning has proven to help protect against privacy violations and information leakage. However, it introduces new risk vectors which make machine learning models more difficult to defend against adversarial samples. In this study, we examine the role of differential privacy and self-normalization in mitigating the risk of adversarial samples specifically in a federated learning environment. We introduce DiPSeN, a Differentially Private Self-normalizing Neural Network which combines elements of differential privacy noise with self-normalizing techniques. Our empirical results on three publicly available datasets show that DiPSeN successfully improves the adversarial robustness of a deep learning classifier in a federated learning environment based on several evaluation metrics.

A GAN-based Approach for Mitigating Inference Attacks in Smart Home Environment

Nov 13, 2020

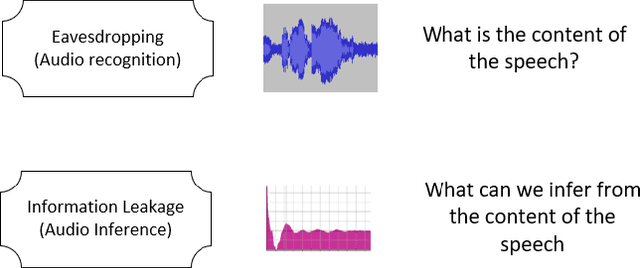

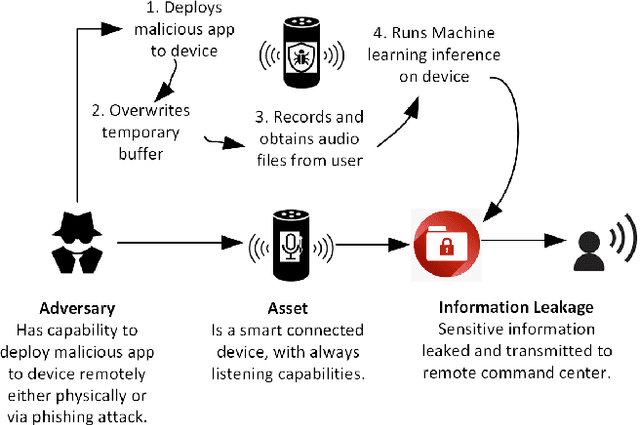

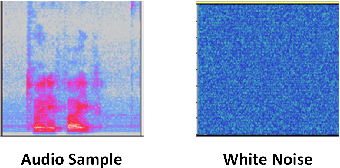

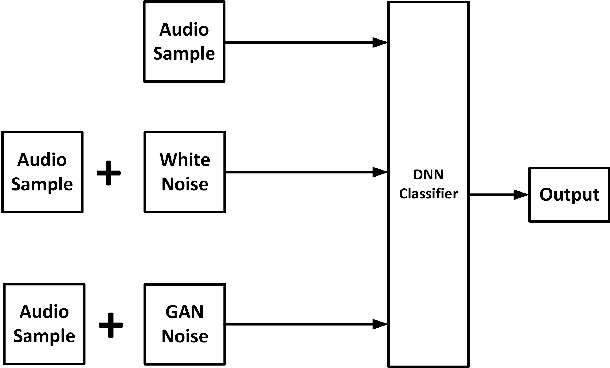

Abstract: The proliferation of smart, connected, always-listening devices has introduced significant privacy risks to users in a smart home environment. Beyond the notable risk of eavesdropping, intruders can adopt machine learning techniques to infer sensitive information from audio recordings on these devices, resulting in a new dimension of privacy concerns and attack variables for smart home users. Techniques such as sound masking and microphone jamming have been effectively used to prevent eavesdroppers from listening in on private conversations. In this study, we explore the problem of adversaries spying on smart home users to infer sensitive information with the aid of machine learning techniques. We then analyze the role of randomness in the effectiveness of sound masking for mitigating sensitive information leakage. We propose a Generative Adversarial Network (GAN) based approach for privacy preservation in smart homes which generates random noise to distort the unwanted machine learning-based inference. Our experimental results demonstrate that GANs can be used to generate more effective sound masking noise signals which exhibit more randomness and effectively mitigate deep learning-based inference attacks while preserving the semantics of the audio samples.

The Threat of Adversarial Attacks on Machine Learning in Network Security -- A Survey

Nov 06, 2019

Abstract: Machine learning models have made many decision support systems faster, more accurate and more efficient. However, applications of machine learning in network security face a disproportionately greater threat of active adversarial attacks compared to other domains. This is because machine learning applications in network security such as malware detection, intrusion detection, and spam filtering are by themselves adversarial in nature. In what could be considered an arms race between attackers and defenders, adversaries constantly probe machine learning systems with inputs which are explicitly designed to bypass the system and induce a wrong prediction. In this survey, we first provide a taxonomy of machine learning techniques, styles, and algorithms. We then introduce a classification of machine learning in network security applications. Next, we examine various adversarial attacks against machine learning in network security and introduce two classification approaches for adversarial attacks in network security. First, we classify adversarial attacks in network security based on a taxonomy of network security applications. Second, we categorize adversarial attacks in network security into a problem space vs. feature space dimensional classification model. We then analyze the various defenses against adversarial attacks on machine learning-based network security applications. We conclude by introducing an adversarial risk model and evaluating several existing adversarial attacks against machine learning in network security using the risk model. We also identify where each attack classification resides within the adversarial risk model.

Analyzing Adversarial Attacks Against Deep Learning for Intrusion Detection in IoT Networks

May 13, 2019

Abstract: Adversarial attacks have been widely studied in the field of computer vision but their impact on network security applications remains an area of open research. As IoT, 5G and AI continue to converge to realize the promise of the fourth industrial revolution (Industry 4.0), security incidents and events on IoT networks have increased. Deep learning techniques are being applied to detect and mitigate many such security threats against IoT networks. Feedforward Neural Networks (FNN) have been widely used for classifying intrusion attacks in IoT networks. In this paper, we consider a variant of the FNN known as the Self-normalizing Neural Network (SNN) and compare its performance with the FNN for classifying intrusion attacks in an IoT network. Our analysis is performed using the BoT-IoT dataset from the Cyber Range Lab of the center of UNSW Canberra Cyber. In our experimental results, the FNN outperforms the SNN for intrusion detection in IoT networks based on multiple performance metrics such as accuracy, precision, and recall as well as multi-classification metrics such as Cohen's Kappa score. However, when tested for adversarial robustness, the SNN demonstrates better resilience against the adversarial samples from the IoT dataset, presenting a promising future in the quest for safer and more secure deep learning in IoT networks.
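
For readers unfamiliar with the SNN variant named in this abstract, the sketch below contrasts the two activation functions usually associated with each network type: ReLU for a conventional FNN layer and SELU (scaled exponential linear unit) for a self-normalizing layer. The abstract does not describe the paper's architecture, so this is only an illustration under that assumption. The helper names `selu`, `relu`, and `dense_layer` are hypothetical, and the constants are the standard published SELU values.

```python
import math

# Standard SELU constants; assumed here, not taken from the abstract.
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def selu(x: float) -> float:
    """Scaled exponential linear unit for a single value."""
    if x > 0:
        return SELU_SCALE * x
    # Negative inputs saturate towards -SELU_SCALE * SELU_ALPHA.
    return SELU_SCALE * SELU_ALPHA * (math.exp(x) - 1.0)


def relu(x: float) -> float:
    """Rectified linear unit, a common FNN activation."""
    return max(0.0, x)


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: activation(W x + b), in plain Python.

    `weights` is a list of rows, one row per output unit.
    """
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


if __name__ == "__main__":
    x = [0.5, -1.2, 2.0]
    w = [[0.1, -0.3, 0.2], [0.4, 0.0, -0.5]]
    b = [0.0, 0.1]
    print("FNN-style layer:", dense_layer(x, w, b, relu))
    print("SNN-style layer:", dense_layer(x, w, b, selu))
```

Unlike ReLU, SELU keeps a bounded negative output, which lets activations keep a mean near zero across layers. Self-normalizing networks typically also rely on a matching weight initialization and a dropout variant, both omitted from this sketch.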
