Odelia Schwartz

Inference via Sparse Coding in a Hierarchical Vision Model

Aug 03, 2021

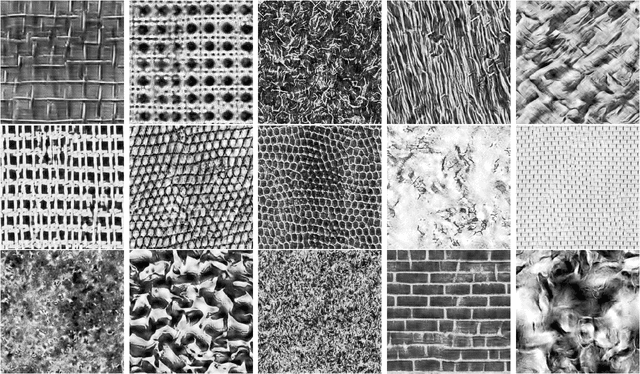

Abstract: Sparse coding has been incorporated in models of the visual cortex for its computational advantages and connection to biology. But how the level of sparsity contributes to performance on visual tasks is not well understood. In this work, sparse coding has been integrated into an existing hierarchical V2 model (Hosoya and Hyvärinen, 2015), replacing the Independent Component Analysis (ICA) with an explicit sparse coding in which the degree of sparsity can be controlled. After training, the sparse coding basis functions with a higher degree of sparsity resembled qualitatively different structures, such as curves and corners. The contributions of the models were assessed with image classification tasks, including object classification, and tasks associated with mid-level vision including figure-ground classification, texture classification, and angle prediction between two line stimuli. In addition, the models were assessed in comparison to a texture sensitivity measure that has been reported in V2 (Freeman et al., 2013), and a deleted-region inference task. The results from the experiments show that while sparse coding performed worse than ICA at classifying images, only sparse coding was able to better match the texture sensitivity level of V2 and infer deleted image regions, both by increasing the degree of sparsity in sparse coding. Higher degrees of sparsity allowed for inference over larger deleted image regions. The mechanism that allows for this inference capability in sparse coding is described here.
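As a rough illustration of what "an explicit sparse coding in which the degree of sparsity can be controlled" can look like, the sketch below infers coefficients for a fixed dictionary by minimizing a squared reconstruction error plus an L1 penalty, using the iterative shrinkage-thresholding algorithm (ISTA). This is not the authors' implementation: the function names, the penalty weight `lam`, and the choice of ISTA are assumptions made for illustration only.

```python
def soft_threshold(value, threshold):
    """Shrink a scalar toward zero by `threshold` (proximal step of the L1 penalty)."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def ista_sparse_code(x, dictionary, lam, n_iter=200):
    """Approximately minimize 0.5 * ||x - D a||^2 + lam * ||a||_1 over a.

    x          : list of n input values (e.g. a flattened image patch)
    dictionary : n rows by k columns (list of lists); each column is a basis function
    lam        : hypothetical sparsity weight; larger values zero out more coefficients
    """
    n, k = len(dictionary), len(dictionary[0])
    # The squared Frobenius norm upper-bounds the largest eigenvalue of D^T D,
    # so 1 / lipschitz is a safe (if conservative) gradient step size.
    lipschitz = sum(d * d for row in dictionary for d in row)
    if lipschitz == 0.0:
        raise ValueError("dictionary must contain at least one nonzero entry")
    a = [0.0] * k
    for _ in range(n_iter):
        # Residual of the current reconstruction: D a - x
        residual = [
            sum(dictionary[i][j] * a[j] for j in range(k)) - x[i] for i in range(n)
        ]
        # Gradient of the squared-error term: D^T (D a - x)
        grad = [sum(dictionary[i][j] * residual[i] for i in range(n)) for j in range(k)]
        # Gradient step followed by soft-thresholding
        a = [soft_threshold(a[j] - grad[j] / lipschitz, lam / lipschitz) for j in range(k)]
    return a
```

With an identity dictionary the minimizer reduces to soft-thresholding the input itself, which makes the effect of `lam` easy to see: raising it drives small coefficients exactly to zero while shrinking the large ones.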

Integrating Flexible Normalization into Mid-Level Representations of Deep Convolutional Neural Networks

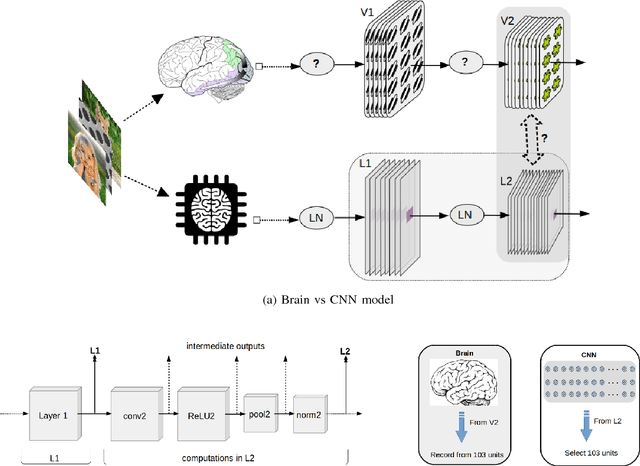

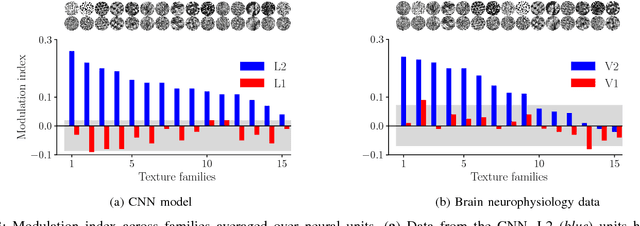

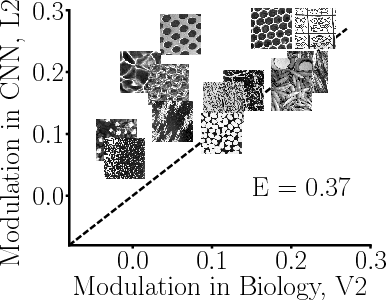

Aug 27, 2018

Abstract: Deep convolutional neural networks (CNNs) are becoming increasingly popular models to predict neural responses in visual cortex. However, contextual effects, which are prevalent in neural processing and in perception, are not explicitly handled by current CNNs, including those used for neural prediction. In primary visual cortex, neural responses are modulated by stimuli spatially surrounding the classical receptive field in rich ways. These effects have been modeled with divisive normalization approaches, including flexible models where spatial normalization is recruited only to the degree that responses from center and surround locations are deemed statistically dependent. We propose a flexible normalization model applied to mid-level representations of deep CNNs as a tractable way to study contextual normalization mechanisms in mid-level visual areas. This approach captures non-trivial spatial dependencies among mid-level features in CNNs, such as those present in textures and other visual stimuli that arise from geometrically tiling high-order features. We expect that the proposed approach can make predictions about when spatial normalization might be recruited in mid-level cortical areas. We also expect this approach to be useful as part of the CNN toolkit, going beyond more restrictive fixed forms of normalization.
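The idea of recruiting surround normalization "only to the degree" that center and surround are dependent can be sketched with a single gating weight. The example below is a deliberately simplified stand-in, not the model proposed in the paper: the function name, the additive constant `sigma`, and the scalar gate `p_dependent` are all assumptions introduced here for illustration.

```python
import math


def flexible_divisive_normalization(center, surround, sigma=1.0, p_dependent=1.0):
    """Divide a center response by the square root of a pooled energy term.

    center      : scalar response at the center location
    surround    : iterable of responses at surrounding locations
    sigma       : additive constant that keeps the denominator positive
    p_dependent : hypothetical weight in [0, 1]; 0 ignores the surround,
                  1 applies full surround normalization
    """
    if not 0.0 <= p_dependent <= 1.0:
        raise ValueError("p_dependent must lie in [0, 1]")
    surround_energy = sum(s * s for s in surround)
    # The surround contributes to the pool only in proportion to p_dependent.
    pool = sigma ** 2 + center ** 2 + p_dependent * surround_energy
    if pool == 0.0:
        return 0.0
    return center / math.sqrt(pool)
```

A fixed normalization scheme corresponds to holding `p_dependent` constant for every input; in a flexible scheme that weight would instead be inferred per stimulus from how statistically dependent the center and surround responses appear to be.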

Correspondence of Deep Neural Networks and the Brain for Visual Textures

Jun 07, 2018

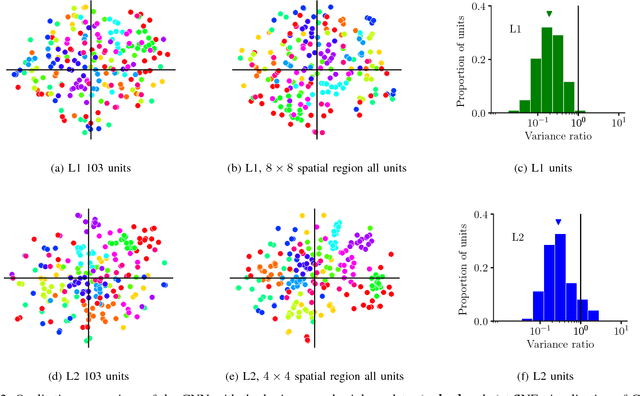

Abstract: Deep convolutional neural networks (CNNs) trained on objects and scenes have shown intriguing ability to predict some response properties of visual cortical neurons. However, the factors and computations that give rise to such ability, and the role of intermediate processing stages in explaining changes that develop across areas of the cortical hierarchy, are poorly understood. We focused on the sensitivity to textures as a paradigmatic example, since recent neurophysiology experiments provide rich data pointing to texture sensitivity in secondary but not primary visual cortex. We developed a quantitative approach for selecting a subset of the neural unit population from the CNN that best describes the brain neural recordings. We found that the first two layers of the CNN showed qualitative and quantitative correspondence to the cortical data across a number of metrics. This compatibility was reduced for the architecture alone rather than the learned weights, for some other related hierarchical models, and only mildly in the absence of a nonlinear computation akin to local divisive normalization. Our results show that the CNN class of model is effective for capturing changes that develop across early areas of cortex, and has the potential to facilitate understanding of the computations that give rise to hierarchical processing in the brain.
