Nuwan Ferdinand

Distributed Reconfigurable Intelligent Surfaces for Energy Efficient Indoor Terahertz Wireless Communications

Oct 12, 2022

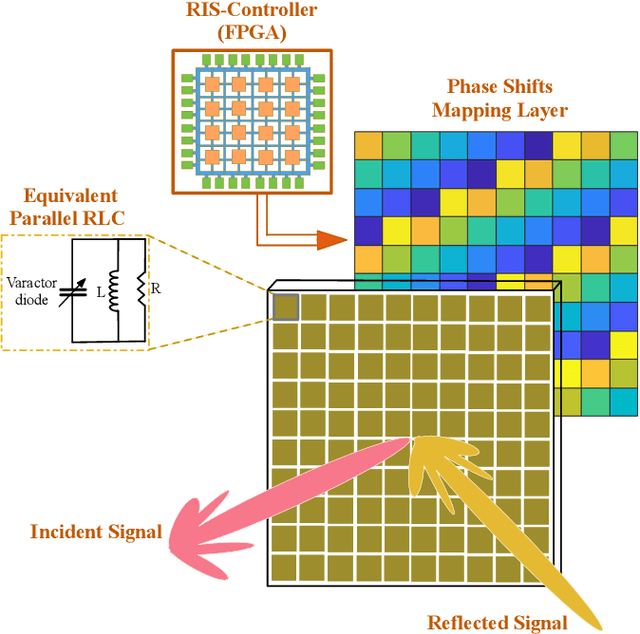

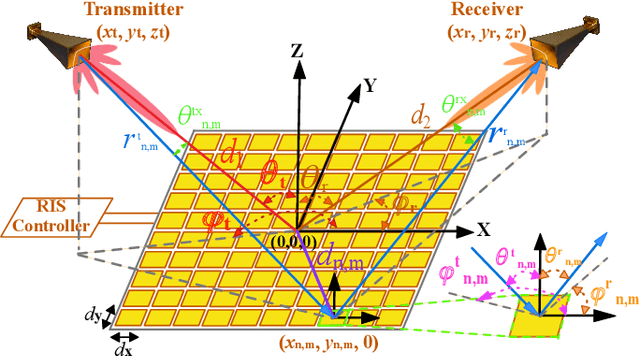

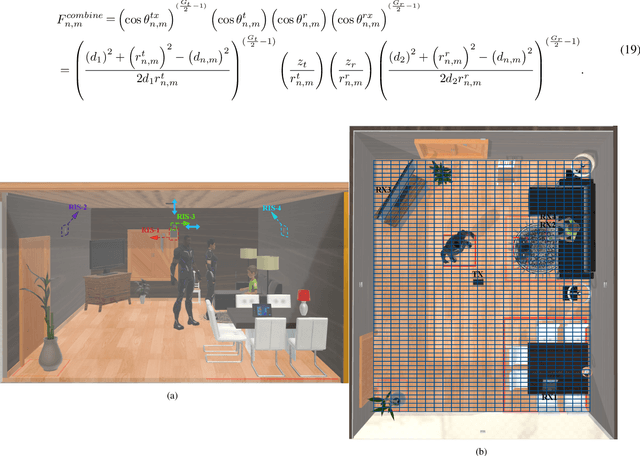

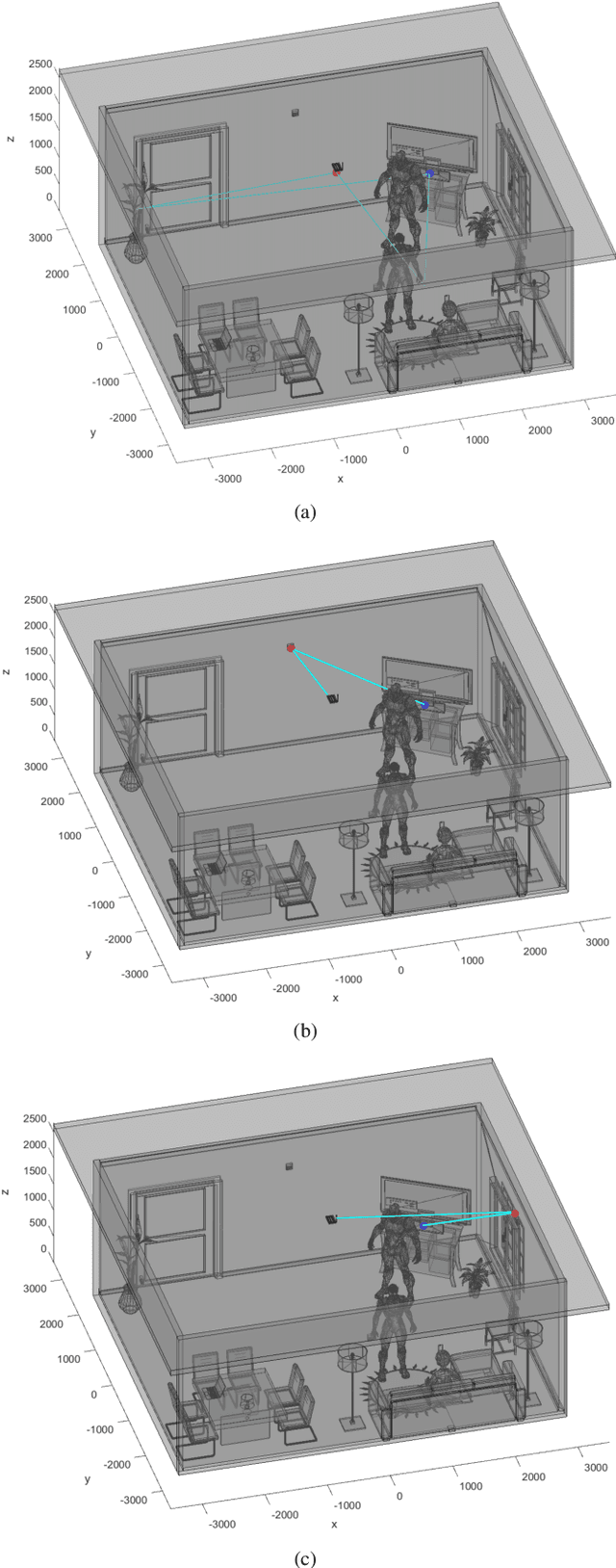

Abstract: With fifth-generation (5G) networks widely commercialized and rapidly deployed, sixth-generation (6G) wireless communication is envisioned to provide competitive quality of service (QoS) in multiple aspects to global users. Foundational 6G research depends, first, on precise modeling and characterization of wireless propagation as the spectrum expands into the terahertz (THz) domain. Moreover, the power consumption and energy efficiency of future networks are critical factors to consider. In this research, based on a review of the fundamental mechanisms of reconfigurable intelligent surface (RIS) assisted wireless communications, we use the 3D ray-tracing method to analyze a realistic indoor THz propagation environment in the presence of human blockers. Furthermore, we propose a distributed RISs framework (DRF) that assists indoor THz wireless communication in achieving overall energy efficiency. Numerical analysis of simulation results from more than 2,900 indoor THz wireless communication sub-scenarios demonstrates the significant efficacy of applying distributed RISs to overcome the mobile human blockage issue, improve THz signal coverage, and increase signal-to-noise ratios (SNRs) and QoS. Having investigated practical hardware design constraints, we then envision how existing integrated sensing and communication techniques could be used to deploy and operate such a system in practice. Such a distributed RISs framework can also lay the foundation for efficient THz communications in Internet-of-Things (IoT) networks.

Anytime MiniBatch: Exploiting Stragglers in Online Distributed Optimization

Jun 10, 2020

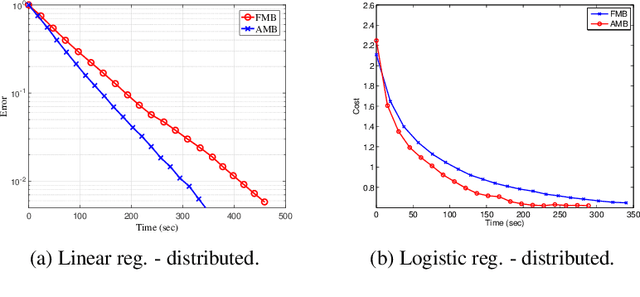

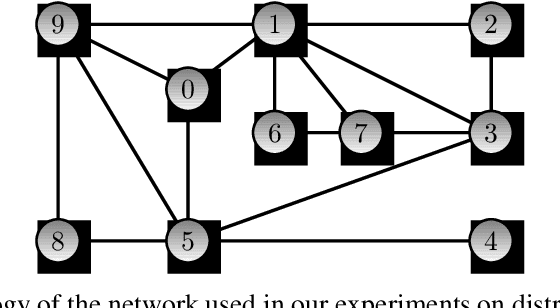

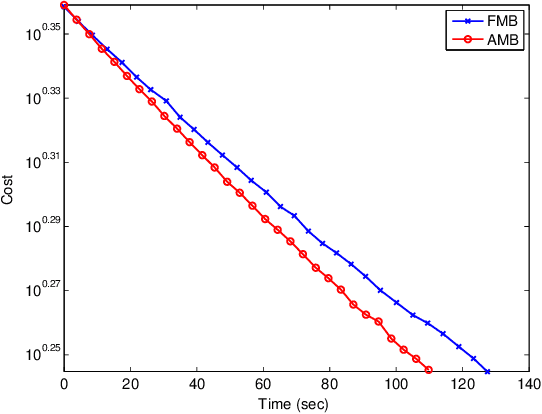

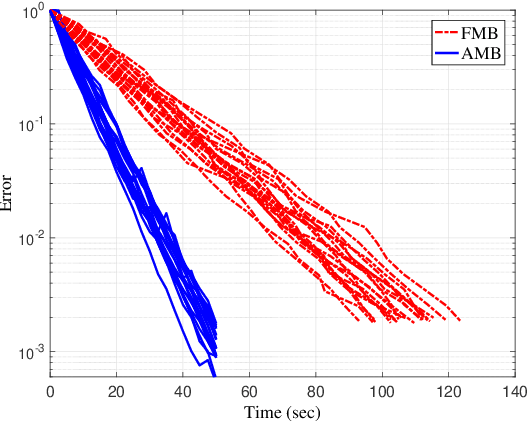

Abstract: Distributed optimization is vital in solving large-scale machine learning problems. A widely shared feature of distributed optimization techniques is the requirement that all nodes complete their assigned tasks in each computational epoch before the system can proceed to the next epoch. In such settings, slow nodes, called stragglers, can greatly slow progress. To mitigate the impact of stragglers, we propose an online distributed optimization method called Anytime Minibatch. In this approach, all nodes are given a fixed time to compute the gradients of as many data samples as possible. The result is a variable per-node minibatch size. Workers then get a fixed communication time to average their minibatch gradients via several rounds of consensus; the averaged gradients are then used to update primal variables via dual averaging. Anytime Minibatch prevents stragglers from holding up the system without wasting the work that stragglers can complete. We present a convergence analysis and analyze the wall time performance. Our numerical results show that our approach is up to 1.5 times faster on Amazon EC2 and up to five times faster when there is greater variability in compute node performance.

* International Conference on Learning Representations (ICLR), May 2019, New Orleans, LA, USA
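
The epoch structure described in the Anytime Minibatch abstract (fixed compute time, variable per-node minibatch, consensus averaging, dual-averaging update) can be illustrated with a small simulation. This is a minimal sketch under stated assumptions, not the authors' implementation: the function name `anytime_minibatch`, the least-squares objective, the ring topology with equal 1/3 mixing weights, the random minibatch size standing in for "samples finished within the time budget", and the schedule `beta = 2 * sqrt(t)` are all hypothetical choices made for illustration.

```python
import math
import random


def anytime_minibatch(w_true=(1.0, -2.0, 0.5), num_workers=4, epochs=400,
                      consensus_rounds=5, max_batch=20, seed=0):
    """Toy simulation of the epoch structure; returns each worker's estimate."""
    rng = random.Random(seed)
    dim, n = len(w_true), num_workers
    w = [[0.0] * dim for _ in range(n)]  # primal variables, one per worker
    z = [[0.0] * dim for _ in range(n)]  # dual variables (running gradient sums)

    for t in range(1, epochs + 1):
        # Compute phase: every worker gets the same time budget. A random
        # minibatch size stands in for how many samples it managed to finish.
        msgs = []
        for i in range(n):
            b = rng.randint(1, max_batch)
            g_sum = [0.0] * dim
            for _ in range(b):
                x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
                y = sum(xk * wk for xk, wk in zip(x, w_true)) + rng.gauss(0.0, 0.1)
                err = sum(xk * wk for xk, wk in zip(x, w[i])) - y
                for k in range(dim):
                    g_sum[k] += err * x[k]  # gradient of 0.5 * err**2
            msgs.append(g_sum + [float(b)])  # send gradient sum and sample count

        # Communication phase: a fixed number of consensus rounds on a ring,
        # each worker averaging with its two neighbours (assumed topology).
        for _ in range(consensus_rounds):
            msgs = [[(msgs[i - 1][k] + msgs[i][k] + msgs[(i + 1) % n][k]) / 3.0
                     for k in range(dim + 1)] for i in range(n)]

        # Update phase: dual averaging with the proximal function 0.5*||w||^2,
        # which gives the closed form w = -z / beta.
        beta = 2.0 * math.sqrt(t)
        for i in range(n):
            count = msgs[i][dim]
            for k in range(dim):
                z[i][k] += msgs[i][k] / count  # approx. network-wide mean gradient
                w[i][k] = -z[i][k] / beta
    return w


if __name__ == "__main__":
    for i, est in enumerate(anytime_minibatch()):
        print(i, [round(v, 3) for v in est])
```

Dividing the averaged gradient sum by the averaged sample count means a slow worker that finished only one or two samples still contributes in proportion to its work, while no worker waits for another to finish a fixed-size batch.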
