Nuno Antunes

Exploring Layerwise Adversarial Robustness Through the Lens of t-SNE

Jun 20, 2024

Abstract: Adversarial examples, designed to trick Artificial Neural Networks (ANNs) into producing wrong outputs, highlight vulnerabilities in these models. Exploring these weaknesses is crucial for developing defenses, and so, we propose a method to assess the adversarial robustness of image-classifying ANNs. The t-distributed Stochastic Neighbor Embedding (t-SNE) technique is used for visual inspection, and a metric, which compares the clean and perturbed embeddings, helps pinpoint weak spots in the layers. Analyzing two ANNs on CIFAR-10, one designed by humans and another via NeuroEvolution, we found that differences between clean and perturbed representations emerge early on, in the feature extraction layers, affecting subsequent classification. The findings with our metric are supported by the visual analysis of the t-SNE maps.

MONDEO: Multistage Botnet Detection

Aug 31, 2023

Abstract: Mobile devices have become the most widely used piece of technology. Due to their characteristics, they have become major targets for botnet-related malware. FluBot is one example of botnet malware that infects mobile devices. In particular, FluBot is a DNS-based botnet that uses Domain Generation Algorithms (DGA) to establish communication with the Command and Control Server (C2). MONDEO is a multistage mechanism with a flexible design to detect DNS-based botnet malware. MONDEO is lightweight and can be deployed without requiring software, agents, or configuration on mobile devices, allowing easy integration in core networks. MONDEO comprises four detection stages: Blacklisting/Whitelisting, Query rate analysis, DGA analysis, and Machine learning evaluation. It was designed to process streams of packets and identify attacks with high efficiency across its distinct phases. MONDEO was tested against several datasets to measure its efficiency and performance, and achieved high performance with RandomForest classifiers. The implementation is available on GitHub.

Adversarial Robustness Assessment of NeuroEvolution Approaches

Jul 12, 2022

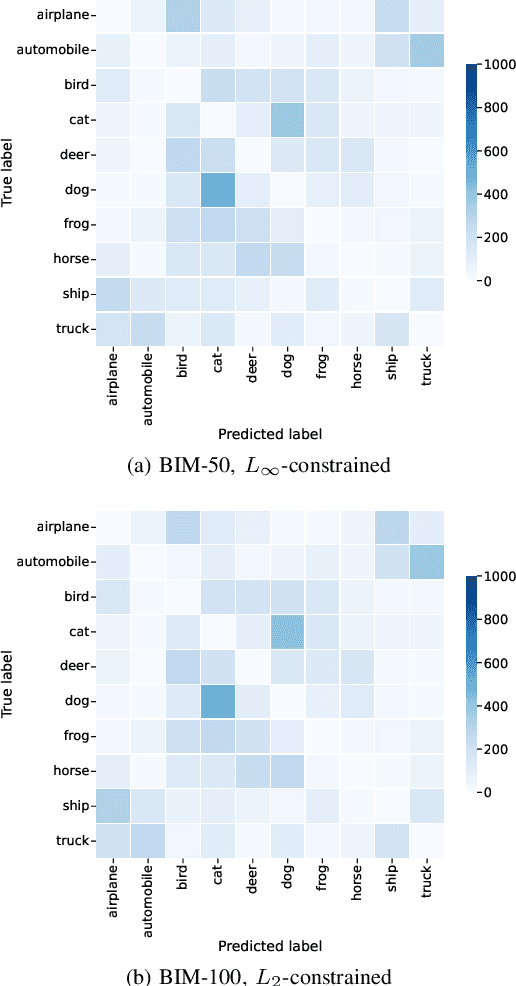

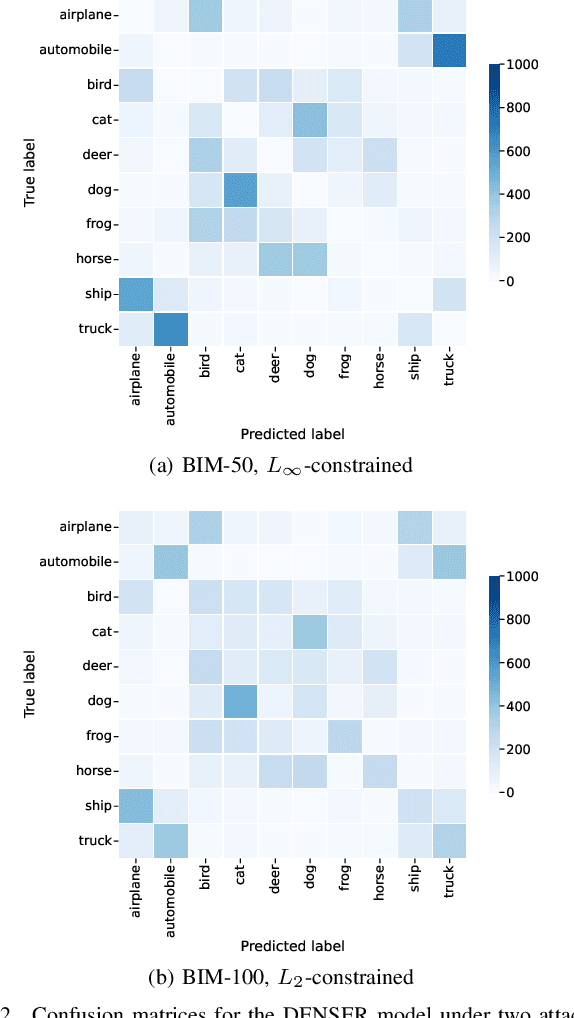

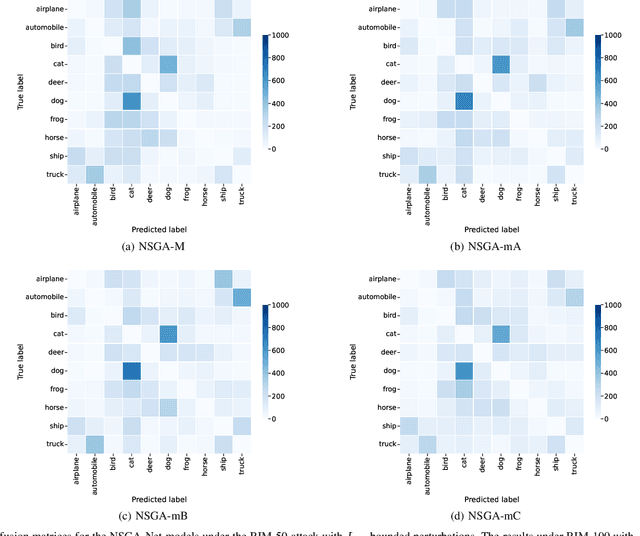

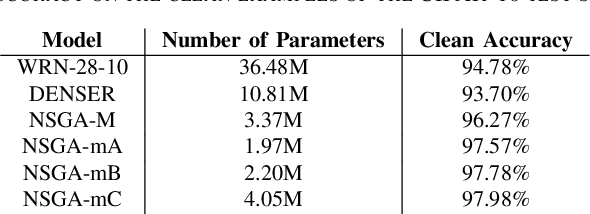

Abstract: NeuroEvolution automates the generation of Artificial Neural Networks through the application of techniques from Evolutionary Computation. The main goal of these approaches is to build models that maximize predictive performance, sometimes with an additional objective of minimizing computational complexity. Although the evolved models achieve competitive results performance-wise, their robustness to adversarial examples, which becomes a concern in security-critical scenarios, has received limited attention. In this paper, we evaluate the adversarial robustness of models found by two prominent NeuroEvolution approaches on the CIFAR-10 image classification task: DENSER and NSGA-Net. Since the models are publicly available, we consider white-box untargeted attacks, where the perturbations are bounded by either the L2 or the L∞ norm. Similarly to manually-designed networks, our results show that when the evolved models are attacked with iterative methods, their accuracy usually drops to, or close to, zero under both distance metrics. The DENSER model is an exception to this trend, showing some resistance under the L2 threat model, where its accuracy only drops from 93.70% to 18.10% even with iterative attacks. Additionally, we analyzed the impact of pre-processing applied to the data before the first layer of the network. Our observations suggest that some of these techniques can exacerbate the perturbations added to the original inputs, potentially harming robustness. Thus, this choice should not be neglected when automatically designing networks for applications where adversarial attacks are prone to occur.
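
To make the notion of a norm-bounded perturbation concrete, here is a minimal sketch of the projection step that iterative attacks commonly apply after each update, so that the perturbation stays within the allowed budget. It is not taken from the paper: the function names `project_linf` and `project_l2`, the `epsilon` budget, and the flat-list representation of a perturbation are all illustrative assumptions.

```python
import math


def project_linf(delta, epsilon):
    """Clip each component so the perturbation's largest absolute value is at most epsilon."""
    return [max(-epsilon, min(epsilon, d)) for d in delta]


def project_l2(delta, epsilon):
    """Rescale the perturbation so its Euclidean norm is at most epsilon."""
    norm = math.sqrt(sum(d * d for d in delta))
    if norm <= epsilon:
        # Already inside the ball (this also covers the all-zero perturbation).
        return list(delta)
    scale = epsilon / norm
    return [d * scale for d in delta]


# Hypothetical perturbations, flattened to plain lists for illustration.
clipped = project_linf([0.5, -0.2, 0.05], epsilon=0.1)
rescaled = project_l2([3.0, 4.0], epsilon=1.0)
```

The two projections behave differently: the max-norm version limits every component independently, while the Euclidean version shrinks the whole vector uniformly and preserves its direction.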

The Impact of Data Preparation on the Fairness of Software Systems

Oct 05, 2019

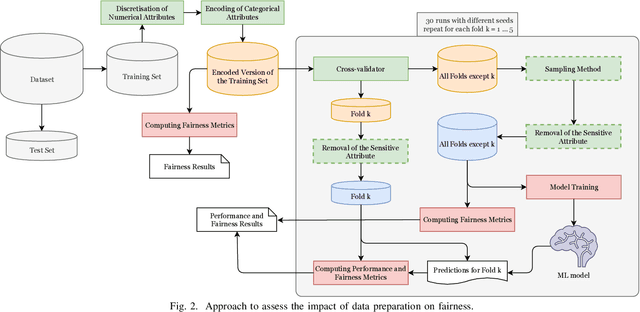

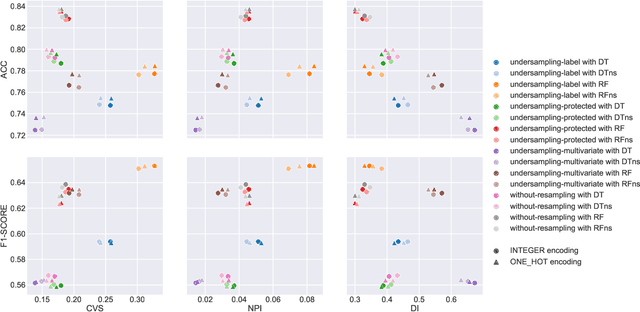

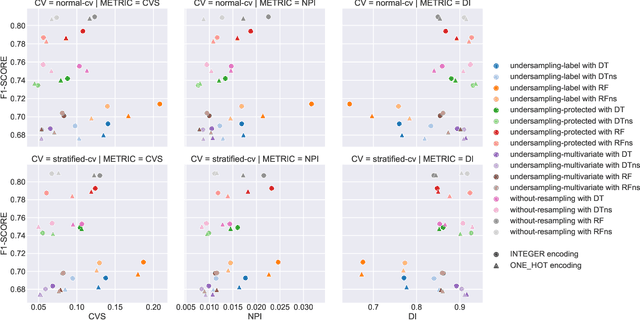

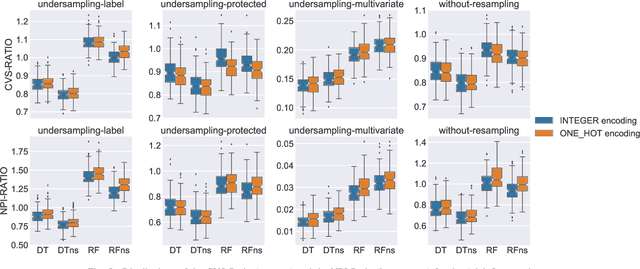

Abstract: Machine learning models are widely adopted in scenarios that directly affect people. The development of software systems based on these models raises societal and legal concerns, as their decisions may lead to the unfair treatment of individuals based on attributes like race or gender. Data preparation is key in any machine learning pipeline, but its effect on fairness is yet to be studied in detail. In this paper, we evaluate how the fairness and effectiveness of the learned models are affected by the removal of the sensitive attribute, the encoding of the categorical attributes, and instance selection methods (including cross-validators and random undersampling). We used the Adult Income and the German Credit Data datasets, which are widely studied and known to have fairness concerns. We applied each data preparation technique individually to analyse the difference in predictive performance and fairness, using statistical parity difference, disparate impact, and the normalised prejudice index. The results show that fairness is affected by transformations made to the training data, particularly in imbalanced datasets. Removing the sensitive attribute is insufficient to eliminate all the unfairness in the predictions, as expected, but it is key to achieve fairer models. Additionally, the standard random undersampling with respect to the true labels is sometimes more prejudicial than performing no random undersampling.
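
Two of the fairness measures named in this abstract have simple standard definitions, sketched below under stated assumptions. Statistical parity difference is taken here as the favourable-outcome rate of the unprivileged group minus that of the privileged group, and disparate impact as the ratio of the same two rates. The function names, the group labels, and the toy data are hypothetical and do not come from the paper; the normalised prejudice index is not covered.

```python
def positive_rate(predictions, groups, group_value):
    """Fraction of favourable (1) predictions among members of one group."""
    selected = [p for p, g in zip(predictions, groups) if g == group_value]
    if not selected:
        raise ValueError(f"no instances found for group {group_value!r}")
    return sum(selected) / len(selected)


def statistical_parity_difference(predictions, groups, unprivileged, privileged):
    """Unprivileged favourable rate minus privileged favourable rate (0 means parity)."""
    return (positive_rate(predictions, groups, unprivileged)
            - positive_rate(predictions, groups, privileged))


def disparate_impact(predictions, groups, unprivileged, privileged):
    """Unprivileged favourable rate divided by privileged favourable rate (1 means parity)."""
    privileged_rate = positive_rate(predictions, groups, privileged)
    if privileged_rate == 0:
        raise ZeroDivisionError("privileged group has no favourable predictions")
    return positive_rate(predictions, groups, unprivileged) / privileged_rate


# Toy data (illustrative only): 1 is the favourable prediction.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

spd = statistical_parity_difference(predictions, groups, unprivileged="b", privileged="a")
di = disparate_impact(predictions, groups, unprivileged="b", privileged="a")
```

In this toy data, group "a" receives the favourable prediction in 3 of 4 cases and group "b" in 1 of 4, so `spd` is -0.5 and `di` is one third, both indicating that the unprivileged group is treated less favourably.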
