Nouria Harbi

ERIC

Calling to CNN-LSTM for Rumor Detection: A Deep Multi-channel Model for Message Veracity Classification in Microblogs

Oct 11, 2021

Abstract: Reputed for their low-cost, easy-access, real-time and valuable information, social media also widely spread unverified or fake news. Rumors can notably cause severe damage to individuals and society. Therefore, rumor detection on social media has recently attracted tremendous attention. Most rumor detection approaches focus on rumor feature analysis and social features, i.e., metadata in social media. Unfortunately, these features are data-specific and may not always be available, e.g., when the rumor has just popped up and not yet propagated. In contrast, post contents (including images or videos) play an important role and can indicate the diffusion purpose of a rumor. Furthermore, rumor classification is also closely related to opinion mining and sentiment analysis. Yet, to the best of our knowledge, exploiting images and sentiments has been little investigated. Considering the multimodal features available from microblogs, we propose in this paper an end-to-end model called deepMONITOR that is based on deep neural networks and allows quite accurate automated rumor verification by utilizing all three characteristics: post textual and image contents, as well as sentiment. deepMONITOR concatenates image features with the joint text and sentiment features to produce a reliable, fused classification. We conduct extensive experiments on two large-scale, real-world datasets. The results show that deepMONITOR achieves a higher accuracy than state-of-the-art methods.
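The fusion step named in this abstract (concatenating image features with the joint text and sentiment features before classification) can be illustrated with a minimal sketch. This is not the deepMONITOR architecture: the function names `fuse` and `classify`, the toy feature vectors, and the single logistic unit standing in for the classifier are all assumptions made for illustration.

```python
import math

def fuse(text_sentiment_vec, image_vec):
    """Fuse two feature vectors by simple concatenation (hypothetical helper)."""
    return list(text_sentiment_vec) + list(image_vec)

def sigmoid(z):
    """Standard logistic function."""
    return 1.0 / (1.0 + math.exp(-z))

def classify(fused, weights, bias=0.0):
    """Score a fused vector with one logistic unit.

    This is a stand-in for a trained classifier head; the weights here
    are illustrative, not learned values from the paper.
    """
    if len(fused) != len(weights):
        raise ValueError("weights must match the fused vector length")
    score = sum(w * x for w, x in zip(weights, fused)) + bias
    return sigmoid(score)

# Toy example: 2 text+sentiment features and 1 image feature (made-up values).
fused = fuse([0.4, -0.2], [0.9])
probability = classify(fused, weights=[1.0, 1.0, 1.0])
print(len(fused), round(probability, 3))
```

In a real model the two input vectors would come from learned encoders, and the classifier would be trained jointly with them; the sketch only shows where concatenation sits in the pipeline.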

MONITOR: A Multimodal Fusion Framework to Assess Message Veracity in Social Networks

Sep 06, 2021

Abstract: Users of social networks tend to post and share content with little restraint. Hence, rumors and fake news can quickly spread on a huge scale. This may pose a threat to the credibility of social media and can cause serious consequences in real life. Therefore, the task of rumor detection and verification has become extremely important. Assessing the veracity of a social media message (e.g., by fact checkers) involves analyzing the text of the message, its context and any multimedia attachment. This is a very time-consuming task that can be greatly aided by machine learning. In the literature, most message veracity verification methods only exploit textual contents and metadata. Very few take both textual and visual contents, and more particularly images, into account. In this paper, we second the hypothesis that exploiting all of the components of a social media post enhances the accuracy of veracity detection. To further the state of the art, we first propose using a set of advanced image features inspired by the field of image quality assessment, which effectively contribute to rumor detection. These metrics are good indicators for the detection of fake images, even for those generated by advanced techniques like generative adversarial networks (GANs). Then, we introduce the Multimodal fusiON framework to assess message veracIty in social neTwORks (MONITOR), which exploits all message features (i.e., text, social context, and image features) by supervised machine learning. Such algorithms provide interpretability and explainability in the decisions taken, which we believe is particularly important in the context of rumor verification. Experimental results show that MONITOR can detect rumors with an accuracy of 96% and 89% on the MediaEval benchmark and the FakeNewsNet dataset, respectively. These results are significantly better than those of state-of-the-art machine learning baselines.

Including Images into Message Veracity Assessment in Social Media

Jul 20, 2020

Abstract: The extensive use of social media in the diffusion of information has also laid a fertile ground for the spread of rumors, which could significantly affect the credibility of social media. An ever-increasing number of users post news including, in addition to text, multimedia data such as images and videos. Yet, such multimedia content is easily editable due to the broad availability of simple and effective image and video processing tools. The problem of assessing the veracity of social network posts has attracted a lot of attention from researchers in recent years. However, almost all previous works have focused on analyzing textual contents to determine veracity, while visual contents, and more particularly images, remain ignored or little exploited in the literature. In this position paper, we propose a framework that explores two novel ways to assess the veracity of messages published on social networks by analyzing the credibility of both their textual and visual contents.

Combining Naive Bayes and Decision Tree for Adaptive Intrusion Detection

May 25, 2010

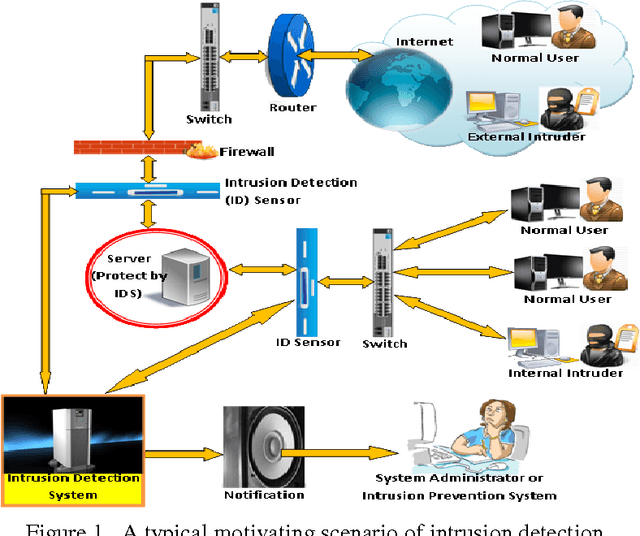

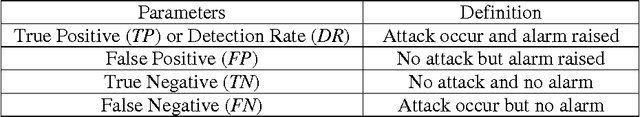

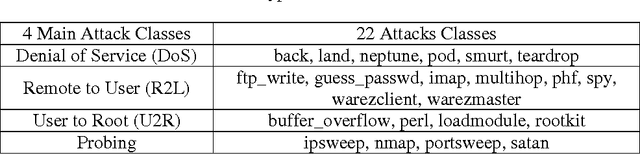

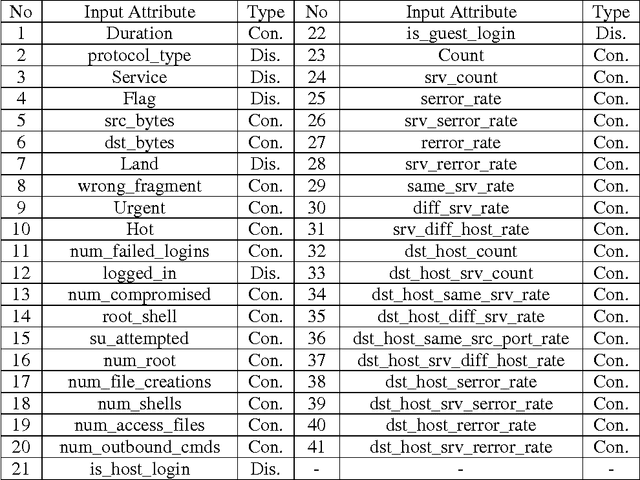

Abstract: In this paper, a new learning algorithm for adaptive network intrusion detection using a naive Bayesian classifier and a decision tree is presented. It performs balanced detection and keeps false positives at an acceptable level for different types of network attacks, and it eliminates redundant attributes as well as contradictory examples from the training data that make the detection model complex. The proposed algorithm also addresses some difficulties of data mining such as handling continuous attributes, dealing with missing attribute values, and reducing noise in training data. Due to the large volumes of security audit data as well as the complex and dynamic properties of intrusion behaviours, several data mining-based intrusion detection techniques have been applied to network-based traffic data and host-based data in the last decades. However, various issues in current intrusion detection systems (IDS) remain to be examined. We compared the performance of our proposed algorithm with that of existing learning algorithms on the KDD99 benchmark intrusion detection dataset. The experimental results show that the proposed algorithm achieved high detection rates (DR) and significantly reduced false positives (FP) for different types of network intrusions using limited computational resources.

* 14 Pages, IJNSA
