Noah Wardrip-Fruin

Clicking some of the silly options: Exploring Player Motivation in Static and Dynamic Educational Interactive Narratives

May 13, 2025

Abstract: Motivation is an important factor underlying successful learning. Previous research has demonstrated the positive effects that static interactive narrative games can have on motivation. Concurrently, advances in AI have made dynamic and adaptive approaches to interactive narrative increasingly accessible. However, limited work has explored the impact that dynamic narratives can have on learner motivation. In this paper, we compare two versions of Academical, a choice-based educational interactive narrative game about research ethics. One version employs a traditional hand-authored branching plot (i.e., static narrative) while the other dynamically sequences plots during play (i.e., dynamic narrative). Results highlight the importance of responsive content and a variety of choices for player engagement, while also illustrating the challenge of balancing pedagogical goals with the dynamic aspects of narrative. We also discuss design implications that arise from these findings. Ultimately, this work provides initial steps to illuminate the emerging potential of AI-driven dynamic narrative in educational games.

CFGs-2-NLU: Sequence-to-Sequence Learning for Mapping Utterances to Semantics and Pragmatics

Jul 22, 2016

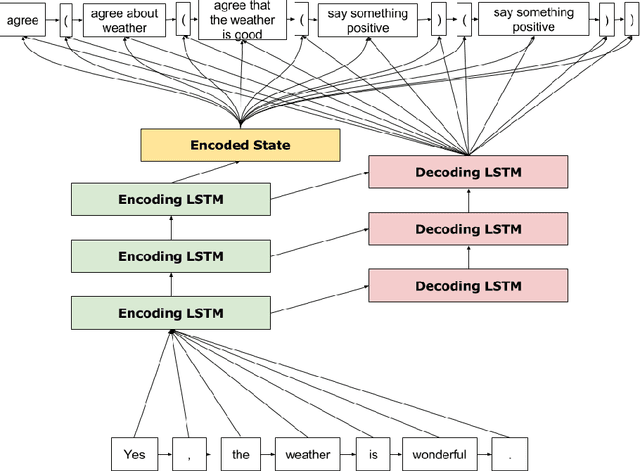

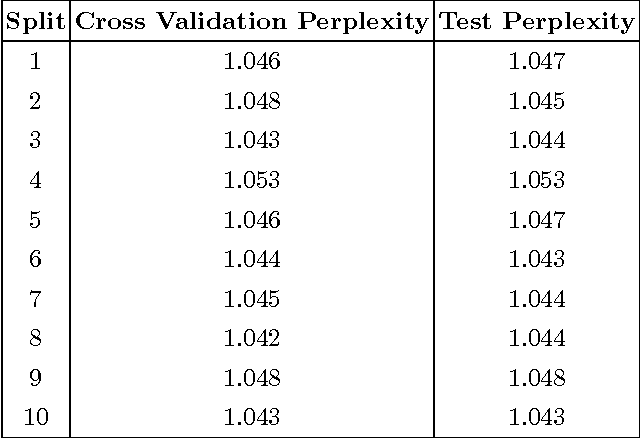

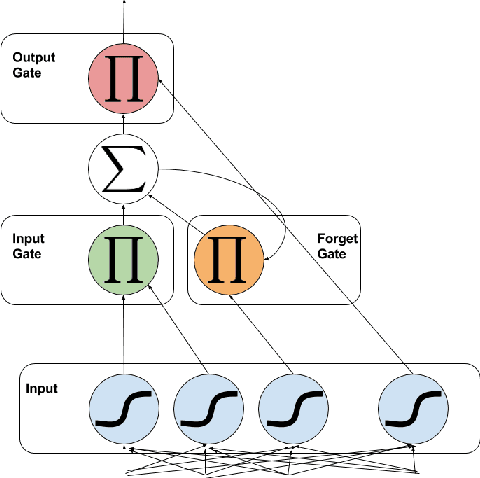

Abstract: In this paper, we present a novel approach to natural language understanding that utilizes context-free grammars (CFGs) in conjunction with sequence-to-sequence (seq2seq) deep learning. Specifically, we take a CFG authored to generate dialogue for our target application for NLU, a videogame, and train a long short-term memory (LSTM) recurrent neural network (RNN) to map the surface utterances that it produces to traces of the grammatical expansions that yielded them. Critically, this CFG was authored using a tool we have developed that supports arbitrary annotation of the nonterminal symbols in the grammar. Because we already annotated the symbols in this grammar for the semantic and pragmatic considerations that our game's dialogue manager operates over, we can use the grammatical trace associated with any surface utterance to infer such information. During gameplay, we translate player utterances into grammatical traces (using our RNN), collect the mark-up attributed to the symbols included in that trace, and pass this information to the dialogue manager, which updates the conversation state accordingly. From an offline evaluation task, we demonstrate that our trained RNN translates surface utterances to grammatical traces with great accuracy. To our knowledge, this is the first usage of seq2seq learning for conversational agents (our game's characters) who explicitly reason over semantic and pragmatic considerations.
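
The data flow described in this abstract can be illustrated with a minimal Python sketch. It is not the authors' tool or grammar: the toy grammar, the annotation tags, the `SYMBOL#index` trace format, and the function names `expand`, `markup_for`, and `training_pairs` are all assumptions made for illustration. The sketch only covers producing (utterance, trace) pairs from an annotated CFG and recovering mark-up from a trace; the seq2seq model that would map utterances to traces is not shown.

```python
import random

# Hypothetical toy grammar: nonterminal -> list of expansions (lists of symbols).
GRAMMAR = {
    "GREETING": [["hello", "NAME"], ["hi", "there"]],
    "NAME": [["friend"], ["stranger"]],
}

# Hypothetical mark-up attached to nonterminal symbols.
ANNOTATIONS = {
    "GREETING": {"speech_act:greet"},
    "NAME": {"refers_to:interlocutor"},
}


def expand(symbol, rng):
    """Randomly expand `symbol`; return (words, trace of expansions used)."""
    if symbol not in GRAMMAR:
        return [symbol], []  # terminal: contributes a word, no trace step
    index = rng.randrange(len(GRAMMAR[symbol]))
    words, trace = [], [f"{symbol}#{index}"]
    for child in GRAMMAR[symbol][index]:
        child_words, child_trace = expand(child, rng)
        words += child_words
        trace += child_trace
    return words, trace


def markup_for(trace):
    """Collect the annotations of every nonterminal that appears in a trace."""
    tags = set()
    for step in trace:
        nonterminal = step.split("#")[0]
        tags |= ANNOTATIONS.get(nonterminal, set())
    return tags


def training_pairs(start, n, seed=0):
    """Generate n (utterance, trace) string pairs, as seq2seq source/target."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        words, trace = expand(start, rng)
        pairs.append((" ".join(words), " ".join(trace)))
    return pairs
```

In this sketch, a trained model would take the first element of each pair as input and predict the second. At play time, the predicted trace would be split on whitespace and passed to `markup_for` to obtain the tags handed to a dialogue manager.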
