Nithin Raghunathan

Anomaly Detection through Transfer Learning in Agriculture and Manufacturing IoT Systems

Feb 11, 2021

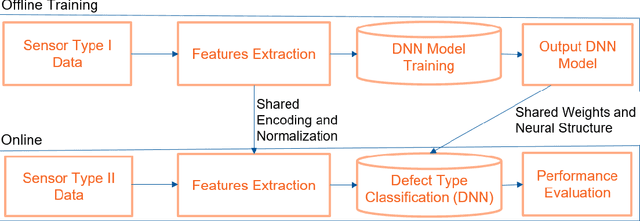

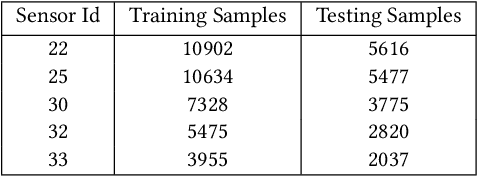

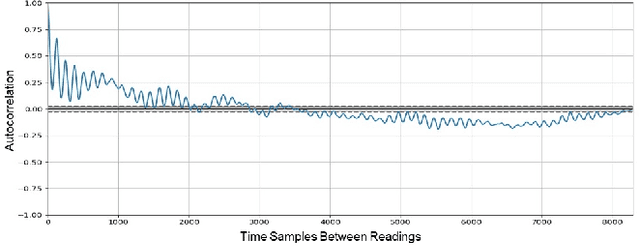

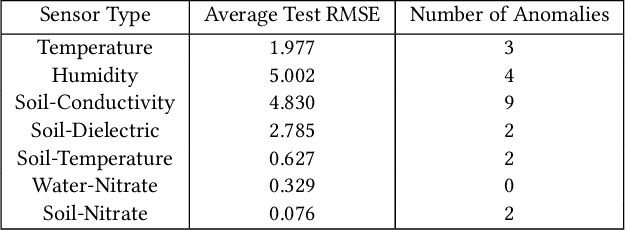

Abstract: IoT systems face increasingly sophisticated technical problems because of their growing complexity and fast deployment practices. Consequently, IoT managers have to judiciously detect failures (anomalies) in order to reduce their cyber risk and operational cost. While there is a rich literature on anomaly detection in many IoT-based systems, there is no existing work that documents the use of ML models for anomaly detection in digital agriculture and in smart manufacturing systems. These two application domains pose certain salient technical challenges. First, in agriculture the data is often sparse, due to the vast areas of farms and the requirement to keep the cost of monitoring low. Second, in both domains, there are multiple types of sensors with varying capabilities and costs. The sensor data characteristics change with the operating point of the environment or machines, such as the RPM of a motor. The inferencing and anomaly detection processes therefore have to be calibrated for the operating point. In this paper, we analyze data from seven different kinds of sensors deployed on an agricultural farm, and from vibration sensors on an advanced manufacturing testbed. We evaluate the performance of ARIMA and LSTM models for predicting the time series of sensor data. Then, for a sensor type with sparse data, we perform transfer learning from a high data rate sensor. We then perform anomaly detection using the predicted sensor data. Taken together, we show how predictive failure classification can be achieved in these two application domains, thus paving the way for predictive maintenance.
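The predict-then-detect step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: a plain autoregressive model fitted by least squares stands in for the ARIMA and LSTM predictors, the data is a synthetic sine wave with an injected spike, and the function names (`fit_ar`, `predict_one_step`, `detect_anomalies`) and the threshold multiplier `k` are all assumptions chosen for illustration. Transfer learning between sensors is not shown.

```python
import numpy as np

def _lagged(series, order):
    # Row t holds [s[t], ..., s[t+order-1]], the inputs used to predict s[t+order].
    n = len(series)
    lags = [series[i:n - order + i] for i in range(order)]
    return np.column_stack([np.ones(n - order)] + lags)

def fit_ar(series, order=3):
    """Fit an AR(order) model with intercept by ordinary least squares."""
    coef, *_ = np.linalg.lstsq(_lagged(series, order), series[order:], rcond=None)
    return coef

def predict_one_step(series, coef):
    """One-step-ahead predictions for series[order:]."""
    return _lagged(series, len(coef) - 1) @ coef

def detect_anomalies(series, coef, resid_std, k=4.0):
    """Return indices whose prediction error exceeds k * resid_std (k is an assumed threshold)."""
    order = len(coef) - 1
    errors = np.abs(series[order:] - predict_one_step(series, coef))
    return np.flatnonzero(errors > k * resid_std) + order

# Synthetic stand-in for a sensor trace: a sine wave plus small noise.
rng = np.random.default_rng(0)
t = np.arange(600)
signal = np.sin(2 * np.pi * t / 50) + 0.05 * rng.standard_normal(t.size)

train = signal[:400]
test = signal[400:].copy()
test[120] += 2.0  # injected fault, purely for illustration

coef = fit_ar(train, order=3)
resid_std = np.std(train[3:] - predict_one_step(train, coef))
flagged = detect_anomalies(test, coef, resid_std)
print("flagged test indices:", flagged)
```

Readings immediately after the spike may also be flagged, because the corrupted value feeds into the next few predictions. A calibrated detector would presumably fit a separate residual threshold per operating point, as the abstract suggests.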
