Nir Raviv

perm2vec: Graph Permutation Selection for Decoding of Error Correction Codes using Self-Attention

Feb 06, 2020

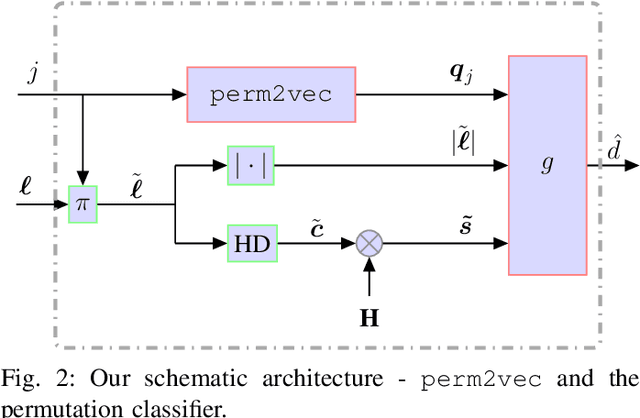

Abstract: Error correction codes are an integral part of communication applications, boosting the reliability of transmission. The optimal rule for decoding transmitted codewords is maximum likelihood (ML) decoding, which is NP-hard due to the curse of dimensionality. For practical realizations, suboptimal decoding algorithms are employed; yet limited theoretical insights prevent one from exploiting the full potential of these algorithms. One such insight is the choice of permutation in permutation decoding. We present a data-driven framework for permutation selection, combining domain knowledge with machine learning concepts such as node embedding and self-attention. Significant and consistent improvements in the bit error rate over the baseline decoders are demonstrated for all simulated codes. To the best of the authors' knowledge, this work is the first to leverage the benefits of neural Transformer networks in physical layer communication systems.
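The permutation-decoding loop that perm2vec plugs into can be sketched in a few lines: pick a permutation from the code's automorphism group, permute the channel LLRs, run a base decoder, and undo the permutation. This is a minimal sketch under stated assumptions, not the authors' implementation. The candidate set is assumed to be cyclic shifts (automorphisms of any cyclic code, such as BCH codes), and `reliability_score` is a hypothetical placeholder heuristic standing in for the learned node-embedding and self-attention score that perm2vec uses to rank permutations. `hard_decision` is a toy stand-in for a real base decoder such as belief propagation.

```python
from typing import Callable, List, Sequence

Perm = List[int]


def cyclic_shift(n: int, s: int) -> Perm:
    """Cyclic shift by s positions (an automorphism of any cyclic code)."""
    return [(i + s) % n for i in range(n)]


def apply_perm(x: Sequence, perm: Perm) -> list:
    """Return y with y[i] = x[perm[i]]."""
    return [x[p] for p in perm]


def inverse_perm(perm: Perm) -> Perm:
    """Return inv such that apply_perm(apply_perm(x, perm), inv) == list(x)."""
    inv = [0] * len(perm)
    for i, p in enumerate(perm):
        inv[p] = i
    return inv


def hard_decision(llr: Sequence[float]) -> List[int]:
    """Toy base decoder: bit 0 if LLR >= 0 else 1 (LLR = log P(0)/P(1))."""
    return [0 if v >= 0 else 1 for v in llr]


def reliability_score(llr: Sequence[float], perm: Perm, k: int = 4) -> float:
    """Hypothetical score: total |LLR| of the first k permuted positions.

    Placeholder only; perm2vec learns its permutation score instead.
    """
    return sum(abs(v) for v in apply_perm(llr, perm)[:k])


def permutation_decode(
    llr: Sequence[float],
    perms: List[Perm],
    score: Callable[[Sequence[float], Perm], float],
    decoder: Callable[[Sequence[float]], List[int]],
) -> List[int]:
    # 1) Select the permutation the scoring function ranks highest.
    best = max(perms, key=lambda p: score(llr, p))
    # 2) Decode the permuted channel output with the base decoder.
    decoded = decoder(apply_perm(llr, best))
    # 3) Undo the permutation so bits return to their original positions.
    return apply_perm(decoded, inverse_perm(best))


llr = [2.5, -0.3, 1.1, -4.0, 0.7, 3.2, -1.5]
perms = [cyclic_shift(7, s) for s in range(7)]
print(permutation_decode(llr, perms, reliability_score, hard_decision))
# → [0, 1, 0, 1, 0, 0, 1]
```

Because the toy hard-decision decoder treats every position independently, the chosen permutation cannot change its output; the example only checks the permute, decode, un-permute plumbing. Permutation choice matters for position-dependent decoders, such as belief propagation on a fixed parity-check matrix, which is where a learned selection rule can pay off.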

Active Deep Decoding of Linear Codes

Jun 06, 2019

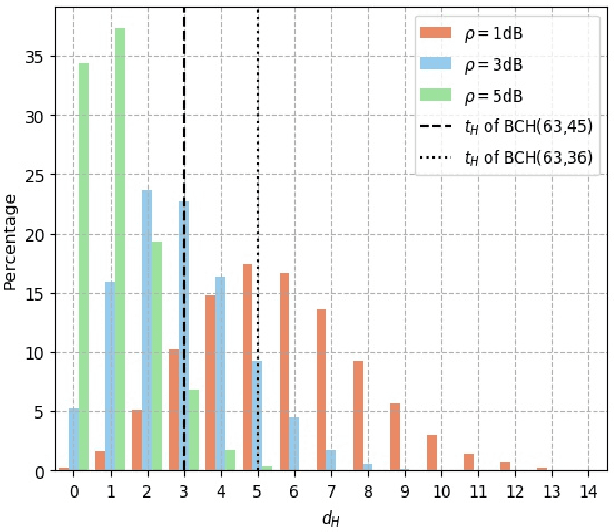

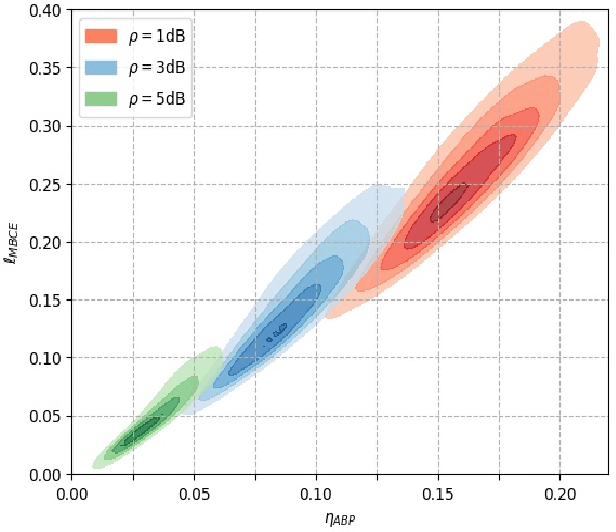

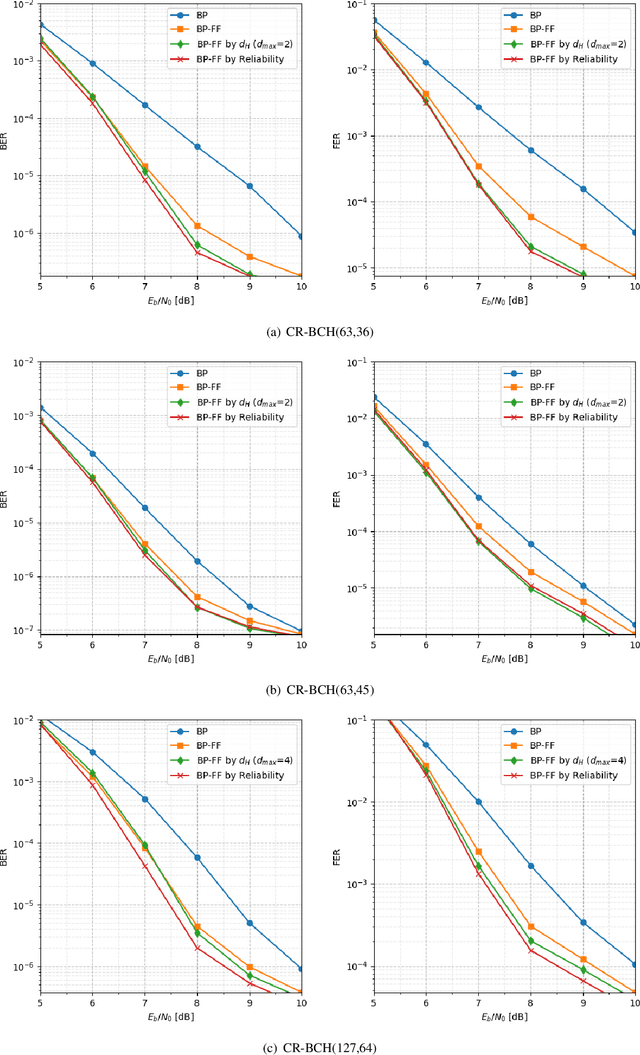

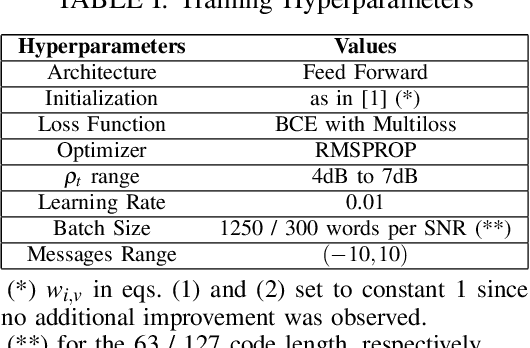

Abstract: High-quality data is essential in deep learning to train a robust model. While in other fields data is sparse and costly to collect, in error decoding it is free to query and label, which makes it possible to exploit the data itself. Utilizing this fact, and inspired by active learning, two novel methods are introduced to improve Weighted Belief Propagation (WBP) decoding. These methods combine machine-learning concepts with error decoding measures. For the BCH(63,36), BCH(63,45) and BCH(127,64) codes with cycle-reduced parity-check matrices, improvements of up to 1 dB in BER and FER are demonstrated by smartly sampling the training data, without increasing inference (decoding) complexity. The proposed methods serve as example guidelines for enhancing a model by incorporating domain knowledge from the error-correcting field into a deep learning model. These guidelines can be adapted to any other deep-learning-based communication block.
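The observation that decoding data is "free to query and label" can be made concrete: any number of labeled training examples can be simulated on demand, so a sampler is free to keep only the examples it considers informative. The sketch below is illustrative and assumes BPSK over an AWGN channel with the all-zero codeword transmitted (a common choice when training decoders of linear codes over symmetric channels). The selection rule, which keeps samples whose hard-decision error count falls in a window `[min_err, max_err]`, is a hypothetical stand-in. The abstract does not specify the paper's actual sampling criteria, so this should not be read as the authors' method. All function names and parameters here are hypothetical.

```python
import math
import random
from typing import List, Tuple

Sample = Tuple[List[float], List[int]]  # (channel LLRs, transmitted bits)


def sample_llrs(n: int, ebn0_db: float, rate: float, rng: random.Random) -> List[float]:
    """BPSK over AWGN for the all-zero codeword (bit 0 -> +1), returned as LLRs."""
    ebn0 = 10 ** (ebn0_db / 10)
    sigma2 = 1.0 / (2.0 * rate * ebn0)  # noise variance per real dimension
    sigma = math.sqrt(sigma2)
    y = [1.0 + rng.gauss(0.0, sigma) for _ in range(n)]
    return [2.0 * v / sigma2 for v in y]  # LLR = log P(bit=0|y) / P(bit=1|y)


def hard_errors(llr: List[float]) -> int:
    """Positions whose hard decision disagrees with the all-zero codeword."""
    return sum(1 for v in llr if v < 0)


def generate_dataset(
    n: int,
    k: int,
    ebn0_db: float,
    size: int,
    min_err: int,
    max_err: int,
    seed: int = 0,
    max_draws: int = 1_000_000,
) -> List[Sample]:
    """Simulate labeled samples, keeping only those that pass a selection rule."""
    rng = random.Random(seed)
    rate = k / n
    data: List[Sample] = []
    for _ in range(max_draws):  # guard against an unattainable error window
        if len(data) >= size:
            break
        llr = sample_llrs(n, ebn0_db, rate, rng)
        # Hypothetical selection rule: skip samples that are trivially easy
        # (too few errors) or hopeless (too many errors).
        if min_err <= hard_errors(llr) <= max_err:
            data.append((llr, [0] * n))  # the label is the all-zero codeword
    return data


# Example: 20 samples for a length-63, dimension-36 code at Eb/N0 = 4 dB.
dataset = generate_dataset(63, 36, 4.0, size=20, min_err=1, max_err=5, seed=1)
print(len(dataset), [hard_errors(llr) for llr, _ in dataset][:5])
```

Because labels cost nothing, the selection step changes only which examples the WBP model trains on. The decoder itself is unchanged at inference time, which is consistent with the abstract's claim that decoding complexity does not increase.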
