Nikos G. Evgenidis

Improving Reliability of Hybrid Bit-Semantic Communications for Cellular Networks

Feb 09, 2026

Abstract: Semantic communications (SemComs) have been considered a promising solution for reducing the amount of transmitted information, thus paving the way for more energy- and spectrum-efficient wireless networks. Nevertheless, SemComs rely heavily on deep neural networks (DNNs) at the transceivers, which limit the achievable fidelity between the original and reconstructed data and are challenging to implement in practice due to their increased architectural complexity. Thus, hybrid cellular networks that utilize both conventional bit communications (BitComs) and SemComs have been introduced to bridge the gap between the required and the existing infrastructure. To facilitate such networks, in this work, we investigate reliability by deriving closed-form expressions for the outage probability of the network. Additionally, we propose a generalized outage probability, through which the cell radius that achieves a desired outage threshold for a specific range of users is calculated in closed form. Furthermore, to account for the practical limitations caused by the specialized dedicated hardware and the increased memory and computational resources required to support SemComs, a semantic utilization metric is proposed. Based on this metric, we express the probability that a specific number of users select SemCom transmission and calculate, in closed form, the optimal cell radius for that number. Simulation results validate the derived analytical expressions and the design properties of the cell radius characterized through the proposed metrics, providing useful insights.
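The abstract does not state the channel model, so the following is only a generic sketch under assumed conditions: users dropped uniformly in a disc of radius R around the base station, Rayleigh fading, a path-loss exponent alpha, and a single SNR threshold gamma_th. It is not the authors' hybrid BitCom/SemCom analysis. It compares a Monte Carlo outage estimate with the conditional outage 1 - exp(-gamma_th * d^alpha / rho) averaged numerically over the distance distribution, then uses bisection to find the largest cell radius that meets an outage target. All function and parameter names are hypothetical.

```python
import numpy as np

def conditional_outage(d, rho, gamma_th, alpha):
    """P(rho * |h|^2 * d^-alpha < gamma_th) for Rayleigh fading, |h|^2 ~ Exp(1)."""
    return 1.0 - np.exp(-gamma_th * np.asarray(d, dtype=float) ** alpha / rho)

def outage_monte_carlo(R, rho, gamma_th, alpha, n=200_000, seed=0):
    """Monte Carlo outage for users dropped uniformly in a disc of radius R."""
    rng = np.random.default_rng(seed)
    d = R * np.sqrt(rng.random(n))              # uniform-in-area user distances
    h = rng.exponential(1.0, n)                 # Rayleigh fading power gains
    # outage event written without dividing by d (avoids issues at d = 0)
    return float(np.mean(h < gamma_th * d ** alpha / rho))

def outage_numerical(R, rho, gamma_th, alpha, n_grid=20_000):
    """Average the conditional outage over the distance pdf 2d / R^2 (midpoint rule)."""
    edges = np.linspace(0.0, R, n_grid + 1)
    d = 0.5 * (edges[:-1] + edges[1:])
    pdf = 2.0 * d / R ** 2
    return float(np.sum(conditional_outage(d, rho, gamma_th, alpha) * pdf) * R / n_grid)

def max_cell_radius(target, rho, gamma_th, alpha, r_lo=1e-3, r_hi=1e4, iters=60):
    """Largest radius whose average outage is at or below target.

    Bisection is valid here because, in this model, outage increases with R.
    """
    for _ in range(iters):
        mid = 0.5 * (r_lo + r_hi)
        if outage_numerical(mid, rho, gamma_th, alpha) <= target:
            r_lo = mid
        else:
            r_hi = mid
    return r_lo

if __name__ == "__main__":
    rho, alpha = 1e6, 3.0            # hypothetical SNR at unit distance and path-loss exponent
    gamma_th = 2.0 ** 1.0 - 1.0      # SNR threshold for a 1 bit/s/Hz target rate
    print(outage_monte_carlo(100.0, rho, gamma_th, alpha),
          outage_numerical(100.0, rho, gamma_th, alpha))
    print(max_cell_radius(0.1, rho, gamma_th, alpha))
```

With these placeholder values both estimates come out at about 0.30 for R = 100, and the bisection returns a smaller radius for a 10% outage target. A closed-form expression, such as the one the abstract mentions for its own model, would replace this numerical search.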

Split Learning in Computer Vision for Semantic Segmentation Delay Minimization

Dec 18, 2024

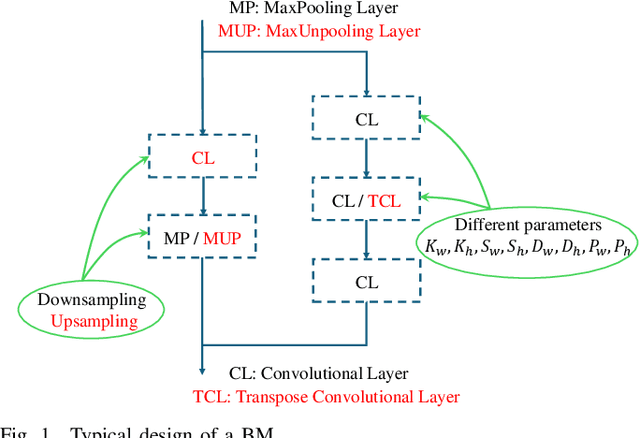

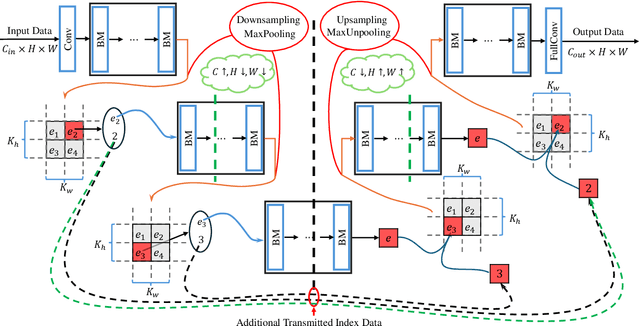

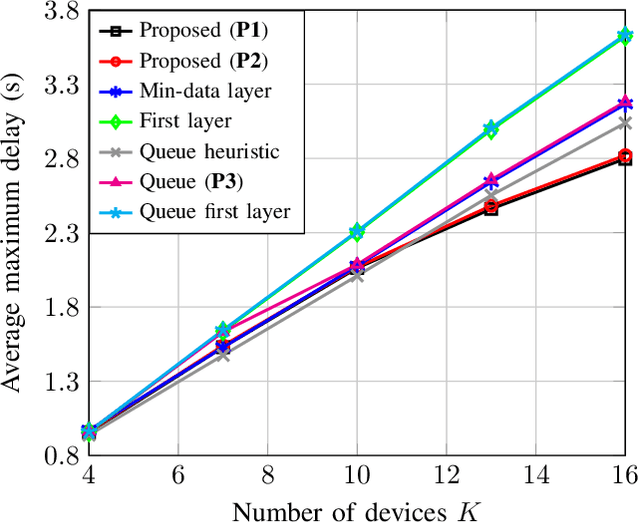

Abstract: In this paper, we propose a novel approach to minimize the inference delay in semantic segmentation using split learning (SL), tailored to the needs of real-time computer vision (CV) applications for resource-constrained devices. Semantic segmentation is essential for applications such as autonomous vehicles and smart city infrastructure, but faces significant latency challenges due to high computational and communication loads. Traditional centralized processing methods are inefficient for such scenarios, often resulting in unacceptable inference delays. SL offers a promising alternative by partitioning deep neural networks (DNNs) between edge devices and a central server, enabling localized data processing and reducing the amount of data required for transmission. Our contribution includes the joint optimization of bandwidth allocation, cut layer selection of the edge devices' DNN, and the central server's processing resource allocation. We investigate both parallel and serial data processing scenarios and propose low-complexity heuristic solutions that maintain near-optimal performance while reducing computational requirements. Numerical results show that our approach effectively reduces inference delay, demonstrating the potential of SL for improving real-time CV applications in dynamic, resource-constrained environments.
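The delay trade-off behind cut-layer selection can be illustrated with a toy model that is an assumption, not the paper's formulation: a single device runs the first c layers locally, uploads the activation of layer c (or the raw input when c = 0) at a Shannon rate, and the server runs the remaining layers. The sketch brute-forces the cut for a fixed bandwidth and server speed; the joint bandwidth and server-resource allocation and the parallel/serial scenarios studied in the paper are not modeled. All layer sizes, speeds, and names are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Layer:
    flops: float     # compute load of the layer (FLOPs)
    out_bits: float  # size of the layer's output activation (bits)

def split_delay(layers, cut, input_bits, f_dev, f_srv, rate_bps):
    """Delay when layers[:cut] run on the device and layers[cut:] on the server.

    cut == 0 uploads the raw input; cut == len(layers) runs everything locally
    and uploads the final output.
    """
    local = sum(layer.flops for layer in layers[:cut]) / f_dev
    sent_bits = input_bits if cut == 0 else layers[cut - 1].out_bits
    uplink = sent_bits / rate_bps
    remote = sum(layer.flops for layer in layers[cut:]) / f_srv
    return local + uplink + remote

def best_cut(layers, input_bits, f_dev, f_srv, bandwidth_hz, snr):
    """Exhaustive search over all len(layers) + 1 cut positions."""
    rate = bandwidth_hz * math.log2(1.0 + snr)   # Shannon-rate uplink (bits/s)
    delays = [split_delay(layers, c, input_bits, f_dev, f_srv, rate)
              for c in range(len(layers) + 1)]
    c_star = min(range(len(delays)), key=delays.__getitem__)
    return c_star, delays[c_star]

if __name__ == "__main__":
    # hypothetical 4-layer network; activations shrink after the first layer
    net = [Layer(2e9, 16e6), Layer(4e9, 4e6), Layer(4e9, 1e6), Layer(8e9, 0.5e6)]
    print(best_cut(net, input_bits=8 * 512 * 512 * 3, f_dev=1e10, f_srv=1e12,
                   bandwidth_hz=1e6, snr=15.0))
```

With these toy numbers the search selects a cut after the third layer (about 1.26 s), because uploading the raw image or the expanded first-layer activation costs more than the extra local computation.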

Hybrid Semantic-Shannon Communications

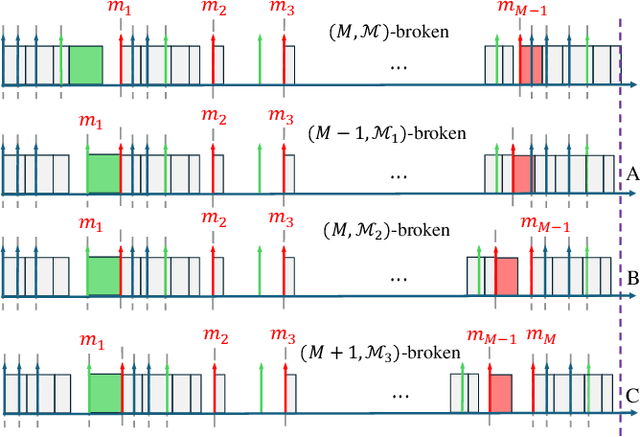

Oct 02, 2024

Abstract: Semantic communications are considered a promising beyond-Shannon/bit paradigm to reduce network traffic and increase reliability, thus making wireless networks more energy efficient, robust, and sustainable. However, their performance is limited by the efficiency of the semantic transceivers, i.e., the achievable "similarity" between the transmitted and received signals. Under strict similarity conditions, semantic transmission may not be applicable, and bit communication is mandatory. In this paper, for the first time in the literature, we propose a multi-carrier Hybrid Semantic-Shannon communication system where, without loss of generality, the case of text transmission is investigated. To this end, a joint semantic-bit transmission selection and power allocation optimization problem is formulated for each of two transmission delay metrics widely used in the literature, with the aim of minimizing the delay subject to strict similarity thresholds. Despite their non-convexity, both problems are decomposed, via alternating optimization, into a convex subproblem and a mixed-integer linear programming subproblem, both of which can be solved optimally. Furthermore, to improve the performance of the proposed hybrid schemes, a novel association of text sentences to subcarriers is proposed based on the data size of the sentences and the channel gains of the subcarriers. We show that the proposed association is optimal in terms of transmission delay. Numerical simulations verify the effectiveness of the proposed hybrid semantic-bit communication scheme and the derived sentence-to-subcarrier association, and provide useful insights into the design parameters of such systems.
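As a hedged illustration of the two ingredients named above, the sketch below (i) uses a sentence's semantic payload only when its predicted similarity meets the threshold, and (ii) pairs payloads with subcarriers by sorting both, placing the largest payload on the fastest subcarrier. It assumes one sentence per subcarrier and fixed per-subcarrier rates (power is not optimized). The sorting rule is a standard exchange-argument result for this simplified setting and is not necessarily the association proposed in the paper. A brute-force search over all assignments checks that sorted pairing minimizes both the sum and the maximum of the per-sentence delays. All names and numbers are hypothetical.

```python
import itertools
import math

def select_payload(bit_bits, sem_bits, sem_similarity, threshold):
    """Use the semantic payload only if its similarity meets the threshold."""
    return sem_bits if sem_similarity >= threshold else bit_bits

def sorted_association(sizes, rates):
    """Largest payload -> fastest subcarrier, second largest -> second fastest, ..."""
    by_size = sorted(range(len(sizes)), key=lambda i: sizes[i], reverse=True)
    by_rate = sorted(range(len(rates)), key=lambda j: rates[j], reverse=True)
    return dict(zip(by_size, by_rate))           # sentence index -> subcarrier index

def delays(sizes, rates, assoc):
    """Per-sentence transmission delay for a given sentence -> subcarrier map."""
    return [sizes[i] / rates[j] for i, j in assoc.items()]

def brute_force(sizes, rates, objective):
    """Best objective value over all one-to-one sentence/subcarrier assignments."""
    return min(objective(delays(sizes, rates, dict(enumerate(perm))))
               for perm in itertools.permutations(range(len(rates))))

if __name__ == "__main__":
    # hypothetical sentences: (bit-based size, semantic size, predicted similarity)
    sentences = [(800, 200, 0.95), (1200, 300, 0.80), (400, 120, 0.92), (1600, 350, 0.97)]
    sizes = [select_payload(b, s, sim, threshold=0.9) for b, s, sim in sentences]
    gains, power, noise = [0.2, 1.5, 0.7, 3.0], 1.0, 0.1
    # rates in bits/s per unit bandwidth, equal power on every subcarrier
    rates = [math.log2(1.0 + power * g / noise) for g in gains]
    d = delays(sizes, rates, sorted_association(sizes, rates))
    print(sizes, math.isclose(sum(d), brute_force(sizes, rates, sum)),
          math.isclose(max(d), brute_force(sizes, rates, max)))
```

Both checks hold for any fixed rates: for the sum, this is the rearrangement inequality applied to sizes and inverse rates; for the maximum, swapping any "crossed" pair never lowers the larger of the two delays.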

Waveform Design for Over-the-Air Computing

May 31, 2024

Abstract: In response to the increasing number of devices anticipated in next-generation networks, a shift toward over-the-air (OTA) computing has been proposed. Leveraging the superposition property of the multiple-access channel, OTA computing enables efficient resource management by supporting simultaneous uncoded transmission in the time and frequency domains. Thus, to advance the integration of OTA computing, our study presents a theoretical analysis addressing practical issues encountered in current digital communication transceivers, such as time sampling error and intersymbol interference (ISI). To this end, we examine the theoretical mean squared error (MSE) of OTA transmission under time sampling error and ISI, and we explore methods for minimizing it. Utilizing alternating optimization, we also derive optimal power policies for both the devices and the base station. Additionally, we propose a novel deep neural network (DNN)-based approach to design waveforms that enhance OTA transmission performance under time sampling error and ISI. To ensure a fair comparison with existing waveforms such as the raised cosine (RC) and the better-than-raised-cosine (BTRC), we incorporate a custom loss function that integrates energy and bandwidth constraints, along with practical design considerations such as waveform symmetry. Simulation results validate our theoretical analysis and demonstrate performance gains of the designed pulse over the RC and BTRC waveforms. To facilitate testing of our results without recreating the DNN structure, we also provide curve-fitting parameters for selected DNN-based waveforms.
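To make the effect of a sampling offset concrete, the sketch below evaluates the standard raised-cosine pulse (symbol period normalized to T = 1) and the resulting error of an over-the-air sum. For illustration only, it assumes unit channel gains, no noise, and independent zero-mean unit-variance symbols. Under these assumptions the MSE of the aggregated sample is the sum over devices n of (1 - p(tau_n))^2 + sum_{k != 0} p(kT + tau_n)^2, where tau_n is device n's timing offset. This is not the paper's MSE expression or power policy, and neither the BTRC pulse nor the DNN-designed waveforms are reproduced. Function names are hypothetical.

```python
import numpy as np

def raised_cosine(t, beta):
    """Raised-cosine pulse with T = 1 and roll-off beta in [0, 1]; p(0) = 1."""
    t = np.asarray(t, dtype=float)
    denom = 1.0 - (2.0 * beta * t) ** 2
    singular = np.isclose(denom, 0.0)            # t = +/- 1/(2*beta)
    safe = np.where(singular, 1.0, denom)        # avoid division by zero
    regular = np.sinc(t) * np.cos(np.pi * beta * t) / safe
    # value of the removable singularity (np.sinc is the normalized sinc)
    limit = (np.pi / 4.0) * np.sinc(1.0 / (2.0 * beta)) if beta > 0 else 0.0
    return np.where(singular, limit, regular)

def ota_sum_mse(offsets, beta, n_taps=50):
    """MSE of the superimposed sample vs. the desired sum, one timing offset per device.

    Assumes unit channels, no noise, and i.i.d. zero-mean unit-variance symbols,
    and truncates the ISI sum to |k| <= n_taps symbol periods.
    """
    k = np.arange(-n_taps, n_taps + 1)
    mse = 0.0
    for tau in offsets:
        p = raised_cosine(k + tau, beta)         # p(kT + tau) with T = 1
        desired = p[n_taps]                      # k = 0: attenuated useful term
        isi = np.sum(p ** 2) - desired ** 2      # energy leaked from neighboring symbols
        mse += (1.0 - desired) ** 2 + isi
    return float(mse)

if __name__ == "__main__":
    for tau in (0.0, 0.1, 0.2):
        print(tau, ota_sum_mse([tau] * 3, beta=0.35))
```

In this simplified model the error vanishes at perfect timing, because the RC pulse satisfies the Nyquist zero-ISI criterion, and it grows with the offset; this is the kind of degradation the abstract's MSE analysis and waveform design address.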
