Nikita Zeulin

Resource-Efficient Federated Hyperdimensional Computing

Jun 02, 2023

Abstract: In conventional federated hyperdimensional computing (HDC), training larger models usually results in higher predictive performance but also requires more computational, communication, and energy resources. If the system resources are limited, one may have to sacrifice the predictive performance by reducing the size of the HDC model. The proposed resource-efficient federated hyperdimensional computing (RE-FHDC) framework alleviates such constraints by training multiple smaller independent HDC sub-models and refining the concatenated HDC model using the proposed dropout-inspired procedure. Our numerical comparison demonstrates that the proposed framework achieves a comparable or higher predictive performance while consuming less computational and wireless resources than the baseline federated HDC implementation.

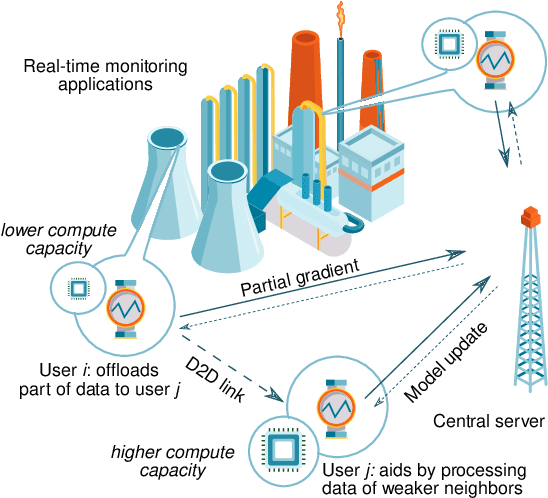

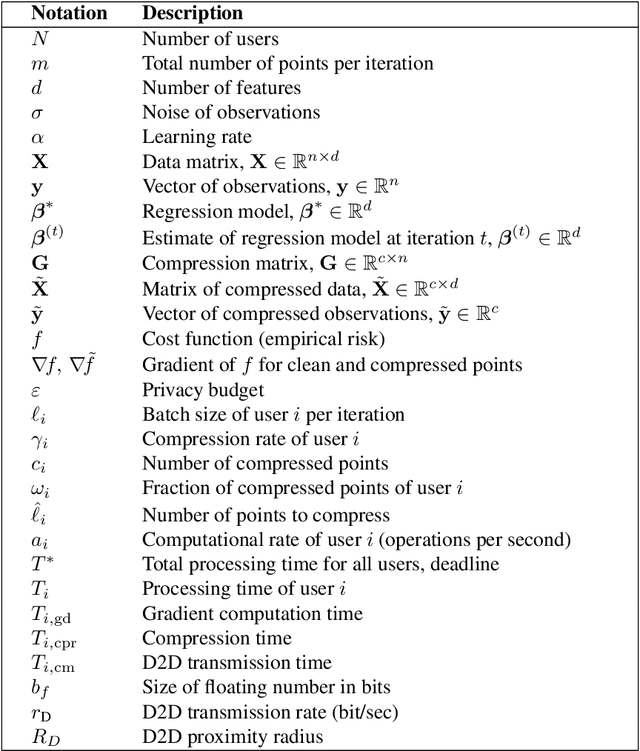

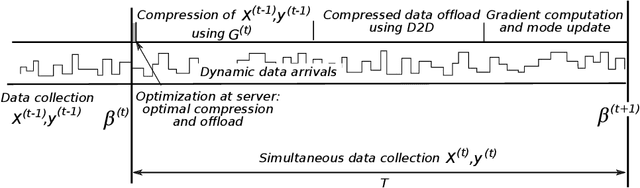

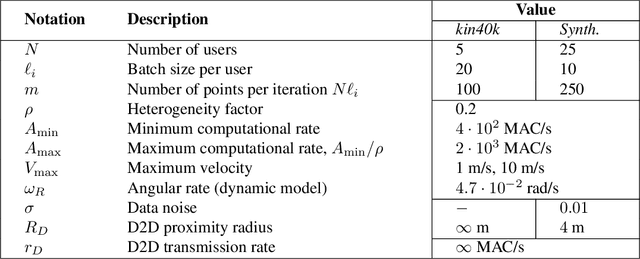

Dynamic Network-Assisted D2D-Aided Coded Distributed Learning

Nov 26, 2021

Abstract: Today, various machine learning (ML) applications offer continuous data processing and real-time data analytics at the edge of a wireless network. Distributed ML solutions are seriously challenged by resource heterogeneity, in particular, the so-called straggler effect. To address this issue, we design a novel device-to-device (D2D)-aided coded federated learning method (D2D-CFL) for load balancing across devices while characterizing privacy leakage. The proposed solution captures system dynamics, including data (time-dependent learning model, varied intensity of data arrivals), device (diverse computational resources and volume of training data), and deployment (varied locations and D2D graph connectivity). We derive an optimal compression rate for achieving minimum processing time and establish its connection with the convergence time. The resulting optimization problem provides suboptimal compression parameters, which improve the total training time. Our proposed method is beneficial for real-time collaborative applications, where the users continuously generate training data.
