Niki Gitinabard

How Widely Can Prediction Models be Generalized? An Analysis of Performance Prediction in Blended Courses

Apr 15, 2019

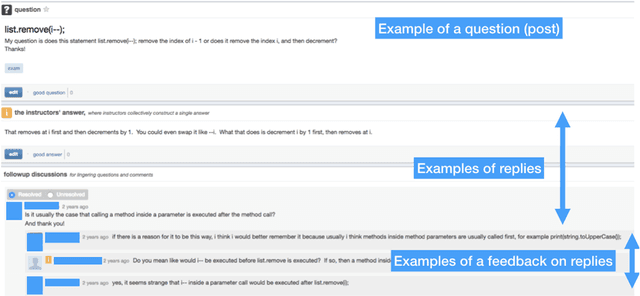

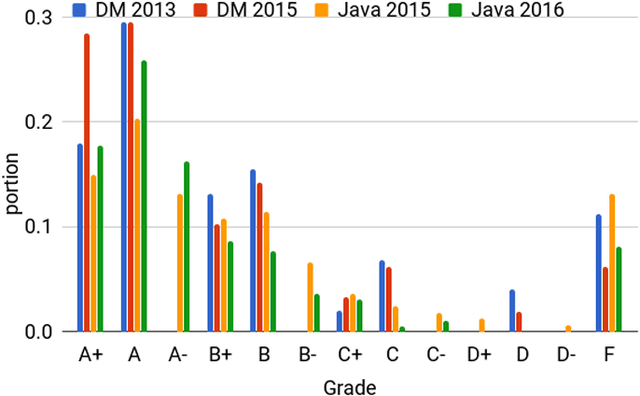

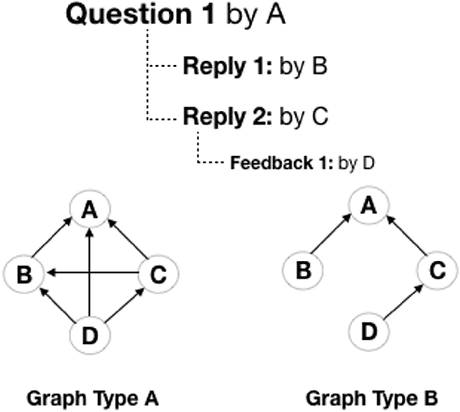

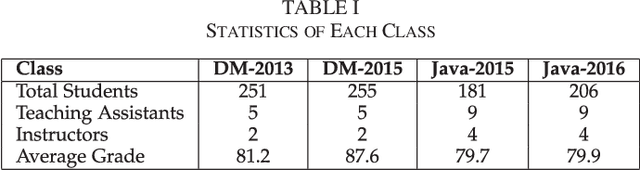

Abstract: Blended courses that mix in-person instruction with online platforms are increasingly popular in secondary education. These platforms record rich data on students' study habits and social interactions. Prior research has shown that these metrics are correlated with students' performance in face-to-face classes. However, predictive models for blended courses are still limited and have not yet succeeded at early prediction or at cross-class prediction, even for repeated offerings of the same course. In this work, we use data from two offerings of two different undergraduate courses to train and evaluate predictive models of student performance based upon persistent student characteristics, including study habits and social interactions. We analyze the performance of these models on the same offering, on different offerings of the same course, and across courses to see how well they generalize. We also evaluate the models on different segments of the courses to determine how early reliable predictions can be made. This work tells us in part how much data is required to make robust predictions and how cross-class data may be used, or not, to boost model performance. The results of this study will help us better understand how similar study habits, social activities, and teamwork styles are across semesters for students in each performance category. These trained models also provide an avenue to improve our existing support platforms so that struggling students can be identified early in the semester and offered timely intervention.

Your Actions or Your Associates? Predicting Certification and Dropout in MOOCs with Behavioral and Social Features

Aug 31, 2018

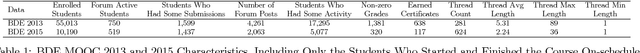

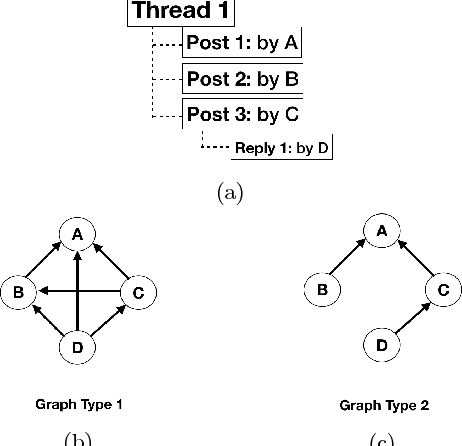

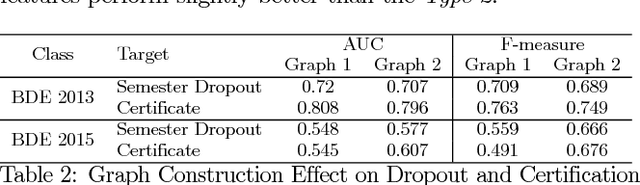

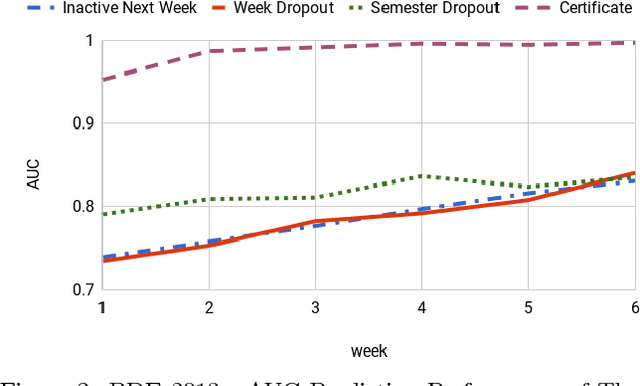

Abstract: The high level of attrition and low rate of certification in Massive Open Online Courses (MOOCs) have prompted a great deal of research. Prior researchers have focused on predicting dropout based upon behavioral features such as student confusion, click-stream patterns, and social interactions. However, few studies have focused on combining student logs with forum data. In this work, we use data from two different offerings of the same MOOC. We conduct a survival analysis to identify likely dropouts. We then examine two classes of features, social and behavioral, and apply a combination of modeling and feature-selection methods to identify the most relevant features for predicting both dropout and certification. We examine the utility of three different model types, and we consider the impact of different definitions of dropout on the predictors. Finally, we assess the reliability of the models over time by evaluating whether or not models from week 1 can predict dropout in week 2, and so on. The outcomes of this study will help instructors identify students likely to fail or drop out as early as the first two weeks and provide them with more support.
