Nidhal Carla Bouaynaya

Adversarially Diversified Rehearsal Memory (ADRM): Mitigating Memory Overfitting Challenge in Continual Learning

May 20, 2024

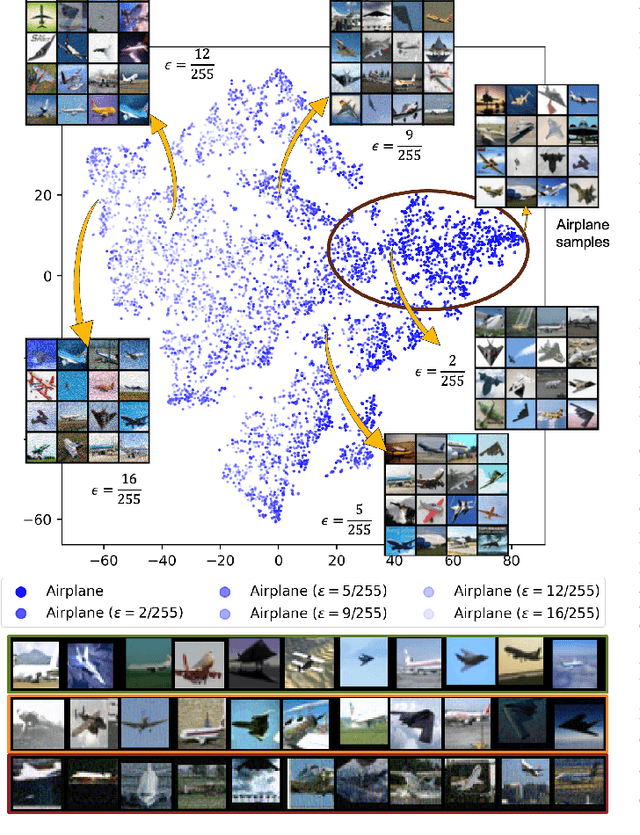

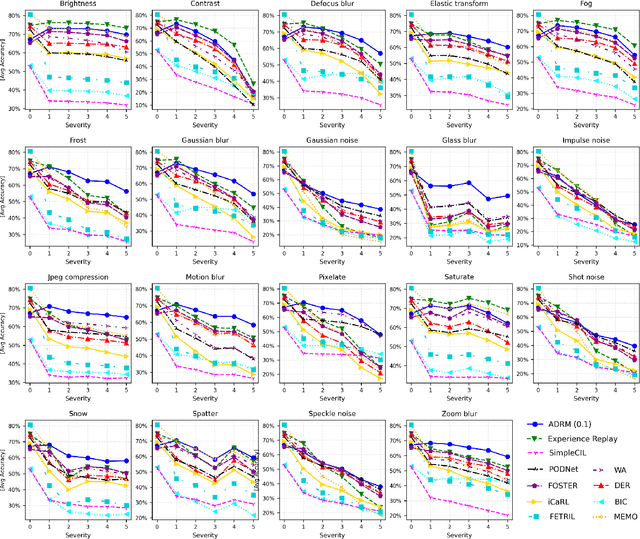

Abstract: Continual learning focuses on learning from a non-stationary data distribution without forgetting previous knowledge. Rehearsal-based approaches are commonly used to combat catastrophic forgetting. However, these approaches suffer from a problem called "rehearsal memory overfitting," where the model becomes too specialized on the limited memory samples and loses its ability to generalize effectively. As a result, the effectiveness of the rehearsal memory progressively decays, ultimately resulting in catastrophic forgetting of the learned tasks. We introduce the Adversarially Diversified Rehearsal Memory (ADRM) to address the memory overfitting challenge. This novel method is designed to enrich memory sample diversity and bolster resistance against natural and adversarial noise disruptions. ADRM employs Fast Gradient Sign Method (FGSM) attacks to introduce adversarially modified memory samples, achieving two primary objectives: enhancing memory diversity and fostering a robust response to continual feature drifts in memory samples. Our contributions are as follows. First, ADRM addresses overfitting in rehearsal memory by employing FGSM to diversify and increase the complexity of the memory buffer. Second, we demonstrate that ADRM mitigates memory overfitting and significantly improves the robustness of CL models, which is crucial for safety-critical applications. Finally, our detailed analysis of features and visualization demonstrates that ADRM mitigates feature drifts in CL memory samples, significantly reducing catastrophic forgetting and resulting in a more resilient CL model. Additionally, our in-depth t-SNE visualizations of feature distributions and our quantification of feature similarity further enrich our understanding of feature representation in existing CL approaches. Our code is publicly available at https://github.com/hikmatkhan/ADRM.
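To illustrate the idea of diversifying a rehearsal buffer with FGSM, here is a minimal sketch. It is not the authors' implementation (see the repository linked above for that). It assumes a toy logistic-regression model so that the input gradient can be written by hand, and the names `fgsm`, `diversify_memory`, and the step size `eps` are hypothetical choices made for this example. FGSM perturbs each input by `eps` in the direction of the sign of the loss gradient with respect to that input.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w, b, x):
    """Probability of class 1 under a toy logistic-regression model (assumption)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def bce_loss(w, b, x, y):
    """Binary cross-entropy loss for one sample, clipped to avoid log(0)."""
    p = min(max(predict(w, b, x), 1e-12), 1.0 - 1e-12)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def fgsm(w, b, x, y, eps):
    """One FGSM step: x_adv = x + eps * sign(dLoss/dx).

    For logistic regression with cross-entropy, dLoss/dx = (p - y) * w.
    """
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * ((gi > 0) - (gi < 0)) for xi, gi in zip(x, grad)]


def diversify_memory(w, b, memory, eps):
    """Return the original buffer plus one FGSM-perturbed copy of each sample.

    Hypothetical helper: how ADRM actually mixes clean and adversarial
    samples is not specified in the abstract.
    """
    adversarial = [(fgsm(w, b, x, y, eps), y) for x, y in memory]
    return list(memory) + adversarial
```

For example, `diversify_memory([0.8, -0.3], 0.1, [([1.0, -2.0], 1), ([0.5, 0.5], 0)], eps=0.1)` returns four samples: the two originals and two perturbed copies with the same labels. Each perturbed copy lies within `eps` of its original in every coordinate and has a higher loss under the current model, which is what makes it a harder rehearsal example.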

Brain-Inspired Continual Learning-Robust Feature Distillation and Re-Consolidation for Class Incremental Learning

Apr 22, 2024

Abstract: Artificial intelligence (AI) and neuroscience share a rich history, with advancements in neuroscience shaping the development of AI systems capable of human-like knowledge retention. Leveraging insights from neuroscience and existing research in adversarial and continual learning, we introduce a novel framework comprising two core concepts: feature distillation and re-consolidation. Our framework, named Robust Rehearsal, addresses the challenge of catastrophic forgetting inherent in continual learning (CL) systems by distilling and rehearsing robust features. Inspired by the mammalian brain's memory consolidation process, Robust Rehearsal aims to emulate the rehearsal of distilled experiences during learning tasks. Additionally, it mimics memory re-consolidation, where new experiences influence the integration of past experiences to mitigate forgetting. Extensive experiments conducted on CIFAR10, CIFAR100, and real-world helicopter attitude datasets showcase the superior performance of CL models trained with Robust Rehearsal compared to baseline methods. Furthermore, by examining different optimization training objectives (joint, continual, and adversarial learning), we highlight the crucial role of feature learning in model performance. This underscores the significance of rehearsing CL-robust samples in mitigating catastrophic forgetting. In conclusion, aligning CL approaches with neuroscience insights offers promising solutions to the challenge of catastrophic forgetting, paving the way for more robust and human-like AI systems.

Deep Ensemble for Rotorcraft Attitude Prediction

Jun 29, 2023

Abstract: Historically, the rotorcraft community has experienced a higher fatal accident rate than other aviation segments, including commercial and general aviation. Recent advancements in artificial intelligence (AI) and the application of these technologies in different areas of our lives are both intriguing and encouraging. When developed appropriately for the aviation domain, AI techniques provide an opportunity to help design systems that can address rotorcraft safety challenges. Our recent work demonstrated that AI algorithms could use video data from onboard cameras and correctly identify different flight parameters from cockpit gauges, e.g., indicated airspeed. These AI-based techniques provide a potentially cost-effective solution, especially for small helicopter operators, to record the flight state information and perform post-flight analyses. We also showed that carefully designed and trained AI systems could accurately predict rotorcraft attitude (i.e., pitch and yaw) from outside scenes (images or video data). Ordinary off-the-shelf video cameras were installed inside the rotorcraft cockpit to record the outside scene, including the horizon. The AI algorithm could correctly identify rotorcraft attitude with an accuracy in the range of 80%. In this work, we combined five different onboard camera viewpoints to improve attitude prediction accuracy to 94%. The five onboard camera views were the pilot windshield, co-pilot windshield, pilot Electronic Flight Instrument System (EFIS) display, co-pilot EFIS display, and the attitude indicator gauge. Using video data from each camera view, we trained various convolutional neural networks (CNNs), which achieved prediction accuracy in the range of 79% to 90%. We subsequently ensembled the learned knowledge from all CNNs and achieved an ensembled accuracy of 93.3%.
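The abstract does not say how the per-view CNNs are combined. As one common possibility, the sketch below uses soft voting: it averages the class-probability vectors produced by each camera view's model and picks the class with the highest mean. The function name `soft_vote` and the three-class example values are assumptions for illustration only, not the paper's method or data.

```python
def soft_vote(per_view_probs):
    """Average class probabilities across views and return (best_class, mean_probs).

    per_view_probs: one probability vector per camera view, all of the
    same length. Soft voting is an assumed ensembling rule here.
    """
    n_views = len(per_view_probs)
    n_classes = len(per_view_probs[0])
    mean = [
        sum(view[c] for view in per_view_probs) / n_views
        for c in range(n_classes)
    ]
    best = max(range(n_classes), key=mean.__getitem__)
    return best, mean


# Hypothetical outputs from five per-view models for a single frame.
views = [
    [0.6, 0.3, 0.1],  # pilot windshield
    [0.2, 0.5, 0.3],  # co-pilot windshield
    [0.1, 0.7, 0.2],  # pilot EFIS display
    [0.5, 0.4, 0.1],  # co-pilot EFIS display
    [0.3, 0.6, 0.1],  # attitude indicator gauge
]
predicted_class, mean_probs = soft_vote(views)
```

In this made-up example two of the five views favor class 0 on their own, yet the averaged probabilities favor class 1, which shows how an ensemble can override individual views that disagree.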
