Nicky Nirlipta Sahoo

KDPhys: An Attention Guided 3D to 2D Knowledge Distillation for Real-time Video-Based Physiological Measurement

Jan 02, 2026

Abstract:Camera-based physiological monitoring, such as remote photoplethysmography (rPPG), captures subtle variations in skin optical properties caused by pulsatile blood volume changes using standard digital camera sensors. The demand for real-time, non-contact physiological measurement has increased significantly, particularly during the SARS-CoV-2 pandemic, to support telehealth and remote health monitoring applications. In this work, we propose an attention-based knowledge distillation (KD) framework, termed KDPhys, for extracting rPPG signals from facial video sequences. The proposed method distills global temporal representations from a 3D convolutional neural network (CNN) teacher model to a lightweight 2D CNN student model through effective 3D-to-2D feature distillation. To the best of our knowledge, this is the first application of knowledge distillation in the rPPG domain. Furthermore, we introduce a Distortion Loss incorporating Shape and Time (DILATE), which jointly accounts for both morphological and temporal characteristics of rPPG signals. Extensive qualitative and quantitative evaluations are conducted on three benchmark datasets. The proposed model achieves a significant reduction in computational complexity, using only half the parameters of existing methods while operating 56.67% faster. With just 0.23M parameters, it achieves an 18.15% reduction in Mean Absolute Error (MAE) compared to state-of-the-art approaches, attaining an average MAE of 1.78 bpm across all datasets. Additional experiments under diverse environmental conditions and activity scenarios further demonstrate the robustness and adaptability of the proposed approach.

* This paper has been published in Biomedical Signal Processing and Control
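
To make the idea of attention-guided 3D-to-2D feature distillation in the KDPhys abstract more concrete, here is a minimal sketch of one generic way such a loss can be formed. It is not the authors' implementation: the abstract does not specify the attention mechanism, so this sketch assumes a simple activation-based spatial attention map (sum of squared channel activations, L2-normalized) compared frame by frame between teacher and student. The function names `attention_map` and `attention_distillation_loss`, the nested-list feature layout, and the toy values are all hypothetical.

```python
import math


def attention_map(frame):
    """Collapse one frame's feature maps into a flattened spatial attention map.

    `frame` is a list of C channels, each an H x W nested list (assumed layout).
    Summing squared activations over channels removes the channel dimension,
    so teacher and student may use different channel counts.
    """
    height, width = len(frame[0]), len(frame[0][0])
    energy = [
        sum(channel[i][j] ** 2 for channel in frame)
        for i in range(height)
        for j in range(width)
    ]
    norm = math.sqrt(sum(e * e for e in energy))
    # L2-normalize so only the spatial pattern matters, not the magnitude.
    return [e / norm if norm > 0 else 0.0 for e in energy]


def attention_distillation_loss(teacher_frames, student_frames):
    """Mean squared difference between teacher and student attention maps.

    `teacher_frames` holds the 3D teacher's feature volume sliced along time,
    `student_frames` holds the 2D student's per-frame features (assumption).
    """
    if len(teacher_frames) != len(student_frames):
        raise ValueError("teacher and student must cover the same frames")
    total = 0.0
    for t_frame, s_frame in zip(teacher_frames, student_frames):
        t_map = attention_map(t_frame)
        s_map = attention_map(s_frame)
        total += sum((a - b) ** 2 for a, b in zip(t_map, s_map)) / len(t_map)
    return total / len(teacher_frames)


# Toy data (hypothetical): 2 frames, 2x2 spatial grid.
teacher = [
    [[[1.0, 0.0], [0.0, 0.0]], [[1.0, 0.0], [0.0, 0.0]]],  # frame 0, 2 channels
    [[[0.0, 0.0], [0.0, 2.0]], [[0.0, 0.0], [0.0, 0.0]]],  # frame 1, 2 channels
]
student_aligned = [[[[3.0, 0.0], [0.0, 0.0]]], [[[0.0, 0.0], [0.0, 0.5]]]]
student_misaligned = [[[[0.0, 3.0], [0.0, 0.0]]], [[[0.0, 0.0], [0.5, 0.0]]]]

loss_aligned = attention_distillation_loss(teacher, student_aligned)
loss_misaligned = attention_distillation_loss(teacher, student_misaligned)
```

In this toy setup the aligned student attends to the same spatial locations as the teacher and incurs no penalty, while the misaligned student is penalized. In practice such a term would be combined with a signal-level loss on the predicted rPPG waveform.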

Deep learning based non-contact physiological monitoring in Neonatal Intensive Care Unit

Jul 25, 2022

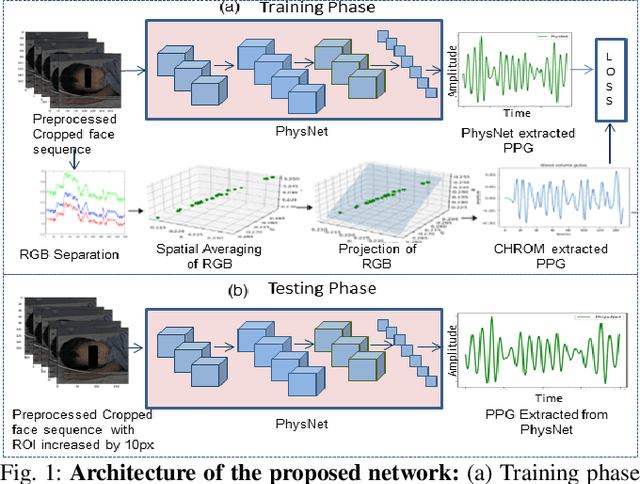

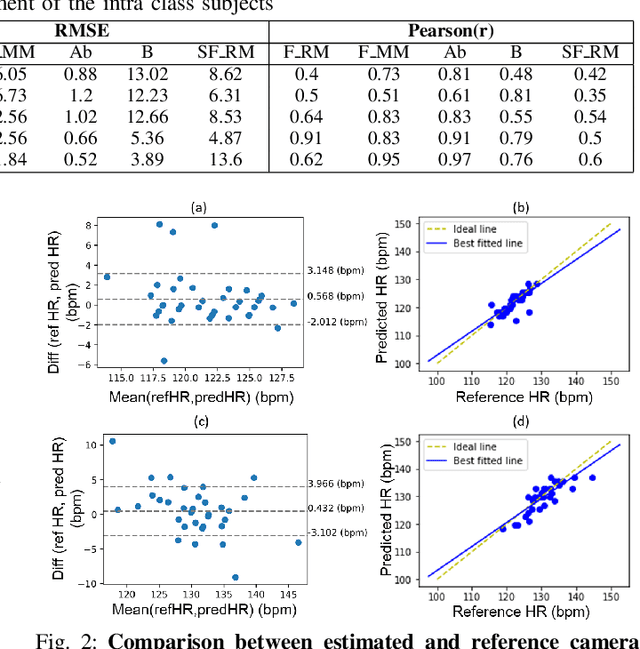

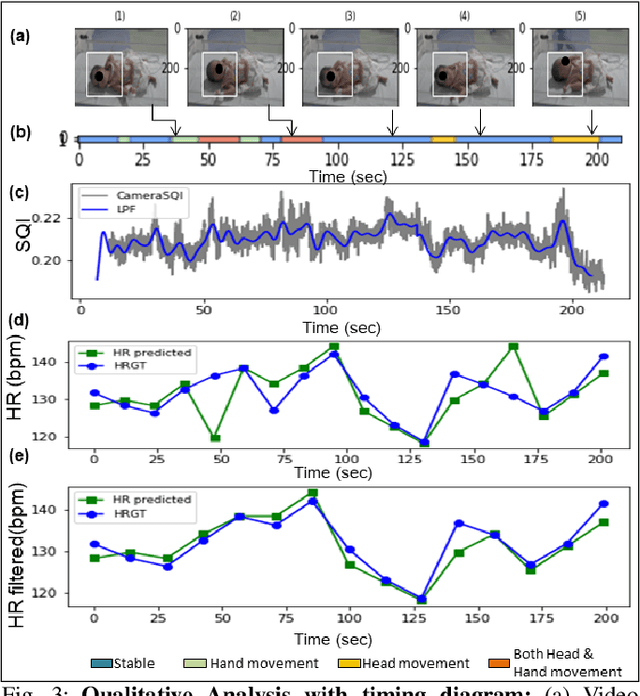

Abstract:Preterm babies in the Neonatal Intensive Care Unit (NICU) have to undergo continuous monitoring of their cardiac health. Conventional monitoring approaches are contact-based, making the neonates prone to various nosocomial infections. Video-based monitoring approaches have opened up potential avenues for contactless measurement. This work presents a pipeline for remote estimation of cardiopulmonary signals from videos in a NICU setup. We propose an end-to-end deep learning (DL) model that integrates a non-learning-based approach to generate surrogate ground truth (SGT) labels for supervision, thus avoiding direct dependence on true ground truth labels. We perform an extended qualitative and quantitative analysis to examine the efficacy of the proposed DL-based pipeline and achieve an overall average mean absolute error of 4.6 beats per minute (bpm) and a root mean square error of 6.2 bpm in the estimated heart rate.
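
For readers unfamiliar with the two error metrics quoted in the abstract, the following minimal sketch shows how mean absolute error and root mean square error are typically computed between estimated and reference heart rates. The function names and the sample heart-rate values are hypothetical and are not taken from the paper.

```python
import math


def mean_absolute_error(estimated, reference):
    """Average absolute difference between paired values, in the same unit (bpm)."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(e - r) for e, r in zip(estimated, reference)) / len(estimated)


def root_mean_square_error(estimated, reference):
    """Square root of the mean squared difference; penalizes large errors more."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_sq = sum((e - r) ** 2 for e, r in zip(estimated, reference)) / len(estimated)
    return math.sqrt(mean_sq)


# Hypothetical heart-rate estimates and reference values in bpm.
estimated_hr = [72.0, 80.0, 65.0]
reference_hr = [70.0, 84.0, 65.0]

mae = mean_absolute_error(estimated_hr, reference_hr)      # (2 + 4 + 0) / 3
rmse = root_mean_square_error(estimated_hr, reference_hr)  # sqrt((4 + 16 + 0) / 3)
```

RMSE is never smaller than MAE on the same data, which is consistent with the 6.2 bpm RMSE and 4.6 bpm MAE reported above.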
