Nick Wright

O-TALC: Steps Towards Combating Oversegmentation within Online Action Segmentation

Apr 10, 2024

Abstract: Online temporal action segmentation shows strong potential to facilitate many human-robot interaction (HRI) tasks in which extended human action sequences must be tracked and understood in real time. Traditional action segmentation approaches, however, operate offline in two stages and rely on computationally expensive video-wide features for segmentation, rendering them unsuitable for online HRI applications. To facilitate online action segmentation on a stream of incoming video data, we introduce two methods for improved training and inference of backbone action recognition models, allowing them to be deployed directly for online frame-level classification. Firstly, we introduce surround dense sampling during training to match training clips to inference clips and improve segment boundary predictions. Secondly, we introduce an Online Temporally Aware Label Cleaning (O-TALC) strategy to explicitly reduce oversegmentation during online inference. As our methods are backbone invariant, they can be deployed with computationally efficient spatio-temporal action recognition models capable of operating in real time with a small segmentation latency. We show that our method outperforms similar online action segmentation work and matches the performance of many offline models with access to full temporal resolution when operating on challenging fine-grained datasets.
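To make the oversegmentation problem concrete, here is a minimal sketch of one generic way to suppress spuriously short segments in a stream of per-frame labels. It is a simple debouncing filter, not the O-TALC strategy itself, whose details the abstract does not give. The function name `debounce_labels` and the parameter `min_len` are hypothetical.

```python
from typing import Hashable, Iterable, Iterator


def debounce_labels(frame_labels: Iterable[Hashable], min_len: int = 3) -> Iterator[Hashable]:
    """Yield one cleaned label per incoming frame label.

    Illustrative assumption, not the paper's algorithm: a new label replaces
    the committed one only after it has been predicted for `min_len`
    consecutive frames; shorter runs are treated as noise.
    """
    if min_len < 1:
        raise ValueError("min_len must be at least 1")
    started = False
    committed = None   # label currently being emitted
    candidate = None   # label that may replace the committed one
    run = 0            # consecutive frames seen for the candidate
    for label in frame_labels:
        if not started:
            committed, started = label, True  # accept the first frame as-is
        if label == committed:
            candidate, run = None, 0          # candidate run was interrupted
        elif label == candidate:
            run += 1
        else:
            candidate, run = label, 1
        if candidate is not None and run >= min_len:
            committed, candidate, run = candidate, None, 0
        yield committed


raw = list("aabaabbbb")                       # 4 segments: a, b, a, b
cleaned = list(debounce_labels(raw, min_len=3))
print("".join(cleaned))
```

The trade-off in this sketch is latency: a genuine label change is only reported once `min_len` frames of it have arrived, so larger values remove more spurious segments at the cost of later boundary detection.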

The Effects of Signal-to-Noise Ratio on Generative Adversarial Networks Applied to Marine Bioacoustic Data

Dec 22, 2023

Abstract: In recent years, generative adversarial networks (GANs) have been used to supplement datasets within the field of marine bioacoustics. This is driven by factors such as the cost of collecting data, data sparsity, and the potential to aid preprocessing. One notable challenge with marine bioacoustic data is its low signal-to-noise ratio (SNR), which poses difficulty when applying deep learning techniques such as GANs. This work investigates the effect of SNR on audio-based GAN performance and examines three different evaluation methodologies for GAN performance, reporting results on the effects of SNR on GANs, specifically WaveGAN.
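For readers unfamiliar with the quantity being varied, the sketch below computes SNR in decibels using the standard definition, 10 log10 of the ratio of mean signal power to mean noise power. It assumes the signal and noise are available as separate sample sequences, which is an illustrative simplification; how the paper estimates SNR is not stated in the abstract. The function names are hypothetical.

```python
import math
from typing import Sequence


def mean_power(samples: Sequence[float]) -> float:
    """Mean squared amplitude of a sequence of audio samples."""
    return sum(x * x for x in samples) / len(samples)


def snr_db(signal: Sequence[float], noise: Sequence[float]) -> float:
    """SNR in decibels: 10 * log10(P_signal / P_noise)."""
    return 10.0 * math.log10(mean_power(signal) / mean_power(noise))


# Toy example: the signal amplitude is ten times the noise amplitude,
# so the power ratio is about 100.
signal = [1.0, -1.0, 1.0, -1.0]
noise = [0.1, -0.1, 0.1, -0.1]
print(round(snr_db(signal, noise), 2))
```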

Towards Automatic Cetacean Photo-Identification: A Framework for Fine-Grain, Few-Shot Learning in Marine Ecology

Dec 07, 2022

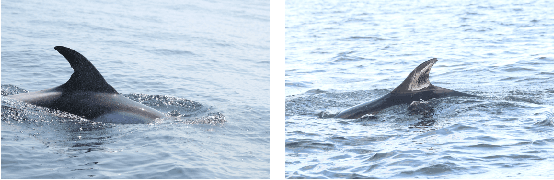

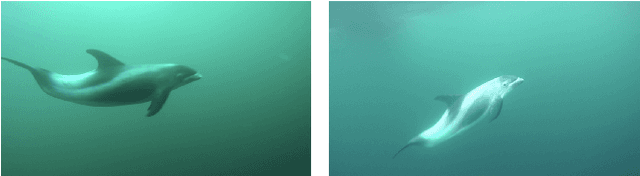

Abstract: Photo-identification (photo-id) is one of the main non-invasive capture-recapture methods utilised by marine researchers for monitoring cetacean (dolphin, whale, and porpoise) populations. This method has historically been performed manually, resulting in high workload and cost due to the vast number of images collected. Recently, automated aids have been developed to help speed up photo-id, although they are often disjoint in their processing and do not utilise all available identifying information. The work presented in this paper aims to create a fully automatic photo-id aid capable of providing the most likely matches based on all available information, without the need for data pre-processing such as cropping. This is achieved through a pipeline of computer vision models and post-processing techniques that detect cetaceans in unedited field imagery before passing them downstream for individual-level catalogue matching. The system can handle previously uncatalogued individuals and flag them for investigation by comparing their similarity to the catalogue. We evaluate the system against multiple real-life photo-id catalogues, achieving mAP@IOU[0.5] of 0.91 and 0.96 for the task of dorsal fin detection on catalogues from Tanzania and the UK respectively, and top-10 accuracy of 83.1% and 97.5% for the task of individual classification on catalogues from the UK and USA.
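The two evaluation quantities quoted above rest on simple building blocks, sketched here under stated assumptions. Intersection over union (IoU) measures how well a predicted box overlaps a ground-truth box, and at a 0.5 threshold a detection counts as correct only if its IoU is at least 0.5. Top-k accuracy is the fraction of queries whose true identity appears among the k highest-ranked candidates. The box format `(x_min, y_min, x_max, y_max)` and the function names are assumptions for illustration, not taken from the paper's code.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), assumed format


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def top_k_accuracy(ranked: Sequence[Sequence[str]], truths: Sequence[str], k: int = 10) -> float:
    """Fraction of queries whose true ID is among the first k ranked IDs."""
    hits = sum(1 for ids, truth in zip(ranked, truths) if truth in ids[:k])
    return hits / len(truths)


# A predicted fin box shifted halfway off the ground-truth box.
iou = box_iou((0, 0, 2, 2), (1, 0, 3, 2))
print(iou >= 0.5)  # would this detection count at the 0.5 threshold?

# Two queries with made-up catalogue IDs, scored at k=2.
ranked = [["id_a", "id_b", "id_c"], ["id_b", "id_c", "id_a"]]
print(top_k_accuracy(ranked, ["id_b", "id_a"], k=2))
```

Mean average precision additionally averages precision over recall levels and classes; that step is omitted here to keep the sketch short.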

Hand Guided High Resolution Feature Enhancement for Fine-Grained Atomic Action Segmentation within Complex Human Assemblies

Nov 24, 2022

Abstract: Due to the rapid and fine-grained nature of atomic actions in complex human assembly, traditional action segmentation approaches that require spatial (and often temporal) downsampling of video frames often lose vital fine-grained spatial and temporal information required for accurate classification within the manufacturing domain. To fully utilise higher resolution video data (often collected within the manufacturing domain) and facilitate accurate real-time action segmentation, as required for human-robot collaboration, we present a novel hand-location-guided, high-resolution feature-enhanced model. We also propose a simple yet effective method of deploying offline-trained action recognition models for real-time action segmentation on temporally short fine-grained actions, through the use of surround sampling during training and temporally aware label cleaning at inference. We evaluate our model on a novel action segmentation dataset containing 24 (+background) atomic actions from video data of a real-world robotics assembly production line. We show that both high-resolution hand features and traditional frame-wide features improve fine-grained atomic action classification, and that through temporally aware label cleaning our model is capable of surpassing similar encoder/decoder methods while allowing for real-time classification.

NDD20: A large-scale few-shot dolphin dataset for coarse and fine-grained categorisation

May 27, 2020

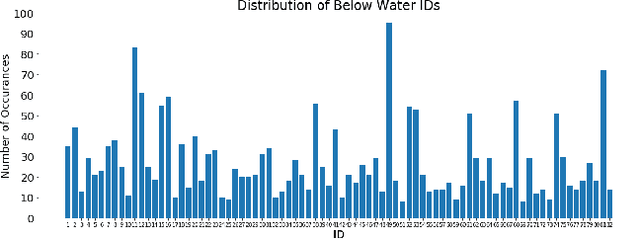

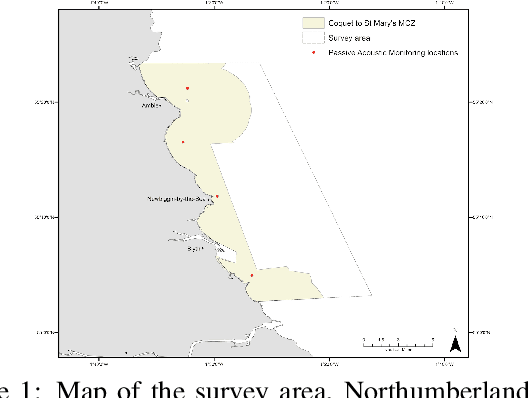

Abstract: We introduce the Northumberland Dolphin Dataset 2020 (NDD20), a challenging image dataset annotated for both coarse and fine-grained instance segmentation and categorisation. This dataset, the first release of the NDD, was created in response to the rapid expansion of computer vision into conservation research and the production of field-deployable systems suited to extreme environmental conditions, an area with few open source datasets. NDD20 contains a large collection of above and below water images of two different dolphin species for traditional coarse and fine-grained segmentation. All data contained in NDD20 was obtained via manual collection in the North Sea around the Northumberland coastline, UK. We present experiments using a standard deep learning network architecture trained on NDD20 and report baseline results.

The Northumberland Dolphin Dataset: A Multimedia Individual Cetacean Dataset for Fine-Grained Categorisation

Aug 07, 2019

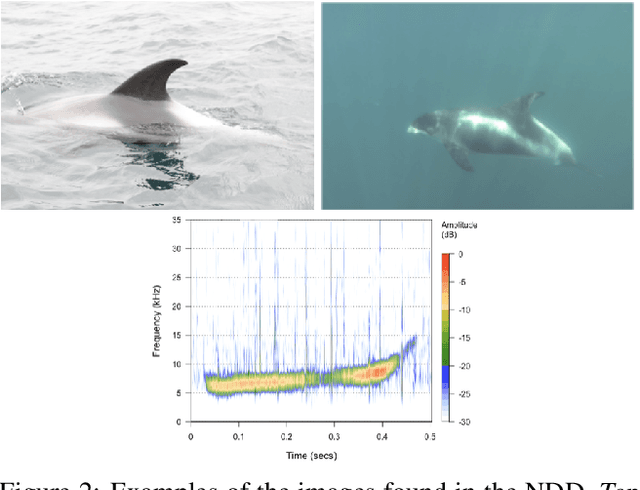

Abstract: Methods for cetacean research include photo-identification (photo-id) and passive acoustic monitoring (PAM), which generate thousands of images per expedition that are currently hand categorised by researchers into the individual dolphins sighted. With the vast amount of data obtained, it is crucial to develop a system that is able to categorise this data quickly. The Northumberland Dolphin Dataset (NDD) is an ongoing novel dataset project made up of above and below water images of, and spectrograms of whistles from, white-beaked dolphins. These are produced by photo-id and PAM data collection methods applied off the coast of Northumberland, UK. This dataset will aid in building cetacean identification models, reducing the number of human-hours required to categorise images. Example use cases and areas identified for speed-up are examined.
