Nguyen Tran Quang

When is Network Lasso Accurate?

Dec 18, 2017

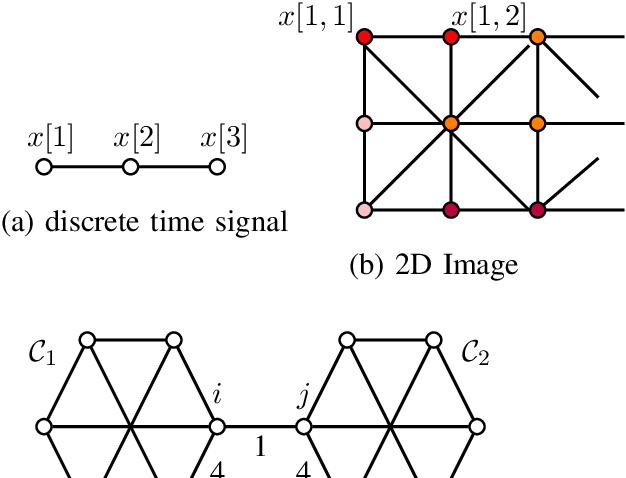

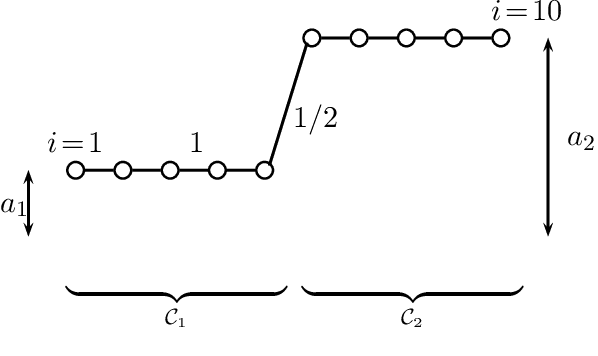

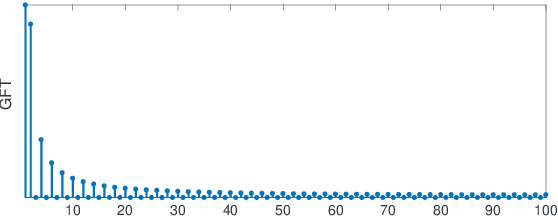

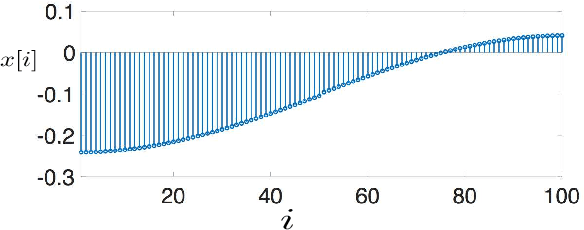

Abstract: The "least absolute shrinkage and selection operator" (Lasso) method has recently been adapted for network-structured datasets. In particular, this network Lasso method makes it possible to learn graph signals from a small number of noisy signal samples by using the total variation of a graph signal for regularization. While efficient and scalable implementations of the network Lasso are available, little is known about the conditions on the underlying network structure that ensure the network Lasso is accurate. By leveraging concepts from compressed sensing, we address this gap and derive precise conditions on the underlying network topology and sampling set which guarantee that the network Lasso, for a particular loss function, delivers an accurate estimate of the entire underlying graph signal. We also quantify the error incurred by the network Lasso in terms of two constants which reflect the connectivity of the sampled nodes.
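As an illustration of the regularizer mentioned in the abstract, the following is a minimal sketch of the total variation of a graph signal, assuming the common definition as a weighted sum of absolute differences across edges. The function name `total_variation`, the edge-list representation, and the example chain graph are assumptions made for this illustration and are not taken from the paper.

```python
def total_variation(signal, edges, weights=None):
    """Weighted sum of |x_i - x_j| over all edges (i, j).

    Assumed definition for illustration; the paper may use a
    different normalization or edge-weight convention.
    """
    if weights is None:
        weights = [1.0] * len(edges)  # unweighted graph by default
    return sum(w * abs(signal[i] - signal[j])
               for (i, j), w in zip(edges, weights))


# Hypothetical chain graph 0 - 1 - 2 - 3.
edges = [(0, 1), (1, 2), (2, 3)]

# A signal that is constant on two clusters changes across one edge only.
piecewise_constant = [1.0, 1.0, 5.0, 5.0]
# A signal that changes across every edge.
oscillating = [1.0, 5.0, 1.0, 5.0]

tv_smooth = total_variation(piecewise_constant, edges)
tv_rough = total_variation(oscillating, edges)
print(tv_smooth, tv_rough)
```

A signal that is constant within well-connected clusters has a small total variation, which is why penalizing this quantity favors clustered graph signals.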

Learning conditional independence structure for high-dimensional uncorrelated vector processes

Sep 13, 2016

Abstract: We formulate and analyze a graphical model selection method for inferring the conditional independence graph of a high-dimensional nonstationary Gaussian random process (time series) from a finite-length observation. The observed process samples are assumed to be uncorrelated over time and to have a time-varying marginal distribution. The selection method is based on testing conditional variances obtained for small subsets of process components. This makes it possible to cope with the high-dimensional regime, where the sample size can be (drastically) smaller than the process dimension. We characterize the sample size required for the proposed selection method to succeed with high probability.
