Nengzhong Yin

AdaDiffSR: Adaptive Region-aware Dynamic Acceleration Diffusion Model for Real-World Image Super-Resolution

Oct 23, 2024

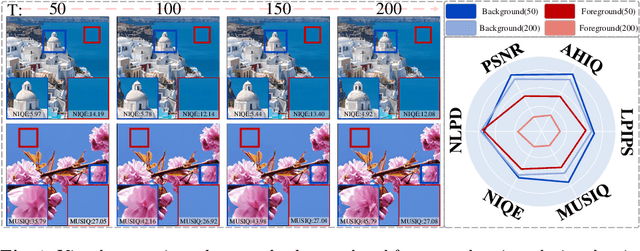

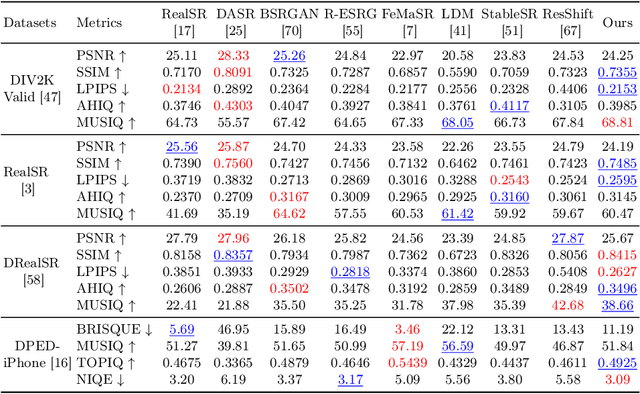

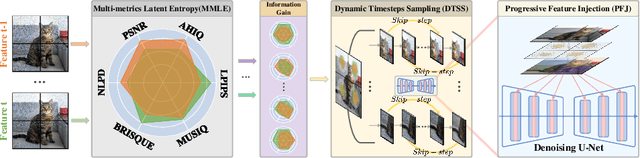

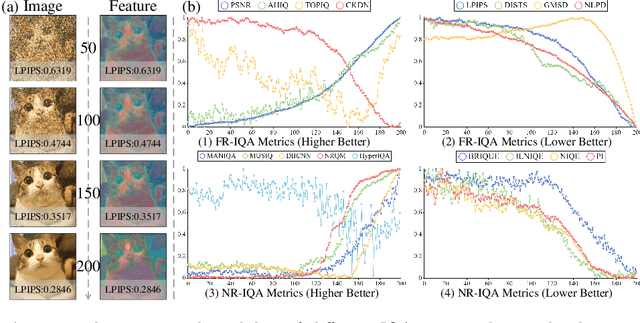

Abstract: Diffusion models (DMs) have shown promising results on single-image super-resolution (SR) and other image-to-image translation tasks. Given more computational resources and longer inference times, they can produce more realistic images. Existing DM-based SR methods try to achieve a uniform average recovery over all regions via iterative refinement, ignoring the fact that different regions of the input image require different numbers of timesteps to reconstruct. In this work, we observe that previous DM-based SR methods waste computational resources reconstructing invisible details. To improve the utilization of computational resources, we propose AdaDiffSR, a DM-based SR pipeline with a dynamic timesteps sampling strategy (DTSS). Specifically, by introducing the multi-metrics latent entropy module (MMLE), we achieve dynamic perception of the latent spatial information gain during the denoising process, which guides the dynamic selection of timesteps. In addition, we adopt a progressive feature injection module (PFJ), which dynamically injects the original image features into the denoising process based on the current information gain, so as to generate images with both fidelity and realism. Experiments show that AdaDiffSR achieves performance comparable to current state-of-the-art DM-based SR methods while consuming fewer computational resources and less inference time on both synthetic and real-world datasets.
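
The idea of spending fewer timesteps on regions that stop gaining information can be illustrated with a toy sketch. This is not the paper's MMLE or DTSS: the abstract does not specify either, so the histogram entropy, the stopping threshold `tol`, and the names `shannon_entropy`, `toy_denoise_step`, and `adaptive_refine` are all assumptions made purely for illustration. Each region is refined until the change in its entropy between consecutive steps falls below `tol`.

```python
import math
from typing import Callable, List, Sequence, Tuple


def shannon_entropy(values: Sequence[float], bins: int = 8,
                    lo: float = 0.0, hi: float = 1.0) -> float:
    """Histogram-based Shannon entropy (in bits) over a fixed range [lo, hi]."""
    counts = [0] * bins
    for v in values:
        idx = int((v - lo) / (hi - lo) * bins)
        counts[max(0, min(idx, bins - 1))] += 1  # clamp out-of-range values
    n = len(values)
    return -sum(c / n * math.log2(c / n) for c in counts if c)


def toy_denoise_step(region: List[float], t: int) -> List[float]:
    """Hypothetical stand-in for a denoiser: pull values halfway to the mean."""
    mean = sum(region) / len(region)
    return [mean + 0.5 * (v - mean) for v in region]


def adaptive_refine(
    regions: Sequence[Sequence[float]],
    step_fn: Callable[[List[float], int], List[float]],
    max_steps: int = 50,
    tol: float = 1e-3,
) -> Tuple[List[List[float]], List[int]]:
    """Refine each region until its entropy change drops below tol.

    Assumed stopping rule (illustrative only): a region whose entropy no
    longer changes is treated as having no further information gain.
    """
    outputs, steps_used = [], []
    for region in regions:
        current = list(region)
        prev_h = shannon_entropy(current)
        used = 0
        for t in range(max_steps):
            current = step_fn(current, t)
            used += 1
            h = shannon_entropy(current)
            if abs(h - prev_h) < tol:
                break  # no measurable gain: stop spending steps here
            prev_h = h
        outputs.append(current)
        steps_used.append(used)
    return outputs, steps_used


if __name__ == "__main__":
    flat = [0.5] * 10                                # uniform region
    textured = [0.05 + 0.1 * i for i in range(10)]   # varied region
    _, steps = adaptive_refine([flat, textured], toy_denoise_step)
    print("steps per region:", steps)
```

In this toy setting the flat region stops after a single step, while the textured region keeps refining for longer, which mirrors the motivation in the abstract: a fixed global schedule would have spent the same number of steps on both.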
