Nele Russwinkel

Talking Like One of Us: Effects of Using Regional Language in a Humanoid Social Robot

Dec 06, 2024

Abstract: Social robots are becoming more and more perceptible in public service settings. For engaging people in a natural environment a smooth social interaction as well as acceptance by the users are important issues for future successful Human-Robot Interaction (HRI). The type of verbal communication has a special significance here. In this paper we investigate the effects of spoken language varieties of a non-standard/regional language compared to standard language. More precisely we compare a human dialog with a humanoid social robot Pepper where the robot on the one hand is answering in High German and on the other hand in Low German, a regional language that is understood and partly still spoken in the northern parts of Germany. The content of what the robot says remains the same in both variants. We are interested in the effects that these two different ways of robot talk have on human interlocutors who are more or less familiar with Low German in terms of perceived warmth, competence and possible discomfort in conversation against a background of cultural identity. To measure these factors we use the Robotic Social Attributes Scale (RoSAS) on 17 participants with an age ranging from 19 to 61. Our results show that significantly higher warmth is perceived in the Low German version of the conversation.

Project Report: Requirements for a Social Robot as an Information Provider in the Public Sector

Dec 06, 2024

Abstract: Is it possible to integrate a humanoid social robot into the work processes or customer care in an official environment, e.g. in municipal offices? If so, what could such an application scenario look like and what skills would the robot need to have when interacting with human customers? What are requirements for this kind of interactions? We have devised an application scenario for such a case, determined the necessary or desirable capabilities of the robot, developed a corresponding robot application and carried out initial tests and evaluations in a project together with the Kiel City Council. One of the most important insights gained in the project was that a humanoid robot with natural language processing capabilities based on large language models as well as human-like gestures and posture changes (animations) proved to be much more preferred by users compared to standard browser-based solutions on tablets for an information system in the City Council. Furthermore, we propose a connection of the ACT-R cognitive architecture with the robot, where an ACT-R model is used in interaction with the robot application to cognitively process and enhance a dialogue between human and robot.

Towards autonomous artificial agents with an active self: modeling sense of control in situated action

Dec 10, 2021

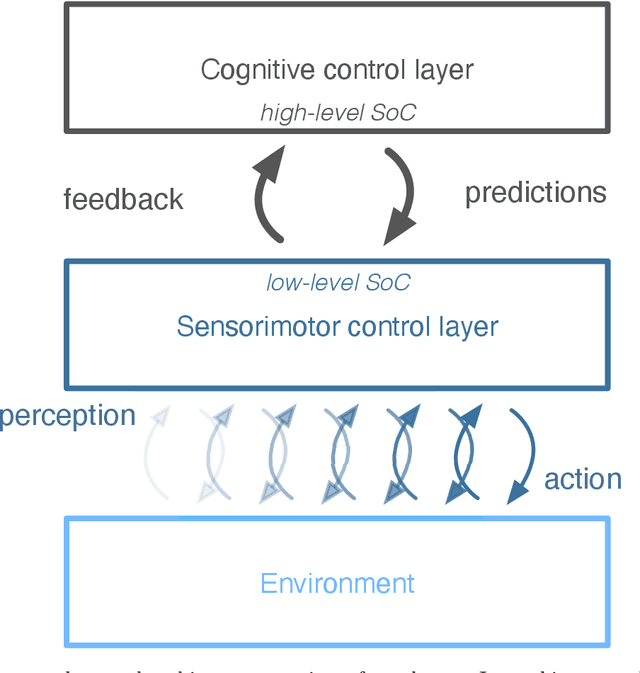

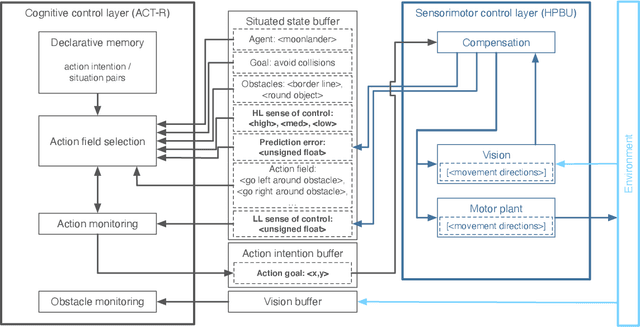

Abstract: In this paper we present a computational modeling account of an active self in artificial agents. In particular we focus on how an agent can be equipped with a sense of control and how it arises in autonomous situated action and, in turn, influences action control. We argue that this requires laying out an embodied cognitive model that combines bottom-up processes (sensorimotor learning and fine-grained adaptation of control) with top-down processes (cognitive processes for strategy selection and decision-making). We present such a conceptual computational architecture based on principles of predictive processing and free energy minimization. Using this general model, we describe how a sense of control can form across the levels of a control hierarchy and how this can support action control in an unpredictable environment. We present an implementation of this model as well as first evaluations in a simulated task scenario, in which an autonomous agent has to cope with un-/predictable situations and experiences corresponding sense of control. We explore different model parameter settings that lead to different ways of combining low-level and high-level action control. The results show the importance of appropriately weighting information in situations where the need for low/high-level action control varies and they demonstrate how the sense of control can facilitate this.
