Navid Shervani-Tabar

Oja's plasticity rule overcomes several challenges of training neural networks under biological constraints

Aug 15, 2024

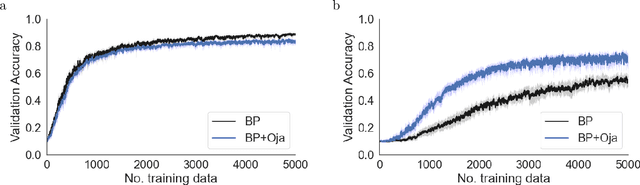

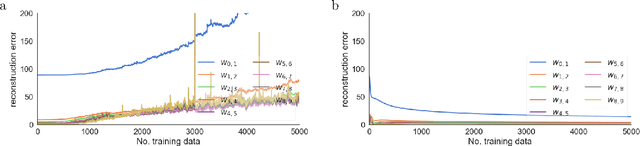

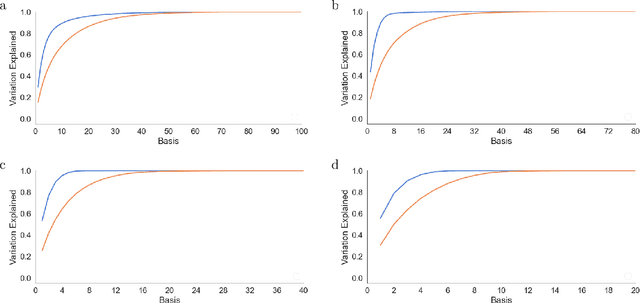

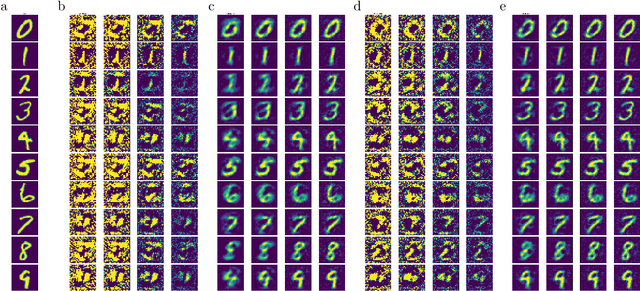

Abstract: There is a large literature on the similarities and differences between biological neural circuits and deep artificial neural networks (DNNs). However, modern training of DNNs relies on several engineering tricks such as data batching, normalization, adaptive optimizers, and precise weight initialization. Despite their critical role in training DNNs, these engineering tricks are often overlooked when drawing parallels between biological and artificial networks, potentially due to a lack of evidence for their direct biological implementation. In this study, we show that Oja's plasticity rule partly overcomes the need for some engineering tricks. Specifically, under difficult but biologically realistic learning scenarios such as online learning, deep architectures, and sub-optimal weight initialization, Oja's rule can substantially improve the performance of pure backpropagation. Our results demonstrate that simple synaptic plasticity rules can overcome challenges to learning that are typically addressed with less biologically plausible approaches when training DNNs.
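For readers unfamiliar with Oja's rule: in its classic single-neuron form, a linear unit $y = w^\top x$ updates its weights by $\Delta w = \eta\, y\,(x - y\,w)$. This is a Hebbian term plus a decay that keeps $\lVert w \rVert$ near 1. The minimal NumPy sketch below shows only that textbook rule learning online, one sample at a time. It is not the paper's combination of Oja's rule with backpropagation in deep networks. The toy data, learning rate, and the helper name `oja_update` are illustrative assumptions.

```python
import numpy as np

def oja_update(w, x, lr):
    """One online step of Oja's rule for a single linear neuron y = w . x."""
    y = w @ x
    # Hebbian term lr*y*x plus the decay term -lr*y**2*w, which keeps ||w|| near 1.
    return w + lr * y * (x - y * w)

rng = np.random.default_rng(0)

# Toy 2-D inputs (an assumption): covariance [[3, 2], [2, 3]] has its largest
# eigenvalue (5) along the direction (1, 1) / sqrt(2).
cov = np.array([[3.0, 2.0], [2.0, 3.0]])
data = rng.multivariate_normal(mean=[0.0, 0.0], cov=cov, size=5000)
top_axis = np.array([1.0, 1.0]) / np.sqrt(2.0)

w = rng.normal(size=2)
for x in data:  # online learning: one sample per update, no batching
    w = oja_update(w, x, lr=0.005)

norm_w = np.linalg.norm(w)
cos_top = abs(w @ top_axis) / norm_w
print(f"||w|| = {norm_w:.3f}, |cos(w, top axis)| = {cos_top:.3f}")
```

With this data, the weight norm settles near 1 and $w$ aligns with the leading principal axis. This self-normalizing behavior distinguishes Oja's rule from a plain Hebbian update, whose weights grow without bound.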

Meta-Learning Biologically Plausible Plasticity Rules with Random Feedback Pathways

Nov 07, 2022

Abstract: Backpropagation is widely used to train artificial neural networks, but its relationship to synaptic plasticity in the brain is unknown. Some biological models of backpropagation rely on feedback projections that are symmetric with feedforward connections, but experiments do not corroborate the existence of such symmetric backward connectivity. Random feedback alignment offers an alternative model in which errors are propagated backward through fixed, random backward connections. This approach successfully trains shallow models, but learns slowly and does not perform well with deeper models or online learning. In this study, we develop a novel meta-plasticity approach to discover interpretable, biologically plausible plasticity rules that improve online learning performance with fixed random feedback connections. The resulting plasticity rules show improved online training of deep models in the low data regime. Our results highlight the potential of meta-plasticity to discover effective, interpretable learning rules satisfying biological constraints.
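To make "fixed, random backward connections" concrete: in random feedback alignment, the backward pass sends the output error to the hidden layer through a fixed random matrix `B` instead of the transpose of the forward weights `W2`. The minimal sketch below shows only this baseline for a two-layer network trained online on a toy regression task. It does not include the meta-learned plasticity rules described in the abstract. The layer sizes, learning rate, toy teacher `T`, and all variable names are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n_in, n_hid, n_out = 10, 32, 2

# Hypothetical teacher: a fixed random linear map the network has to learn.
T = rng.normal(size=(n_out, n_in)) / np.sqrt(n_in)

W1 = rng.normal(size=(n_hid, n_in)) / np.sqrt(n_in)    # forward weights, layer 1
W2 = rng.normal(size=(n_out, n_hid)) / np.sqrt(n_hid)  # forward weights, layer 2
B = rng.normal(size=(n_hid, n_out)) / np.sqrt(n_out)   # fixed random feedback, never trained

X_test = rng.normal(size=(500, n_in))
Y_test = X_test @ T.T

def test_loss():
    """Mean squared error of the network on a held-out toy test set."""
    H = np.tanh(X_test @ W1.T)
    return np.mean((H @ W2.T - Y_test) ** 2)

loss_before = test_loss()
lr = 0.01
for _ in range(20000):  # online learning: a single fresh sample per update
    x = rng.normal(size=n_in)
    h = np.tanh(W1 @ x)
    e = W2 @ h - T @ x                # output error
    delta_h = (B @ e) * (1.0 - h**2)  # error routed through B, not W2.T
    W2 -= lr * np.outer(e, h)
    W1 -= lr * np.outer(delta_h, x)
loss_after = test_loss()
print(f"test MSE: {loss_before:.3f} -> {loss_after:.3f}")
```

If you replace `B` with `W2.T` in the `delta_h` line, you recover ordinary backpropagation for this network. That gives a convenient baseline for comparison.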

Physics-Constrained Predictive Molecular Latent Space Discovery with Graph Scattering Variational Autoencoder

Sep 29, 2020

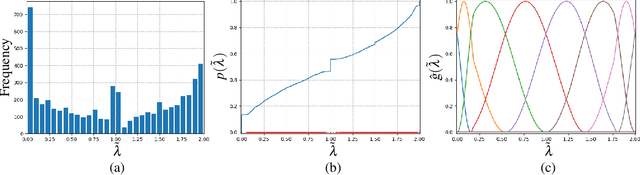

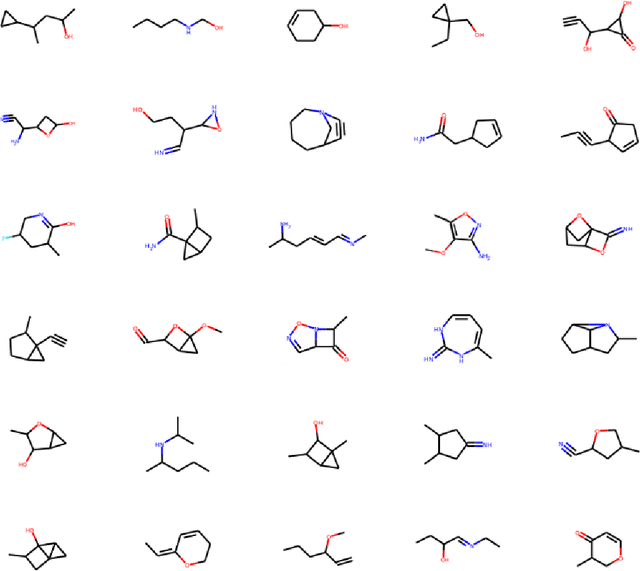

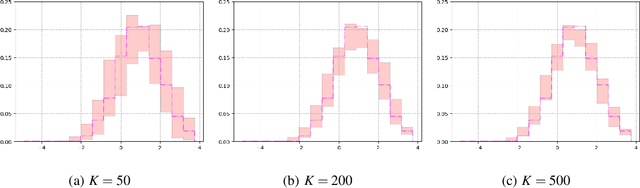

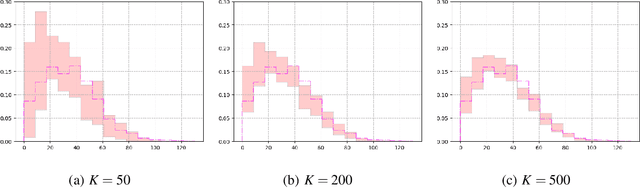

Abstract: Recent advances in artificial intelligence have propelled the development of innovative computational materials modeling and design techniques. In particular, generative deep learning models have been used for molecular representation, discovery, and design, with applications ranging from drug discovery to solar cell development. In this work, we assess the predictive capabilities of a molecular generative model based on variational inference and graph theory. The encoder network is based on the scattering transform, which helps the model generalize better when training data are limited. The scattering layers incorporate adaptive spectral filters that are tailored to the training dataset based on the spectra of the molecular graphs. The decoding network is a one-shot graph generative model that conditions atom types on molecular topology. We present a quantitative assessment of the latent space in terms of its predictive ability for organic molecules in the QM9 dataset. To account for the limited size of the training dataset, we adopt a Bayesian formalism that allows us to capture the uncertainties in the predicted properties.
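The sketch below illustrates what a spectral graph scattering encoder computes. It eigendecomposes the graph Laplacian, builds band-pass wavelets $\Psi_j = U\, g_j(\Lambda)\, U^\top$, applies them with a modulus nonlinearity, and averages over nodes. The abstract describes adaptive filters tailored to the dataset. In contrast, this minimal sketch assumes fixed, hand-picked filters $g_s(\lambda) = s\tilde\lambda\, e^{-s\tilde\lambda}$ with $\tilde\lambda = \lambda / \lambda_{\max}$. The scales, the toy phenol-like heavy-atom graph, the atomic-number node signal, and the function names are all hypothetical.

```python
import numpy as np

def spectral_wavelets(A, scales):
    """Band-pass wavelets g_s(L) built from the combinatorial Laplacian spectrum."""
    L = np.diag(A.sum(axis=1)) - A
    lam, U = np.linalg.eigh(L)
    t = lam / lam.max()  # rescale eigenvalues to [0, 1]
    return [U @ np.diag(s * t * np.exp(-s * t)) @ U.T for s in scales]

def scattering_features(A, x, scales=(1.0, 2.0, 4.0, 8.0)):
    """Zeroth-, first- and second-order scattering coefficients, averaged over nodes."""
    psis = spectral_wavelets(A, scales)
    feats = [x.mean()]                                 # order 0
    first = [np.abs(P @ x) for P in psis]              # |Psi_j x|
    feats += [u.mean() for u in first]                 # order 1
    for u in first:
        feats += [np.abs(P @ u).mean() for P in psis]  # order 2: |Psi_k |Psi_j x||
    return np.array(feats)

# Toy graph: a 6-ring with one substituent (phenol heavy atoms), signal = atomic number.
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (5, 0), (0, 6)]
A = np.zeros((7, 7))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0
x = np.array([6, 6, 6, 6, 6, 6, 8], dtype=float)

phi = scattering_features(A, x)
perm = np.random.default_rng(0).permutation(7)
phi_perm = scattering_features(A[np.ix_(perm, perm)], x[perm])
print(phi.shape, np.allclose(phi, phi_perm))
```

Because every wavelet is a function of the Laplacian and the final step averages over nodes, relabeling the atoms leaves the feature vector unchanged. This ordering-independence is what makes such coefficients usable as a fixed-length molecular encoding.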
