Nathan Larson

Continuous Mean-Zero Disagreement-Regularized Imitation Learning (CMZ-DRIL)

Mar 02, 2024

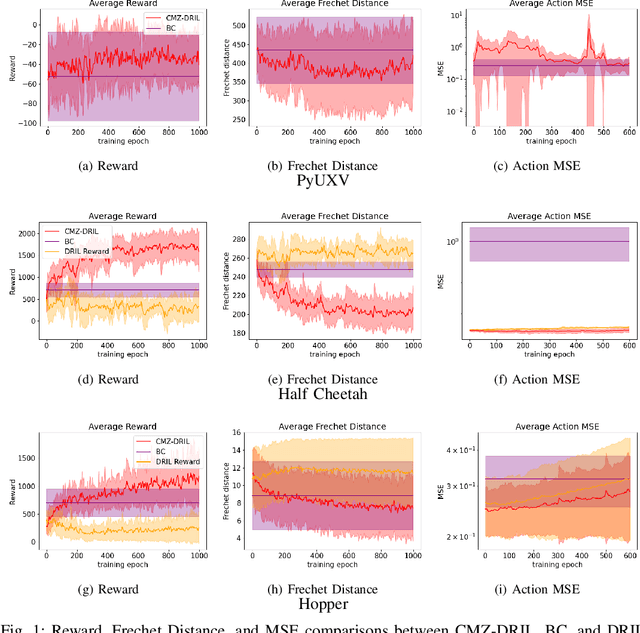

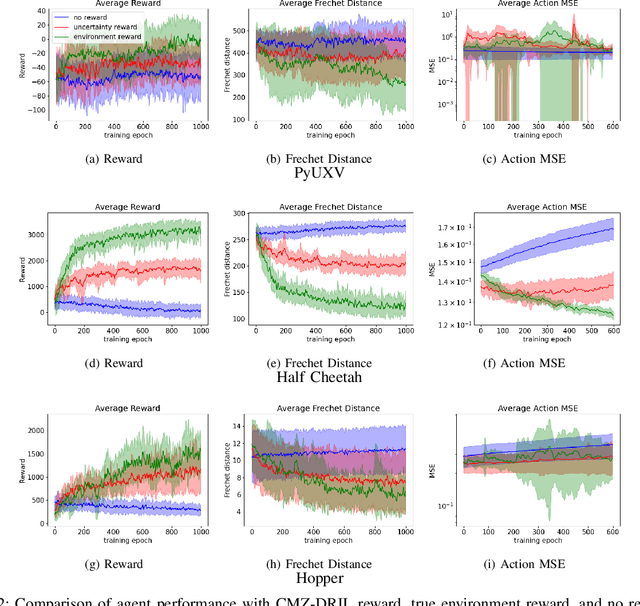

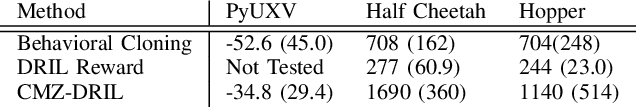

Abstract: Machine-learning paradigms such as imitation learning and reinforcement learning can generate highly performant agents in a variety of complex environments. However, commonly used methods require large quantities of data, a known reward function, or both. This paper presents a method called Continuous Mean-Zero Disagreement-Regularized Imitation Learning (CMZ-DRIL) that employs a novel reward structure to improve the performance of imitation-learning agents that have access to only a handful of expert demonstrations. CMZ-DRIL uses reinforcement learning to minimize uncertainty among an ensemble of agents trained to model the expert demonstrations. The method does not use any environment-specific rewards; instead, it creates a continuous, mean-zero reward function from the action disagreement of the agent ensemble. As demonstrated in a waypoint-navigation environment and in two MuJoCo environments, CMZ-DRIL can generate performant agents that behave more similarly to the expert than the primary previous approaches on several key metrics.
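To make the reward idea concrete, the following is a minimal sketch of how a continuous, mean-zero reward could be derived from ensemble action disagreement. The abstract does not give the exact formula, so everything here is an assumption for illustration: disagreement is taken as the variance of the ensemble's actions summed over action dimensions, and the reward is the negated disagreement centered on its batch mean. The function names `disagreement` and `mean_zero_rewards` are hypothetical and do not come from the paper.

```python
import numpy as np


def disagreement(ensemble_actions):
    """Disagreement for one state.

    ensemble_actions: array of shape (n_members, action_dim), one action
    per ensemble member. Assumption: disagreement is the per-dimension
    variance across members, summed over action dimensions.
    """
    return float(np.var(ensemble_actions, axis=0).sum())


def mean_zero_rewards(disagreements):
    """Map a batch of disagreement values to continuous rewards with zero mean.

    Assumption: negate so that lower disagreement earns a higher reward,
    then subtract the batch mean so the rewards average to zero.
    """
    d = np.asarray(disagreements, dtype=float)
    return -(d - d.mean())


# Two illustrative states: one where the ensemble agrees, one where it does not.
acts_agree = np.array([[0.1, 0.2], [0.1, 0.2], [0.1, 0.2]])
acts_split = np.array([[0.0, 0.0], [1.0, 1.0], [-1.0, -1.0]])

batch = [disagreement(acts_agree), disagreement(acts_split)]
rewards = mean_zero_rewards(batch)
```

Under these assumptions, the state where the ensemble agrees receives a positive reward and the state where it disagrees receives a negative one, so a reinforcement-learning agent is pushed toward states that the demonstrations cover well. The paper's actual normalization and disagreement measure may differ.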
