Nandhini Swaminathan

Bias Mitigation via Compensation: A Reinforcement Learning Perspective

Apr 30, 2024

Abstract: As AI increasingly integrates with human decision-making, we must carefully consider interactions between the two. In particular, current approaches focus on optimizing individual agent actions but often overlook the nuances of collective intelligence. Group dynamics might require that one agent (e.g., the AI system) compensate for biases and errors in another agent (e.g., the human), but this compensation should be carefully developed. We provide a theoretical framework for algorithmic compensation that synthesizes game theory and reinforcement learning principles to demonstrate the natural emergence of deceptive outcomes from the continuous learning dynamics of agents. We provide simulation results involving Markov Decision Processes (MDP) learning to interact. This work then underpins our ethical analysis of the conditions in which AI agents should adapt to biases and behaviors of other agents in dynamic and complex decision-making environments. Overall, our approach addresses the nuanced role of strategic deception of humans, challenging previous assumptions about its detrimental effects. We assert that compensation for others' biases can enhance coordination and ethical alignment: strategic deception, when ethically managed, can positively shape human-AI interactions.

Application of the NIST AI Risk Management Framework to Surveillance Technology

Mar 22, 2024

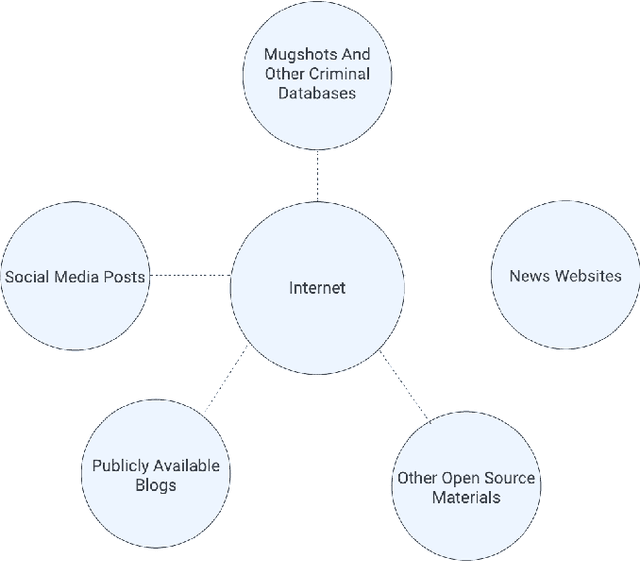

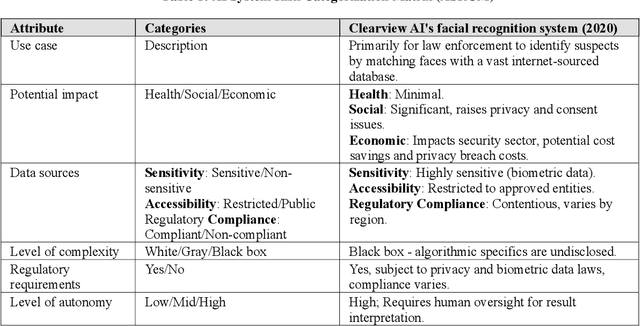

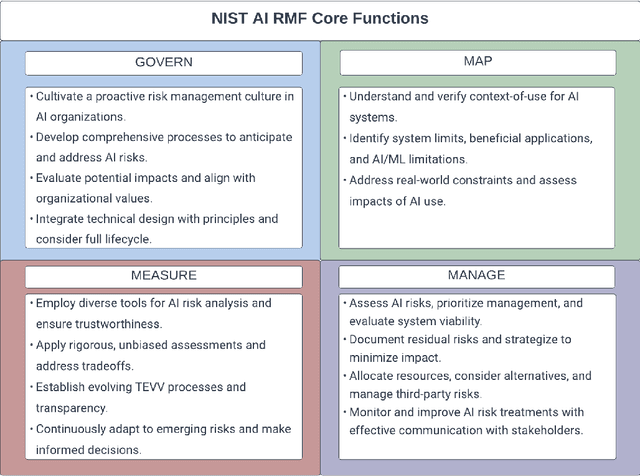

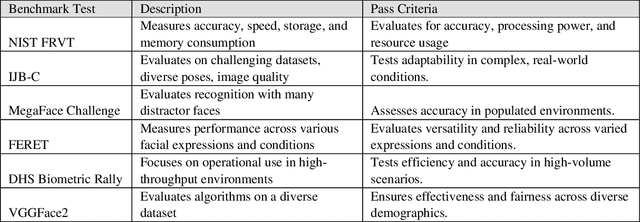

Abstract: This study offers an in-depth analysis of the application and implications of the National Institute of Standards and Technology's AI Risk Management Framework (NIST AI RMF) within the domain of surveillance technologies, particularly facial recognition technology. Given the inherently high-risk and consequential nature of facial recognition systems, our research emphasizes the critical need for a structured approach to risk management in this sector. The paper presents a detailed case study demonstrating the utility of the NIST AI RMF in identifying and mitigating risks that might otherwise remain unnoticed in these technologies. Our primary objective is to develop a comprehensive risk management strategy that advances the practice of responsible AI utilization in feasible, scalable ways. We propose a six-step process tailored to the specific challenges of surveillance technology that aims to produce a more systematic and effective risk management practice. This process emphasizes continual assessment and improvement to facilitate companies in managing AI-related risks more robustly and ensuring ethical and responsible deployment of AI systems. Additionally, our analysis uncovers and discusses critical gaps in the current framework of the NIST AI RMF, particularly concerning its application to surveillance technologies. These insights contribute to the evolving discourse on AI governance and risk management, highlighting areas for future refinement and development in frameworks like the NIST AI RMF.
