Namwoo Kim

How Well Do Vision-Language Models Understand Cities? A Comparative Study on Spatial Reasoning from Street-View Images

Aug 29, 2025

Abstract: Effectively understanding urban scenes requires fine-grained spatial reasoning about objects, layouts, and depth cues. However, how well current vision-language models (VLMs), pretrained on general scenes, transfer these abilities to the urban domain remains underexplored. To address this gap, we conduct a comparative study of three off-the-shelf VLMs (BLIP-2, InstructBLIP, and LLaVA-1.5), evaluating both zero-shot performance and the effects of fine-tuning with a synthetic VQA dataset specific to urban scenes. We construct this dataset from segmentation, depth, and object detection predictions of street-view images, pairing each question with LLM-generated Chain-of-Thought (CoT) answers for step-by-step reasoning supervision. Results show that while VLMs perform reasonably well in zero-shot settings, fine-tuning with our synthetic CoT-supervised dataset substantially boosts performance, especially for challenging question types such as negation and counterfactuals. This study introduces urban spatial reasoning as a new challenge for VLMs and demonstrates synthetic dataset construction as a practical path for adapting general-purpose models to specialized domains.
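As a rough illustration of how a question could be derived from detection and depth predictions, the sketch below builds one "which is closer" item from two detected objects. The `Detection` class, its `depth_m` field, and the question template are hypothetical and are not taken from the paper; in the paper the reasoning text is generated by an LLM, whereas here the `facts` string only stands in for the material such a model might be given.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str      # object class predicted by a detector
    box: tuple      # (x_min, y_min, x_max, y_max) in pixels
    depth_m: float  # hypothetical: predicted depth of the object in metres


def closer_object_question(a: Detection, b: Detection) -> dict:
    """Build one 'which is closer' question from two detections."""
    # The object with the smaller predicted depth is nearer to the camera.
    nearer = a if a.depth_m < b.depth_m else b
    question = f"Which is closer to the camera, the {a.label} or the {b.label}?"
    facts = (
        f"The {a.label} is about {a.depth_m:.1f} m away and "
        f"the {b.label} is about {b.depth_m:.1f} m away."
    )
    return {"question": question, "facts": facts, "answer": nearer.label}


car = Detection("car", (40, 120, 200, 260), 8.0)
tree = Detection("tree", (300, 20, 380, 240), 15.0)
qa = closer_object_question(car, tree)
```

Negation or counterfactual variants could in principle reuse the same predictions with different templates, although how the paper actually phrases them is not stated in the abstract.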

TopoCL: Topological Contrastive Learning for Time Series

Feb 05, 2025

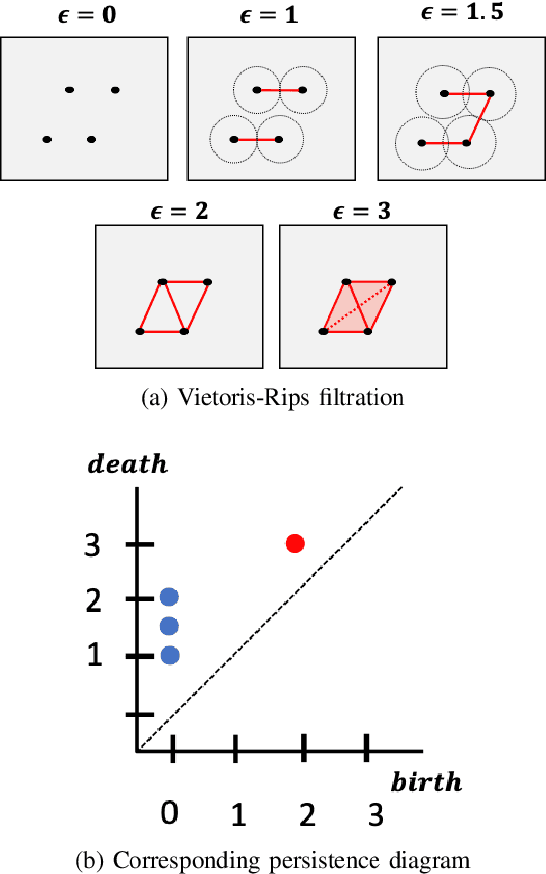

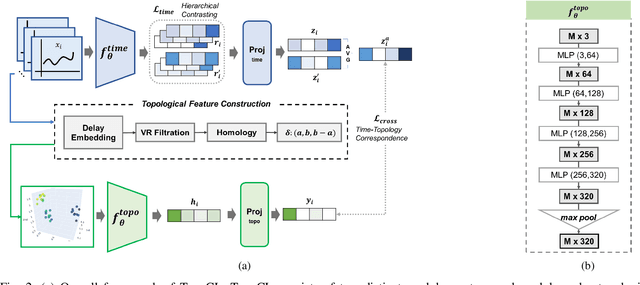

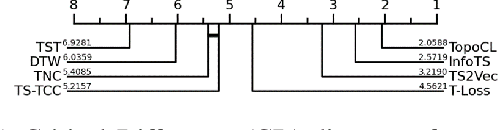

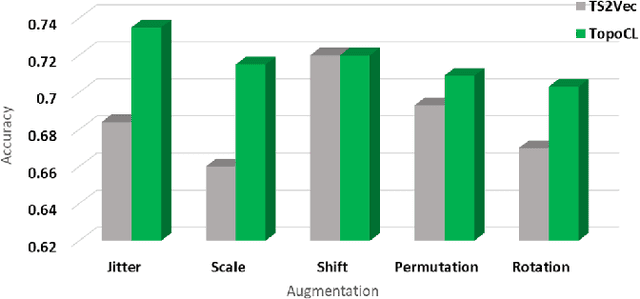

Abstract: Universal time series representation learning is challenging but valuable in real-world applications such as classification, anomaly detection, and forecasting. Recently, contrastive learning (CL) has been actively explored to tackle time series representation. However, a key challenge is that the data augmentation process in CL can distort seasonal patterns or temporal dependencies, inevitably leading to a loss of semantic information. To address this challenge, we propose Topological Contrastive Learning for time series (TopoCL). TopoCL mitigates such information loss by incorporating persistent homology, which captures the topological characteristics of data that remain invariant under transformations. In this paper, we treat the temporal and topological properties of time series data as distinct modalities. Specifically, we compute persistent homology to construct topological features of time series data, representing them in persistence diagrams. We then design a neural network to encode these persistence diagrams. Our approach jointly optimizes CL within the time modality and time-topology correspondence, promoting a comprehensive understanding of both temporal semantics and topological properties of time series. We conduct extensive experiments on four downstream tasks: classification, anomaly detection, forecasting, and transfer learning. The results demonstrate that TopoCL achieves state-of-the-art performance.
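To make the idea of a persistence diagram concrete, here is a minimal sketch that computes 0-dimensional persistence pairs for a one-dimensional series. It assumes a sublevel-set filtration, which the abstract does not specify and which may differ from the construction used in TopoCL; the function name `sublevel_persistence` is illustrative.

```python
def sublevel_persistence(series):
    """Return 0-dimensional (birth, death) pairs for a 1-D series."""
    if not series:
        return []
    n = len(series)
    parent = [None] * n  # None marks a point that has not been added yet
    birth = {}           # component root -> value at which it was born

    def find(i):
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    pairs = []
    # Add points from the lowest value to the highest.
    for i in sorted(range(n), key=lambda k: series[k]):
        parent[i] = i
        birth[i] = series[i]
        for j in (i - 1, i + 1):
            if 0 <= j < n and parent[j] is not None:
                ri, rj = find(i), find(j)
                if ri == rj:
                    continue
                # Elder rule: the component born later dies at this value.
                old, young = (ri, rj) if birth[ri] <= birth[rj] else (rj, ri)
                if birth[young] < series[i]:
                    pairs.append((birth[young], series[i]))
                parent[young] = old
    # The component born at the global minimum never dies.
    pairs.append((min(series), float("inf")))
    return pairs


diagram = sublevel_persistence([0.0, 2.0, 1.0, 3.0, 0.5])
# diagram == [(1.0, 2.0), (0.5, 3.0), (0.0, inf)]
```

Each pair records a local minimum (birth) and the height at which its valley merges into a deeper one (death). A diagram like this is the kind of object a topology encoder would take as input.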

MobiCLR: Mobility Time Series Contrastive Learning for Urban Region Representations

Feb 05, 2025

Abstract: Recently, learning effective representations of urban regions has gained significant attention as a key approach to understanding urban dynamics and advancing smarter cities. Existing approaches have demonstrated the potential of leveraging mobility data to generate latent representations, providing valuable insights into the intrinsic characteristics of urban areas. However, incorporating the temporal dynamics and detailed semantics inherent in human mobility patterns remains underexplored. To address this gap, we propose a novel urban region representation learning model, Mobility Time Series Contrastive Learning for Urban Region Representations (MobiCLR), designed to capture semantically meaningful embeddings from inflow and outflow mobility patterns. MobiCLR uses contrastive learning to enhance the discriminative power of its representations, applying an instance-wise contrastive loss to capture distinct flow-specific characteristics. Additionally, we develop a regularizer to align output features with these flow-specific representations, enabling a more comprehensive understanding of mobility dynamics. To validate our model, we conduct extensive experiments on Chicago, New York, and Washington, D.C., predicting income, educational attainment, and social vulnerability. The results demonstrate that our model outperforms state-of-the-art models.
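For readers unfamiliar with instance-wise contrastive losses, the following is a minimal sketch of a generic InfoNCE-style loss in plain Python. It is not MobiCLR's actual objective: the pairing of row `i` in `anchors` with row `i` in `positives`, the cosine similarity, and the `temperature` value are all assumptions made for illustration.

```python
import math


def info_nce(anchors, positives, temperature=0.1):
    """Mean InfoNCE loss; row i of each list forms a positive pair."""

    def normalize(v):
        norm = math.sqrt(sum(x * x for x in v)) or 1.0
        return [x / norm for x in v]

    a = [normalize(v) for v in anchors]
    p = [normalize(v) for v in positives]
    total = 0.0
    for i, ai in enumerate(a):
        # Cosine similarity of anchor i to every candidate, scaled by temperature.
        logits = [sum(x * y for x, y in zip(ai, pj)) / temperature for pj in p]
        # Numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        # Negative log-probability of picking the matching candidate.
        total += log_denominator - logits[i]
    return total / len(a)


views = [[1.0, 0.0], [0.0, 1.0]]
matched = info_nce(views, views)
mismatched = info_nce(views, [[0.0, 1.0], [1.0, 0.0]])
```

The loss is small when each instance is most similar to its own counterpart (`matched`) and large when it is more similar to another instance (`mismatched`), which is what pushes the embeddings of different regions apart.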

Effective Urban Region Representation Learning Using Heterogeneous Urban Graph Attention Network (HUGAT)

Feb 18, 2022

Abstract: Revealing the hidden patterns shaping the urban environment is essential to understanding its dynamics and making cities smarter. Recent studies have demonstrated that learning the representations of urban regions can be an effective strategy to uncover the intrinsic characteristics of urban areas. However, existing studies do not fully incorporate the diversity of urban data sources. In this work, we propose the heterogeneous urban graph attention network (HUGAT), which incorporates the heterogeneity of diverse urban datasets. In HUGAT, a heterogeneous urban graph (HUG) incorporates both the geo-spatial and temporal people movement variations in a single graph structure. Given a HUG, a set of meta-paths is designed to capture the rich urban semantics as composite relations between nodes. Region embedding is carried out using a heterogeneous graph attention network (HAN). HUGAT is designed to consider multiple learning objectives of a city's geo-spatial and mobility variations simultaneously. In our extensive experiments on NYC data, HUGAT outperformed all the state-of-the-art models. Moreover, it demonstrated a robust generalization capability across the various prediction tasks of crime, average personal income, and bike flow as well as the spatial clustering task.
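A meta-path can be read as a sequence of node types that a walk must follow. The sketch below finds, for each start node, the nodes reachable along one such type sequence in a small heterogeneous graph. The node types (`region`, `poi`), the node names, and the function `metapath_neighbors` are hypothetical and are not drawn from the paper's actual graph schema.

```python
from collections import defaultdict


def metapath_neighbors(edges, node_type, metapath):
    """Map each start node to the nodes reached by following `metapath`.

    edges: iterable of undirected (u, v) pairs
    node_type: dict mapping node -> type name
    metapath: list of type names, e.g. ["region", "poi", "region"]
    """
    adjacency = defaultdict(set)
    for u, v in edges:
        adjacency[u].add(v)
        adjacency[v].add(u)

    result = {}
    for start, kind in node_type.items():
        if kind != metapath[0]:
            continue
        frontier = {start}
        # Advance one hop per type in the meta-path, keeping only matching types.
        for wanted in metapath[1:]:
            frontier = {
                v for u in frontier for v in adjacency[u] if node_type[v] == wanted
            }
        result[start] = frontier - {start}
    return result


node_type = {"r1": "region", "r2": "region", "r3": "region",
             "cafe": "poi", "park": "poi"}
edges = [("r1", "cafe"), ("r2", "cafe"), ("r3", "park")]
neighbors = metapath_neighbors(edges, node_type, ["region", "poi", "region"])
```

Here `r1` and `r2` become meta-path neighbors because they share a point of interest, while `r3` has none. An attention-based model would then aggregate features over such neighbor sets, one set per meta-path.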
