Najmeh Torabian

Emulating the Human Mind: A Neural-symbolic Link Prediction Model with Fast and Slow Reasoning and Filtered Rules

Oct 21, 2023

Abstract: Link prediction is an important task in addressing the incompleteness problem of knowledge graphs (KG). Previous link prediction models suffer from issues related to either performance or explanatory capability. Furthermore, models that are capable of generating explanations often struggle with erroneous paths or reasoning that nonetheless lead to the correct answer. To address these challenges, we introduce a novel Neural-Symbolic model named FaSt-FLiP (Fast and Slow Thinking with Filtered rules for Link Prediction), inspired by two distinct aspects of human cognition: "commonsense reasoning" and "thinking, fast and slow." Our objective is to combine a logical and a neural model for enhanced link prediction. To tackle the challenge of dealing with incorrect paths or rules generated by the logical model, we propose a semi-supervised method to convert rules into sentences. These sentences are then assessed with an NLI (Natural Language Inference) model, and incorrect rules are removed. Our approach to combining logical and neural models involves first obtaining answers from both models. These answers are subsequently unified using an Inference Engine module, which has been realized through both an algorithmic implementation and a novel neural model architecture. To validate the efficacy of our model, we conducted a series of experiments. The results demonstrate the superior performance of our model in both link prediction metrics and the generation of more reliable explanations.

Farspredict: A benchmark dataset for link prediction

Mar 26, 2023

Abstract: Link prediction with knowledge graph embedding (KGE) is a popular method for knowledge graph completion. Furthermore, training KGEs on non-English knowledge graphs promotes knowledge extraction and knowledge graph reasoning in the context of these languages. However, non-English KGEs pose many challenges to learning a low-dimensional representation of a knowledge graph's entities and relations. This paper proposes "Farspredict", a Persian knowledge graph based on Farsbase (the most comprehensive knowledge graph in Persian). It also explains how the knowledge graph structure affects link prediction accuracy in KGE. To evaluate Farspredict, we implemented popular KGE models on it and compared the results with Freebase. Based on the analysis results, some optimizations are carried out on the knowledge graph to improve its functionality in KGE. As a result, a new Persian knowledge graph is obtained. In our implementation, KGE models on Farspredict outperform those on Freebase in many cases. Finally, we discuss which improvements could be effective in enhancing the quality of Farspredict and how much they improve it.

KGRefiner: Knowledge Graph Refinement for Improving Accuracy of Translational Link Prediction Methods

Jun 27, 2021

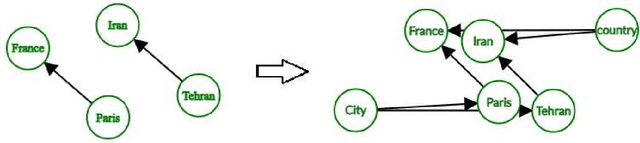

Abstract: Link prediction is the task of predicting missing relations between entities of the knowledge graph by inferring from the facts contained in it. Recent work in link prediction has attempted to increase accuracy by using more layers in the neural network architecture or by using methods that add to the computational complexity of models. In this paper, we propose a method for refining the knowledge graph that makes it more informative, so that link prediction can be performed more accurately using relatively fast translational models. Translational link prediction models, such as TransE, TransH, and TransD, have much less complexity than deep learning approaches. This method uses the hierarchy of relationships and the hierarchy of entities in the knowledge graph to add the entity information as a new entity to the graph and connect it to the nodes that contain this information in their hierarchy. Our experiments show that our method can significantly increase the performance of translational link prediction methods in H@10, MR, and MRR.
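For readers unfamiliar with the metrics named in the abstract, the following is a minimal sketch of how a translational model such as TransE scores a triple and how MR, MRR, and H@10 are computed from the resulting ranks. It is not the authors' implementation: the function names, the L1 distance choice, and the toy embeddings are assumptions made only for illustration.

```python
from typing import Dict, List, Sequence


def transe_score(h: Sequence[float], r: Sequence[float], t: Sequence[float]) -> float:
    """TransE-style L1 distance ||h + r - t||; lower means more plausible."""
    return sum(abs(hi + ri - ti) for hi, ri, ti in zip(h, r, t))


def rank_of_true_tail(
    entity_vecs: Dict[str, Sequence[float]],
    head: str,
    relation_vec: Sequence[float],
    true_tail: str,
) -> int:
    """Rank the true tail among all entities (1 = best, unfiltered setting)."""
    h = entity_vecs[head]
    scores = {e: transe_score(h, relation_vec, v) for e, v in entity_vecs.items()}
    true_score = scores[true_tail]
    # Rank is one plus the number of candidates with a strictly lower distance.
    return 1 + sum(1 for s in scores.values() if s < true_score)


def ranking_metrics(ranks: List[int], k: int = 10) -> Dict[str, float]:
    """Mean rank (MR), mean reciprocal rank (MRR), and Hits@k from a list of ranks."""
    n = len(ranks)
    return {
        "MR": sum(ranks) / n,
        "MRR": sum(1.0 / r for r in ranks) / n,
        f"H@{k}": sum(1 for r in ranks if r <= k) / n,
    }


# Toy, hand-made 2-d embeddings (hypothetical values, not learned).
entities = {"a": [0.0, 0.0], "b": [1.0, 0.0], "c": [3.0, 0.0]}
relation = [1.0, 0.0]

ranks = [
    rank_of_true_tail(entities, "a", relation, "b"),
    rank_of_true_tail(entities, "a", relation, "c"),
]
metrics = ranking_metrics(ranks, k=10)
```

Lower MR and higher MRR and H@10 indicate better link prediction. Published evaluations usually also filter out other known true triples before ranking, which this sketch omits.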
