Naimul Haque

Catastrophic Forgetting in LLMs: A Comparative Analysis Across Language Tasks

Apr 01, 2025

Abstract:Large Language Models (LLMs) have significantly advanced Natural Language Processing (NLP), particularly in Natural Language Understanding (NLU) tasks. As we progress toward an agentic world where LLM-based agents autonomously handle specialized tasks, it becomes crucial for these models to adapt to new tasks without forgetting previously learned information - a challenge known as catastrophic forgetting. This study evaluates the continual fine-tuning of various open-source LLMs with different parameter sizes (specifically models under 10 billion parameters) on key NLU tasks from the GLUE benchmark, including SST-2, MRPC, CoLA, and MNLI. By employing prompt engineering and task-specific adjustments, we assess and compare the models' abilities to retain prior knowledge while learning new tasks. Our results indicate that models such as Phi-3.5-mini exhibit minimal forgetting while maintaining strong learning capabilities, making them well-suited for continual learning environments. Additionally, models like Orca-2-7b and Qwen2.5-7B demonstrate impressive learning abilities and overall performance after fine-tuning. This work contributes to understanding catastrophic forgetting in LLMs and highlights the role of prompt engineering in optimizing model performance for continual learning scenarios.

BDA: Bangla Text Data Augmentation Framework

Dec 11, 2024

Abstract:Data augmentation involves generating synthetic samples that resemble those in a given dataset. In resource-limited fields where high-quality data is scarce, augmentation plays a crucial role in increasing the volume of training data. This paper introduces a Bangla Text Data Augmentation (BDA) Framework that uses both pre-trained models and rule-based methods to create new variants of the text. A filtering process is included to ensure that the new text keeps the same meaning as the original while also adding variety in the words used. We conduct a comprehensive evaluation of the framework's effectiveness in Bangla text classification tasks. Our framework achieved significant improvement in F1 scores across five distinct datasets, delivering performance equivalent to models trained on 100% of the data while utilizing only 50% of the training dataset. Additionally, we explore the impact of data scarcity by progressively reducing the training data and augmenting it through BDA, resulting in notable F1 score enhancements. The study offers a thorough examination of BDA's performance, identifying key factors for optimal results and addressing its limitations through detailed analysis.

BdSpell: A YOLO-based Real-time Finger Spelling System for Bangla Sign Language

Sep 24, 2023

Abstract:In the domain of Bangla Sign Language (BdSL) interpretation, prior approaches often imposed a burden on users, requiring them to spell words without hidden characters, which were subsequently corrected using Bangla grammar rules due to the missing classes in the BdSL36 dataset. However, this method posed a challenge in accurately guessing the incorrect spelling of words. To address this limitation, we propose a novel real-time finger spelling system based on the YOLOv5 architecture. Our system employs specified rules and numerical classes as triggers to efficiently generate hidden and compound characters, eliminating the necessity for additional classes and significantly enhancing user convenience. Notably, our approach achieves character spelling in an impressive 1.32 seconds with a remarkable accuracy rate of 98%. Furthermore, our YOLOv5 model, trained on 9147 images, demonstrates an exceptional mean Average Precision (mAP) of 96.4%. These advancements represent a substantial progression in augmenting BdSL interpretation, promising increased inclusivity and accessibility for the linguistic minority. This innovative framework, characterized by compatibility with existing YOLO versions, stands as a transformative milestone in enhancing communication modalities and linguistic equity within the Bangla Sign Language community.

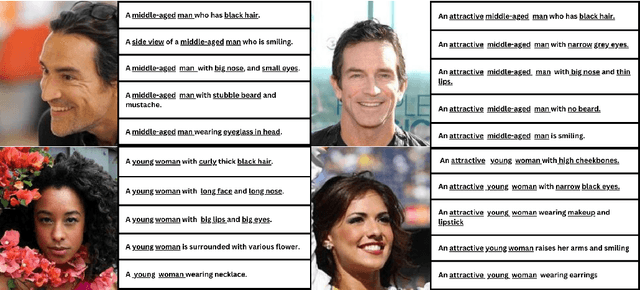

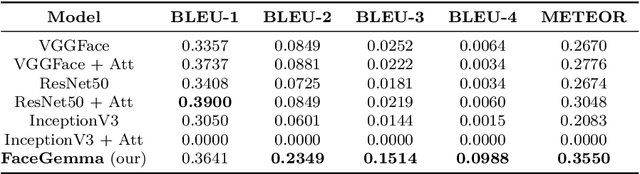

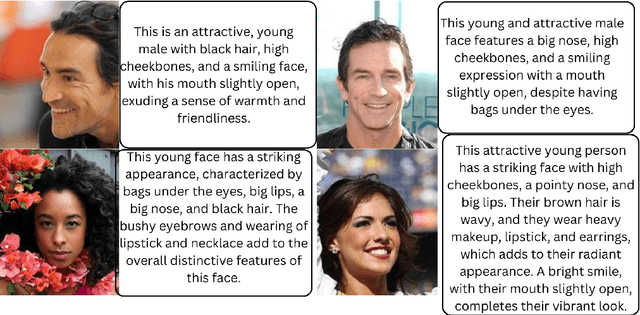

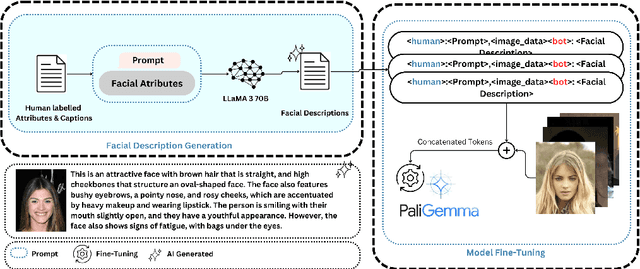

FaceAtt: Enhancing Image Captioning with Facial Attributes for Portrait Images

Sep 24, 2023

Abstract:Automated image caption generation is a critical area of research that enhances accessibility and understanding of visual content for diverse audiences. In this study, we propose the FaceAtt model, a novel approach to attribute-focused image captioning that emphasizes the accurate depiction of facial attributes within images. FaceAtt automatically detects and describes a wide range of attributes, including emotions, expressions, pointed noses, fair skin tones, hair textures, attractiveness, and approximate age ranges. Leveraging deep learning techniques, we explore the impact of different image feature extraction methods on caption quality and evaluate our model's performance using metrics such as BLEU and METEOR. Our FaceAtt model leverages annotated attributes of portraits as supplementary prior knowledge for our portrait images before captioning. This innovative addition yields a subtle yet discernible enhancement in the resulting scores, exemplifying the potency of incorporating additional attribute vectors during training. Furthermore, our research contributes to the broader discourse on ethical considerations in automated captioning. This study sets the stage for future research in refining attribute-focused captioning techniques, with a focus on enhancing linguistic coherence, addressing biases, and accommodating diverse user needs.
