Nahian Ibn Hasan

DeeptDCS: Deep Learning-Based Estimation of Currents Induced During Transcranial Direct Current Stimulation

May 04, 2022

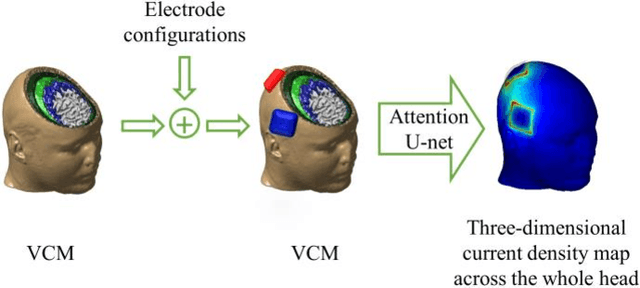

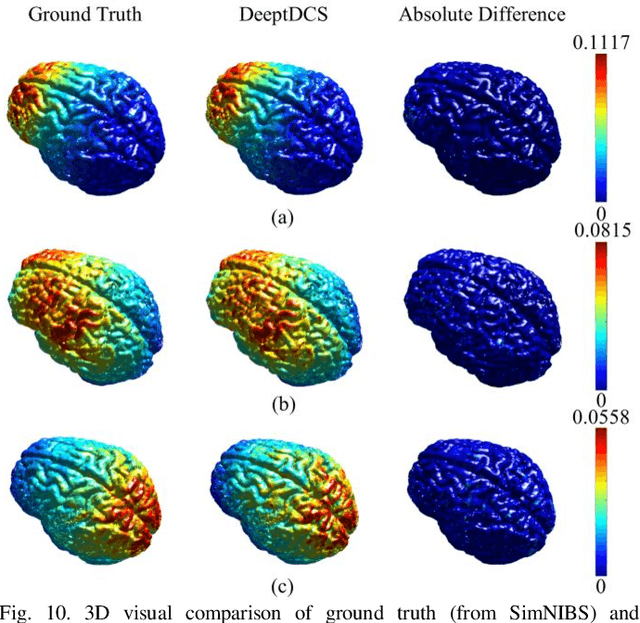

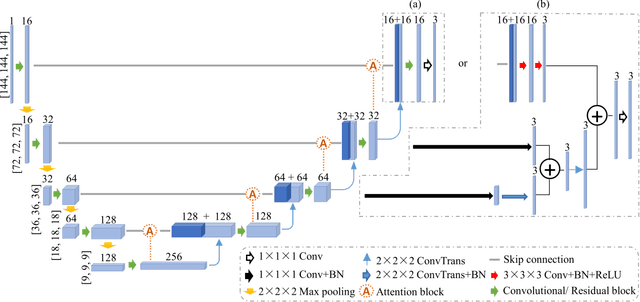

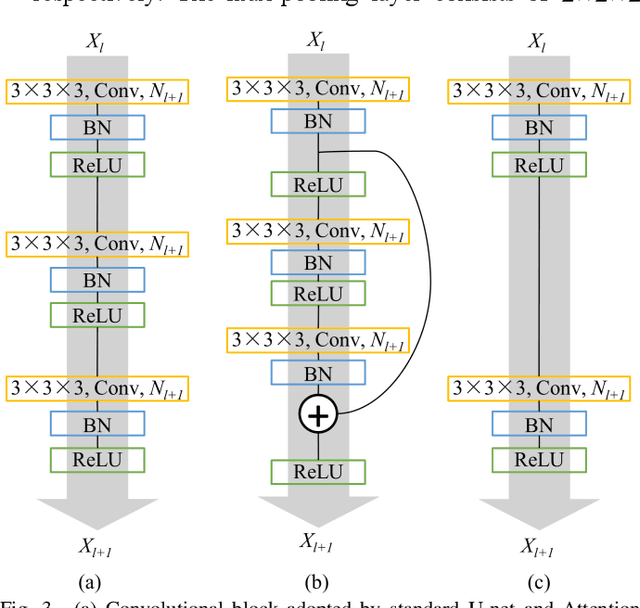

Abstract: Objective: Transcranial direct current stimulation (tDCS) is a non-invasive brain stimulation technique used to generate conduction currents in the head and disrupt brain functions. To evaluate the tDCS-induced current density in near real time, this paper proposes a deep learning-based emulator named DeeptDCS. Methods: The emulator uses an Attention U-net that takes the volume conductor models (VCMs) of head tissues as input and outputs the three-dimensional current density distribution across the entire head. The electrode configurations are incorporated into the VCMs without increasing the number of input channels; this allows the non-parametric features of electrodes (e.g., thickness, shape, size, and position) to be included directly in the training and testing of the emulator. Results: Attention U-net outperforms the standard U-net and three other variants (Residual U-net, Attention Residual U-net, and Multi-scale Residual U-net) in terms of accuracy. The generalization ability of DeeptDCS to non-trained electrode positions can be greatly enhanced by fine-tuning the model. One emulation via DeeptDCS takes a fraction of a second. Conclusion: DeeptDCS is at least two orders of magnitude faster than a physics-based open-source simulator while providing satisfactorily accurate results. Significance: The high computational efficiency permits the use of DeeptDCS in applications that require repeated execution, such as uncertainty quantification and optimization studies of tDCS.

Bird Species Classification And Acoustic Features Selection Based on Distributed Neural Network with Two Stage Windowing of Short-Term Features

Jan 01, 2022

Abstract: Identifying bird species from audio recordings is challenging because of multiple species in the same recording, background noise, and long recording durations. Choosing suitable acoustic features for bird species classification is a further problem. This paper presents a hybrid method that combines traditional signal processing with a deep learning-based approach to classify bird species from audio recordings of diverse sources and types. A detailed study of 34 different features helps select a suitable feature set for classification and analysis in real-time applications. The proposed deep neural network learns both acoustic and temporal features. The method starts by detecting voice activity in the raw signal, then extracts short-term features from the processed recording using 50 ms time windows with 25 ms overlap. The short-term features are then reshaped by a second stage of non-overlapping windowing and used to train a distributed 2D Convolutional Neural Network (CNN) that forwards its output features to a Long Short-Term Memory (LSTM) network. A final dense layer classifies the bird species. For the 10-class classifier, the highest accuracy achieved was 90.45% for a feature set consisting of 13 Mel Frequency Cepstral Coefficients (MFCCs) and 12 Chroma Vectors. The corresponding specificity and AUC scores are 98.94% and 94.09%, respectively.
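The two-stage windowing described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the 16 kHz sample rate, the block size of 10 frames, and the two placeholder features (log energy and zero-crossing rate, standing in for MFCCs and chroma vectors) are all assumptions chosen for illustration. Only the 50 ms window, the 25 ms overlap, and the non-overlapping second stage come from the abstract.

```python
import numpy as np


def short_term_frames(signal, sample_rate, win_ms=50.0, hop_ms=25.0):
    """First stage: split a 1-D signal into overlapping frames.

    A 50 ms window with 25 ms overlap corresponds to a 25 ms hop.
    """
    win = int(round(sample_rate * win_ms / 1000.0))
    hop = int(round(sample_rate * hop_ms / 1000.0))
    if len(signal) < win:
        return np.empty((0, win), dtype=signal.dtype)
    n_frames = 1 + (len(signal) - win) // hop
    # Row i holds samples [i * hop, i * hop + win).
    idx = np.arange(win)[None, :] + hop * np.arange(n_frames)[:, None]
    return signal[idx]


def second_stage_windows(features, frames_per_window):
    """Second stage: group per-frame feature vectors into non-overlapping blocks.

    Trailing frames that do not fill a complete block are dropped.
    """
    n_blocks = features.shape[0] // frames_per_window
    trimmed = features[: n_blocks * frames_per_window]
    return trimmed.reshape(n_blocks, frames_per_window, features.shape[1])


# Hypothetical input: 2 s of noise at an assumed 16 kHz sample rate.
sample_rate = 16000
signal = np.random.default_rng(0).standard_normal(2 * sample_rate)

frames = short_term_frames(signal, sample_rate)

# Placeholder short-term features (stand-ins for MFCCs and chroma vectors).
log_energy = np.log(np.sum(frames ** 2, axis=1) + 1e-12)
zero_cross = np.mean(np.abs(np.diff(np.sign(frames), axis=1)) > 0, axis=1)
features = np.stack([log_energy, zero_cross], axis=1)

# Each block is a 2-D (frames x features) array that a 2D CNN could consume;
# the sequence of blocks is what a recurrent layer would then see.
blocks = second_stage_windows(features, frames_per_window=10)
```

Each block is a small frames-by-features array, so a 2D CNN applied per block followed by an LSTM over the block sequence matches the shape of the pipeline the abstract outlines, under the assumptions stated above.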
