Nadeem Yousaf

Estimation of BMI from Facial Images using Semantic Segmentation based Region-Aware Pooling

Apr 10, 2021

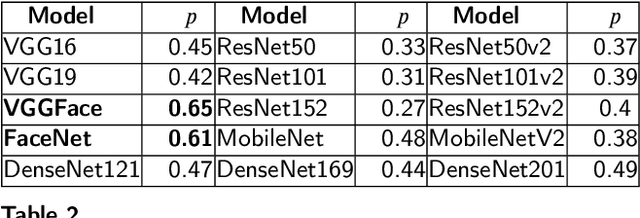

Abstract:Body-Mass-Index (BMI) conveys important information about one's life such as health and socio-economic conditions. Large-scale automatic estimation of BMIs can help predict several societal behaviors such as health, job opportunities, friendships, and popularity. The recent works have either employed hand-crafted geometrical face features or face-level deep convolutional neural network features for face to BMI prediction. The hand-crafted geometrical face feature lack generalizability and face-level deep features don't have detailed local information. Although useful, these methods missed the detailed local information which is essential for exact BMI prediction. In this paper, we propose to use deep features that are pooled from different face regions (eye, nose, eyebrow, lips, etc.,) and demonstrate that this explicit pooling from face regions can significantly boost the performance of BMI prediction. To address the problem of accurate and pixel-level face regions localization, we propose to use face semantic segmentation in our framework. Extensive experiments are performed using different Convolutional Neural Network (CNN) backbones including FaceNet and VGG-face on three publicly available datasets: VisualBMI, Bollywood and VIP attributes. Experimental results demonstrate that, as compared to the recent works, the proposed Reg-GAP gives a percentage improvement of 22.4\% on VIP-attribute, 3.3\% on VisualBMI, and 63.09\% on the Bollywood dataset.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge