Muthuvel Arigovindan

PSTAIC regularization for 2D spatiotemporal image reconstruction

Apr 28, 2024

Abstract: We propose a model for the restoration of spatio-temporal TIRF images based on STAIC, an infimal-decomposition regularization model proposed earlier. We strengthen the STAIC algorithm by enabling it to estimate the relative weights in the regularization term, incorporating them into the optimization problem. We also design an iterative scheme that alternately minimizes the weight and image sub-problems. We demonstrate the restoration quality of this regularization scheme in comparison with other restoration models that use similar weight estimation schemes.

STAIC regularization for spatio-temporal image reconstruction

Apr 07, 2024

Abstract: We propose a regularization-based image restoration scheme for 2D images recorded over time (2D+t). We design an infimal convolution-based regularization function, which we call Spatio-Temporal Adaptive Infimal Convolution (STAIC) regularization. We formulate the infimal convolution as an additive decomposition of the 2D+t image such that the extent of spatial and temporal smoothing is controlled in a spatially and temporally varying manner. This makes the regularization adaptable to the local characteristics of the motion, leading to an improved ability to handle noise. We also develop a minimization method for image reconstruction using the proposed form of regularization. We demonstrate the effectiveness of the proposed regularization using TIRF images recorded over time and compare it with selected existing regularizations.
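
For orientation, the textbook infimal convolution of two regularization functionals can be written as below. This is only the generic definition; the symbols $R_s$ (a spatial smoothness term), $R_t$ (a temporal smoothness term), and the components $u$, $v$ are illustrative assumptions, and the spatially and temporally varying weighting that STAIC adds is not specified in the abstract, so it is not reproduced here.

```latex
% Generic infimal convolution: split the 2D+t image x into two additive
% components and penalize each with its own regularizer.
(R_s \,\square\, R_t)(x) \;=\; \inf_{u + v = x} \bigl\{ R_s(u) + R_t(v) \bigr\}
```

The additive decomposition $x = u + v$ is what the abstract refers to: each component can be smoothed by the term that suits it best.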

Non-convex regularization based on shrinkage penalty function

Sep 08, 2023

Abstract: Total Variation regularization (TV) is a seminal approach for image recovery. TV involves the norm of the image's gradient, aggregated over all pixel locations. Therefore, TV leads to piece-wise constant solutions, resulting in what is known as the "staircase effect." To mitigate this effect, the Hessian Schatten norm regularization (HSN) employs second-order derivatives, represented by the ℓp norm of the eigenvalues of the image Hessian, summed across all pixels. HSN demonstrates superior structure-preserving properties compared to TV. However, HSN solutions tend to be overly smoothed. To address this, we introduce a non-convex shrinkage penalty applied to the Hessian's eigenvalues, deviating from the convex ℓp norm. It is important to note that the shrinkage penalty is not defined directly in closed form, but specified indirectly through its proximal operation. This makes constructing a provably convergent algorithm difficult, as the singular values are also defined through a non-linear operation. However, we were able to derive a provably convergent algorithm using proximal operations. We prove the convergence by establishing that the proposed regularization adheres to restricted proximal regularity. The images recovered by this regularization were sharper than those recovered by its convex counterparts.
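
The two convex penalties described above can be evaluated numerically as in the following minimal sketch. It is not the authors' implementation: the function names, the finite-difference schemes (forward differences for TV, `np.gradient` for the Hessian), and the boundary handling are all assumptions chosen for illustration, and the non-convex shrinkage penalty itself is not attempted because the abstract does not give its proximal operator.

```python
import numpy as np


def total_variation(img):
    """Isotropic TV: sum over pixels of the Euclidean norm of the gradient."""
    # Forward differences; appending the last row/column makes the
    # boundary difference zero (an assumed boundary convention).
    dx = np.diff(img, axis=1, append=img[:, -1:])
    dy = np.diff(img, axis=0, append=img[-1:, :])
    return float(np.sum(np.sqrt(dx**2 + dy**2)))


def hessian_schatten_norm(img, p=1.0):
    """Sum over pixels of the l_p norm of the Hessian eigenvalues (finite p >= 1)."""
    # First derivatives along rows (axis 0) and columns (axis 1).
    gy, gx = np.gradient(img)
    # Second derivatives obtained by differentiating the first derivatives.
    gyy, gyx = np.gradient(gy)
    gxy, gxx = np.gradient(gx)
    # Symmetrize the mixed derivative so each 2x2 Hessian is symmetric.
    hxy = 0.5 * (gxy + gyx)
    # Stack into an array of shape (H, W, 2, 2): one Hessian per pixel.
    hess = np.stack(
        [np.stack([gxx, hxy], axis=-1), np.stack([hxy, gyy], axis=-1)],
        axis=-2,
    )
    eig = np.linalg.eigvalsh(hess)  # eigenvalues of each symmetric 2x2 matrix
    per_pixel = np.sum(np.abs(eig) ** p, axis=-1) ** (1.0 / p)
    return float(np.sum(per_pixel))


if __name__ == "__main__":
    ramp = np.tile(np.arange(8.0), (8, 1))  # linear intensity ramp
    step = np.zeros((8, 8))
    step[:, 4:] = 1.0                       # sharp vertical edge
    for name, im in [("ramp", ramp), ("step", step)]:
        print(name, total_variation(im), hessian_schatten_norm(im))
```

On the linear ramp, TV is nonzero while the Hessian-based penalty vanishes, which illustrates why second-order penalties do not favor piece-wise constant ("staircase") solutions on smoothly varying regions.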

Compact Representation of n-th order TGV

Sep 06, 2023

Abstract: Although regularization methods based on derivatives are favored for their robustness and computational simplicity, research exploring higher-order derivatives remains limited. This scarcity can possibly be attributed to the appearance of oscillations in reconstructions when directly generalizing TV-1 to higher orders (3 or more). Addressing this, Bredies et al. introduced a notable approach for generalizing total variation, known as Total Generalized Variation (TGV). This technique introduces a regularization that generates estimates embodying piece-wise polynomial behavior of varying degrees across distinct regions of an image. Importantly, to our current understanding, no sufficiently general algorithm exists for solving TGV regularization for orders beyond 2. This is likely because of two problems: firstly, the problem is complex, as TGV regularization is defined as a minimization problem with non-trivial constraints, and secondly, TGV is represented in terms of tensor fields, which are difficult to implement. In this work we tackle the first challenge by giving two simple and implementable representations of n-th order TGV.

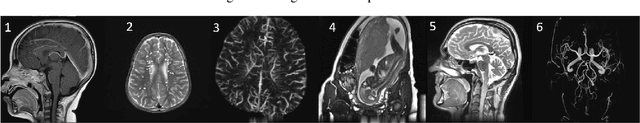

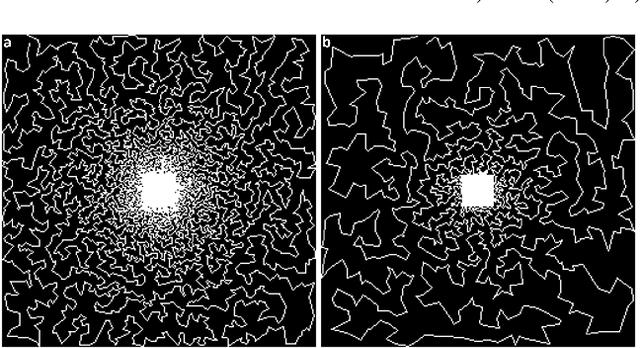

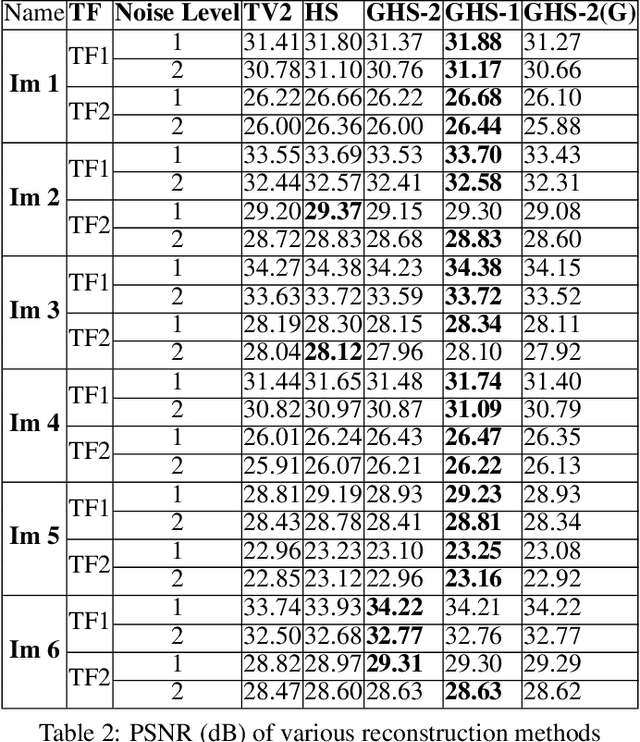

Structurally Adaptive Multi-Derivative Regularization for Image Recovery from Sparse Fourier Samples

Jun 10, 2021

Abstract: The importance of regularization has been well established in image reconstruction -- which is the computational inversion of the imaging forward model -- with applications including deconvolution for microscopy, tomographic reconstruction, magnetic resonance imaging, and so on. Originally, the primary role of regularization was to stabilize the computational inversion of the imaging forward model against noise. However, a recent framework pioneered by Donoho and others, known as compressive sensing, extended the role of regularization beyond the stabilization of inversion. It established the possibility that regularization can recover full images from highly undersampled measurements. However, it was observed that the quality of reconstruction yielded by compressive sensing methods falls abruptly when the under-sampling and/or measurement noise goes beyond a certain threshold. Recently developed learning-based methods are believed to outperform compressive sensing methods without a steep drop in reconstruction quality under such imaging conditions. However, the need for training data limits their applicability. In this paper, we develop a regularization method that outperforms compressive sensing methods as well as selected learning-based methods, without any need for training data. The regularization is constructed as a spatially varying weighted sum of first- and canonical second-order derivatives, with the weights adapted to the image structure; the weights are determined such that the attenuation of sharp image features -- which is inevitable with the use of any regularization -- is significantly reduced. We demonstrate the effectiveness of the proposed method by performing reconstruction on sparse Fourier samples simulated from a variety of MRI images.

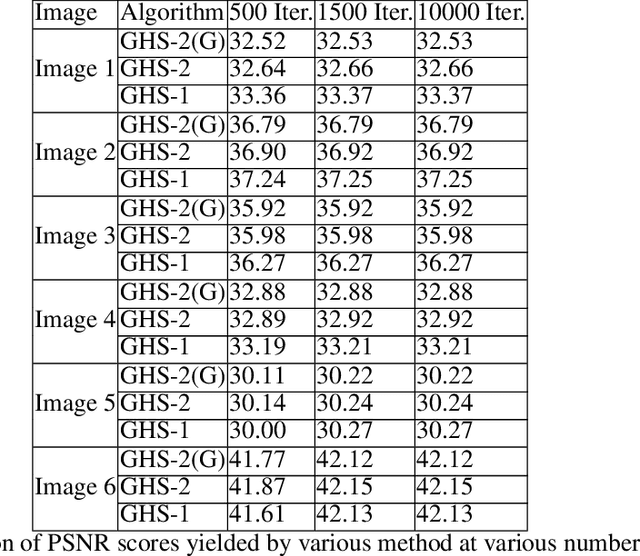

Generalized Hessian-Schatten Norm Regularization for Image Reconstruction

May 25, 2021

Abstract: Regularization plays a crucial role in reliably utilizing imaging systems for scientific and medical investigations. It helps to stabilize the process of computationally undoing any degradation caused by physical limitations of the imaging process. In the past decades, total variation regularization, especially second-order total variation (TV-2) regularization, played a dominant role in the literature. Two forms of generalization, namely Hessian-Schatten norm (HSN) regularization and total generalized variation (TGV) regularization, have been recently proposed and have become significant developments in the area of regularization for imaging inverse problems owing to their performance. Here, we develop a novel regularization for image recovery that combines the strengths of these well-known forms. We achieve this by restricting the maximization space in the dual form of HSN in the same way that TGV is obtained from TV-2. We name the new regularization the generalized Hessian-Schatten norm regularization (GHSN), and we develop a novel optimization method for image reconstruction using the new form of regularization, based on the well-known alternating direction method of multipliers (ADMM) framework. We demonstrate the strength of GHSN using some reconstruction examples.

PAT image reconstruction using augmented sparsity regularization with semi-automated tuning of regularization weight

Mar 24, 2021

Abstract: Among all tissue imaging modalities, photo-acoustic tomography (PAT) has been getting increasing attention in the recent past because it offers high contrast and high penetrability, and is capable of retrieving high resolution. The reconstruction methods used in PAT play a crucial role in the applicability of PAT, and PAT finds particularly wide applicability if a model-based regularized reconstruction method is used. A crucial factor that determines the quality of reconstruction in such methods is the choice of regularization weight. Unfortunately, an appropriately tuned value of the regularization weight varies significantly with the noise level, as well as with the high-resolution content of the image, in a way that has not been well understood. There have been attempts to determine the optimum regularization weight from the measured data in the context of using elementary and general-purpose regularizations. In this paper, we develop a method for semi-automated tuning of the regularization weight in the context of using a modern type of regularization that was specifically designed for PAT image reconstruction. As a first step, we introduce a relative smoothness constraint with a parameter; this parameter computationally maps into the actual regularization weight, but its tuning does not vary significantly with the noise level or with the high-resolution content of the image. Next, we construct an algorithm that integrates the task of determining this mapping with obtaining the reconstruction. Finally, we demonstrate experimentally that we can run this algorithm with a nominal value of the relative smoothness parameter -- a value independent of the noise level and the structure of the underlying image -- to obtain good quality reconstructions.