Murilo Gustineli

Tile-Based ViT Inference with Visual-Cluster Priors for Zero-Shot Multi-Species Plant Identification

Jul 08, 2025

Abstract: We describe DS@GT's second-place solution to the PlantCLEF 2025 challenge on multi-species plant identification in vegetation quadrat images. Our pipeline combines (i) a fine-tuned Vision Transformer (ViTD2PC24All) for patch-level inference, (ii) a 4x4 tiling strategy that aligns patch size with the network's 518x518 receptive field, and (iii) domain-prior adaptation through PaCMAP + K-Means visual clustering and geolocation filtering. Tile predictions are aggregated by majority vote and re-weighted with cluster-specific Bayesian priors, yielding a macro-averaged F1 of 0.348 (private leaderboard) while requiring no additional training. All code, configuration files, and reproducibility scripts are publicly available at https://github.com/dsgt-arc/plantclef-2025.
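The tiling and aggregation steps named in this abstract can be illustrated with a minimal sketch. It is not the team's implementation: the function names `tile_boxes` and `aggregate`, the rule "vote count multiplied by prior", and the toy species labels are all assumptions made for illustration, and the model call that would produce per-tile predictions is left out.

```python
from collections import Counter


def tile_boxes(width, height, grid=4):
    """Return (left, top, right, bottom) pixel boxes for a grid x grid tiling."""
    boxes = []
    for row in range(grid):
        for col in range(grid):
            left = col * width // grid
            top = row * height // grid
            right = (col + 1) * width // grid
            bottom = (row + 1) * height // grid
            boxes.append((left, top, right, bottom))
    return boxes


def aggregate(tile_predictions, prior, top_n=2):
    """Count how many tiles vote for each species, weight the counts by a
    prior probability (assumed rule: count * prior), and return the top_n
    species. Species missing from the prior get weight 0."""
    votes = Counter()
    for species_list in tile_predictions:
        votes.update(set(species_list))  # one vote per species per tile
    scores = {s: count * prior.get(s, 0.0) for s, count in votes.items()}
    # Sort by descending score, breaking ties alphabetically.
    ranked = sorted(scores, key=lambda s: (-scores[s], s))
    return ranked[:top_n]


# Hypothetical example: a 2072x2072 image yields sixteen 518x518 tiles.
boxes = tile_boxes(2072, 2072)
# Hypothetical per-tile species predictions and a hypothetical cluster prior.
tile_predictions = [["a", "b"], ["a"], ["b", "c"], ["a", "c"]]
prior = {"a": 0.5, "b": 0.1, "c": 0.4}
selected = aggregate(tile_predictions, prior)
```

In this toy case, species `b` and `c` receive the same number of tile votes, and the prior is what separates them.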

Transfer Learning with Pseudo Multi-Label Birdcall Classification for DS@GT BirdCLEF 2024

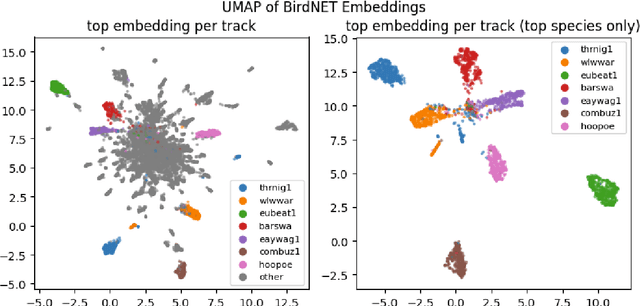

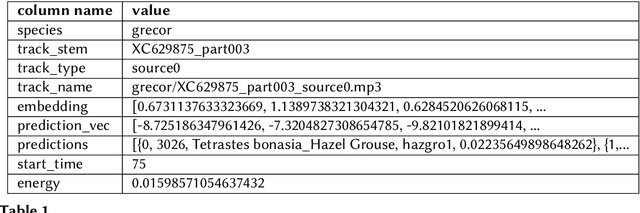

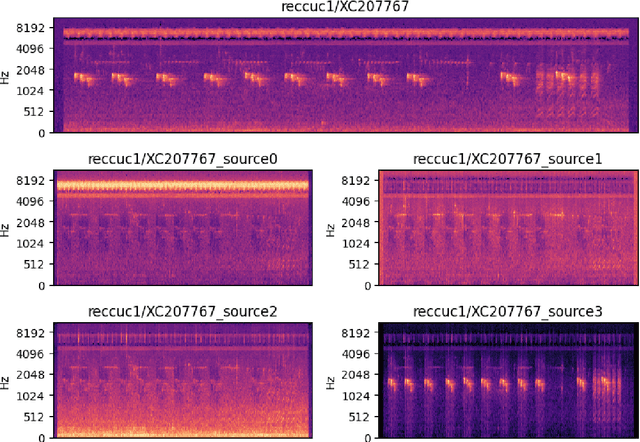

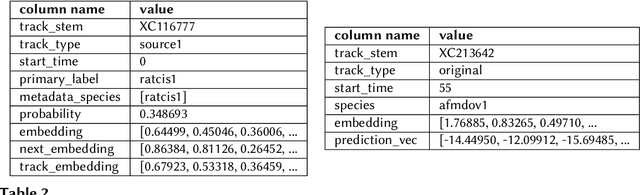

Jul 08, 2024

Abstract: We present working notes for the DS@GT team on transfer learning with pseudo multi-label birdcall classification for the BirdCLEF 2024 competition, focused on identifying Indian bird species in recorded soundscapes. Our approach utilizes production-grade models such as the Google Bird Vocalization Classifier, BirdNET, and EnCodec to address representation and labeling challenges in the competition. We explore the distributional shift between this year's edition of unlabeled soundscapes representative of the hidden test set and propose a pseudo multi-label classification strategy to leverage the unlabeled data. Our highest post-competition public leaderboard score is 0.63 using BirdNET embeddings with Bird Vocalization pseudo-labels. Our code is available at https://github.com/dsgt-kaggle-clef/birdclef-2024.

Multi-Label Plant Species Classification with Self-Supervised Vision Transformers

Jul 08, 2024

Abstract: We present a transfer learning approach using a self-supervised Vision Transformer (DINOv2) for the PlantCLEF 2024 competition, focusing on multi-label plant species classification. Our method leverages both base and fine-tuned DINOv2 models to extract generalized feature embeddings. We train classifiers to predict multiple plant species within a single image using these rich embeddings. To address the computational challenges of the large-scale dataset, we employ Spark for distributed data processing, ensuring efficient memory management and processing across a cluster of workers. Our data processing pipeline transforms images into grids of tiles, classifies each tile, and aggregates these predictions into a consolidated set of probabilities. Our results demonstrate the efficacy of combining transfer learning with advanced data processing techniques for multi-label image classification tasks. Our code is available at https://github.com/dsgt-kaggle-clef/plantclef-2024.
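As a rough illustration of consolidating per-tile predictions into one set of probabilities, the sketch below pools tile-level probability vectors per species. The abstract does not state which pooling rule is used, so both the `max` and `mean` options, and the function name `aggregate_tile_probs`, are assumptions.

```python
def aggregate_tile_probs(tile_probs, how="max"):
    """Pool a list of per-tile probability vectors into one vector.

    tile_probs: list of equal-length lists, one probability per species.
    how: "max" keeps the strongest tile response per species,
         "mean" averages over tiles. Both are illustrative choices.
    """
    if not tile_probs:
        raise ValueError("tile_probs must contain at least one tile")
    per_species = list(zip(*tile_probs))  # transpose: species -> tile values
    if how == "max":
        return [max(values) for values in per_species]
    if how == "mean":
        return [sum(values) / len(values) for values in per_species]
    raise ValueError(f"unknown pooling rule: {how}")


# Hypothetical probabilities for two tiles and two species.
tiles = [[0.25, 0.75], [0.5, 0.25]]
pooled_max = aggregate_tile_probs(tiles, how="max")
pooled_mean = aggregate_tile_probs(tiles, how="mean")
```

Max pooling suits the multi-label setting when a species may appear in only one tile, while mean pooling favors species that are visible across the whole image.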

Transfer Learning with Self-Supervised Vision Transformers for Snake Identification

Jul 08, 2024

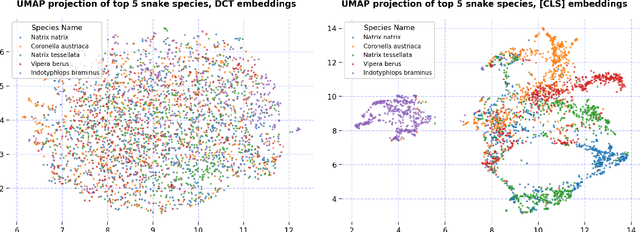

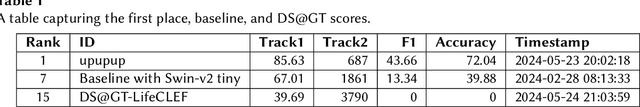

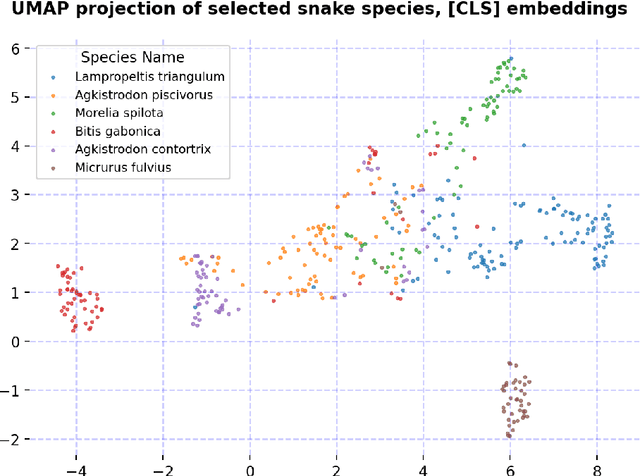

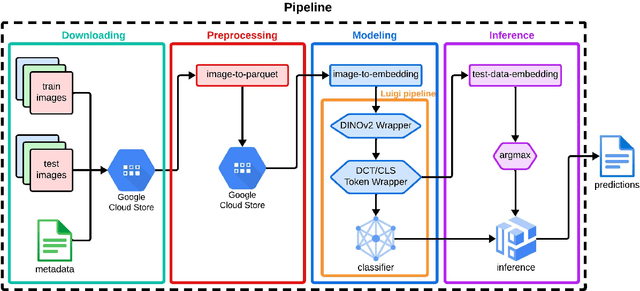

Abstract: We present our approach for the SnakeCLEF 2024 competition to predict snake species from images. We explore and use Meta's DINOv2 vision transformer model for feature extraction to tackle species' high variability and visual similarity in a dataset of 182,261 images. We perform exploratory analysis on embeddings to understand their structure, and train a linear classifier on the embeddings to predict species. Despite achieving a score of 39.69, our results show promise for DINOv2 embeddings in snake identification. All code for this project is available at https://github.com/dsgt-kaggle-clef/snakeclef-2024.

Transfer Learning with Semi-Supervised Dataset Annotation for Birdcall Classification

Jun 29, 2023

Abstract: We present working notes on transfer learning with semi-supervised dataset annotation for the BirdCLEF 2023 competition, focused on identifying African bird species in recorded soundscapes. Our approach utilizes existing off-the-shelf models, BirdNET and MixIT, to address representation and labeling challenges in the competition. We explore the embedding space learned by BirdNET and propose a process to derive an annotated dataset for supervised learning. Our experiments involve various models and feature engineering approaches to maximize performance on the competition leaderboard. The results demonstrate the effectiveness of our approach in classifying bird species and highlight the potential of transfer learning and semi-supervised dataset annotation in similar tasks.

A survey on recently proposed activation functions for Deep Learning

Apr 07, 2022

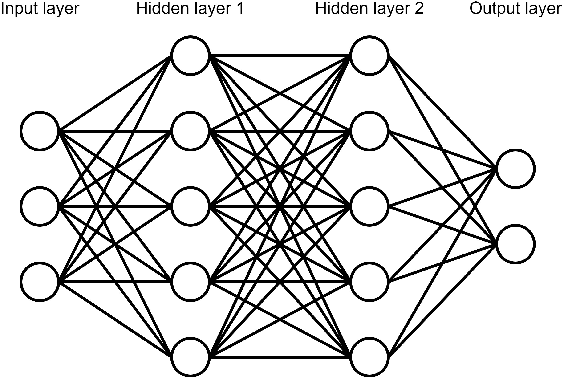

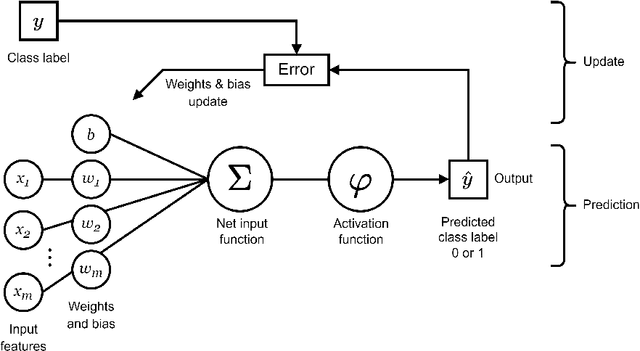

Abstract: Artificial neural networks (ANN), typically referred to as neural networks, are a class of Machine Learning algorithms inspired by the biological structure of the human brain, and they have achieved widespread success. Neural networks are inherently powerful due to their ability to learn complex function approximations from data. This generalization ability has had an impact on multidisciplinary areas involving image recognition, speech recognition, natural language processing, and others. Activation functions are a crucial sub-component of neural networks. They define the output of a node in the network given a set of inputs. This survey discusses the main concepts of activation functions in neural networks, including: a brief introduction to deep neural networks; a summary of what activation functions are and how they are used in neural networks; their most common properties; the different types of activation functions; some of the challenges and limitations of activation functions, along with alternative solutions; and final remarks.
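To make concrete how an activation function defines a node's output from its inputs, here is a small sketch using three widely known activations. The selection of functions, the helper `neuron_output`, and the example weights are illustrative assumptions and are not taken from the survey itself.

```python
import math


def sigmoid(z):
    """Logistic sigmoid: squashes any real z into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Rectified linear unit: passes positive z, clamps negatives to 0."""
    return max(0.0, z)


def leaky_relu(z, alpha=0.01):
    """Leaky ReLU: like ReLU, but keeps a small slope alpha for z <= 0."""
    return z if z > 0 else alpha * z


def neuron_output(inputs, weights, bias, activation):
    """Compute activation(w . x + b) for a single node."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


# Hypothetical node with two inputs; the pre-activation here is 0.5 - 0.5 = 0.
out_relu = neuron_output([1.0, 2.0], [0.5, -0.25], 0.0, relu)
out_sigmoid = neuron_output([1.0, 2.0], [0.5, -0.25], 0.0, sigmoid)
```

The same weighted sum produces different node outputs depending on the activation chosen, which is why the choice affects what the network can learn.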
