Muhammad Andy Putratama

Safe reinforcement learning with self-improving hard constraints for multi-energy management systems

Apr 18, 2023

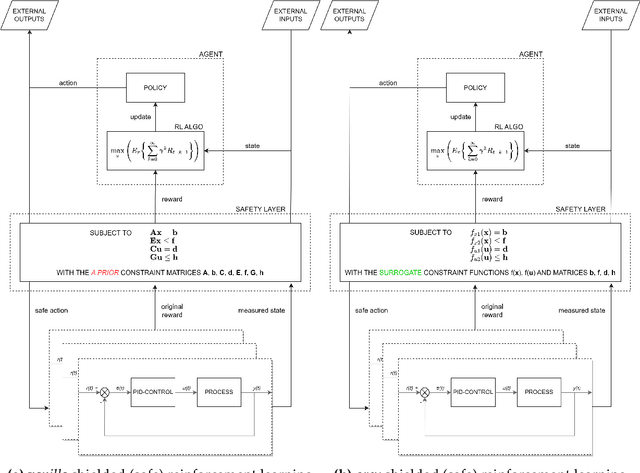

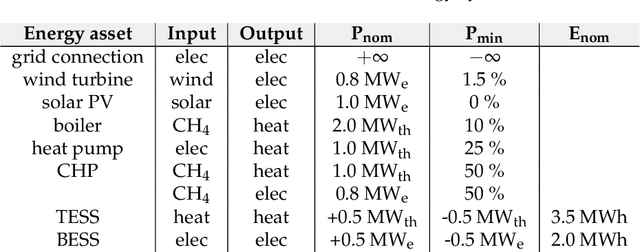

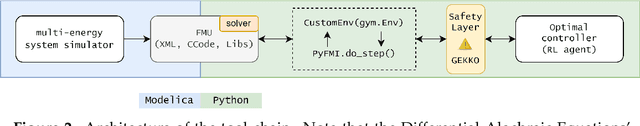

Abstract: Safe reinforcement learning (RL) with hard constraint guarantees is a promising optimal control direction for multi-energy management systems. It requires only the environment-specific constraint functions a priori, not a complete model (i.e. plant, disturbance and noise models, and prediction models for states not included in the plant model, e.g. demand, weather, and price forecasts). The project-specific upfront and ongoing engineering effort is therefore still reduced, better representations of the underlying system dynamics can still be learned, and modeling bias is kept to a minimum (no model-based objective function). However, even the constraint functions alone are not always trivial to provide accurately in advance (e.g. an energy balance constraint requires detailed determination of all energy inputs and outputs), which can lead to unsafe behavior. In this paper, we present two novel advancements: (I) combining the OptLayer and SafeFallback methods into a method named OptLayerPolicy, to increase the initial utility while keeping a high sample efficiency; and (II) introducing self-improving hard constraints, to increase the accuracy of the constraint functions as more data becomes available so that better policies can be learned. Both advancements keep the constraint formulation decoupled from the RL formulation, so that new (presumably better) RL algorithms can act as drop-in replacements. In a simulated multi-energy system case study, we show that the initial utility increases to 92.4% (OptLayerPolicy) compared to 86.1% (OptLayer), and that the utility of the policy after training increases to 104.9% (GreyOptLayerPolicy) compared to 103.4% (OptLayer), all relative to a vanilla RL benchmark. Although introducing surrogate functions into the optimization problem requires special attention, we conclude that the newly presented GreyOptLayerPolicy method is the most advantageous.
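
To make the idea of a constraint layer that stays decoupled from the RL algorithm more concrete, here is a minimal sketch. It is not the paper's OptLayerPolicy implementation, and the abstract does not say how the two methods are combined. It assumes one plausible reading: a proposed action is projected onto the feasible set, and a fallback policy is used when no feasible action exists. The names `SafetyLayer`, `storage_bounds`, and the toy battery numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical constraint function: maps a state to the feasible interval
# [lower, upper] of a single scalar action (here a power set-point in kW).
Bounds = Callable[[float], Tuple[float, float]]


@dataclass
class SafetyLayer:
    """Sits between any RL policy and the environment (illustrative only)."""

    bounds: Bounds
    fallback: Callable[[float], float]  # assumed a-priori safe policy

    def safe_action(self, state: float, proposed: float) -> float:
        lower, upper = self.bounds(state)
        if lower > upper:
            # Empty feasible set: defer to the fallback policy.
            return self.fallback(state)
        # In one dimension, the closest feasible action is a simple clip.
        return min(max(proposed, lower), upper)


def storage_bounds(soc: float) -> Tuple[float, float]:
    """Toy battery constraint with a 1-hour step, so kW and kWh line up."""
    max_power = 5.0   # kW, assumed converter limit
    capacity = 10.0   # kWh, assumed storage size
    return (-min(max_power, soc), min(max_power, capacity - soc))


layer = SafetyLayer(bounds=storage_bounds, fallback=lambda soc: 0.0)

# The RL agent can be swapped freely; only `proposed` changes.
charge_when_nearly_full = layer.safe_action(8.0, 4.0)      # clipped to 2.0
discharge_when_nearly_empty = layer.safe_action(1.0, -3.0)  # clipped to -1.0
```

Because the layer only sees a state and a proposed action, replacing the RL algorithm does not require touching the constraint code, which is the decoupling the abstract emphasizes. A multi-dimensional action space would need a proper optimization step instead of the clip.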

TreeC: a method to generate interpretable energy management systems using a metaheuristic algorithm

Apr 17, 2023

Abstract: Energy management systems (EMS) have classically been implemented using rule-based control (RBC) and model predictive control (MPC) methods. Recent research is investigating reinforcement learning (RL) as a promising new approach. This paper introduces TreeC, a machine learning method that uses the metaheuristic algorithm covariance matrix adaptation evolution strategy (CMA-ES) to generate an interpretable EMS modeled as a decision tree. The method learns the decision strategy of the EMS from historical data, in contrast to RBC and MPC approaches, which are typically considered non-adaptive. Because the decision strategy is modeled as a decision tree, it is interpretable, in contrast to RL, which mainly uses black-box models (e.g. neural networks). TreeC is compared to RBC, MPC and RL strategies in two case studies taken from the literature: (1) an electrical grid case and (2) a household heating case. The results show that TreeC performs close to MPC with perfect forecast in both cases, performs similarly to RL in the electrical grid case, and outperforms RL in the household heating case. TreeC demonstrates a performant application of machine learning for energy management systems that is also fully interpretable.
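
The general idea of tuning a decision-tree controller with a black-box optimizer on historical data can be sketched as follows. This is not the TreeC code: it assumes a depth-1 tree on a single feature (price) and a toy battery, and it swaps CMA-ES for a much simpler seeded random search to stay dependency-free. All names and numbers are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def tree_action(params: Sequence[float], price: float) -> float:
    """Depth-1 tree: one threshold on price, one power set-point per leaf."""
    threshold, power_if_cheap, power_if_expensive = params
    return power_if_cheap if price < threshold else power_if_expensive


def episode_cost(params: Sequence[float], prices: Sequence[float],
                 capacity: float = 2.0, max_power: float = 1.0) -> float:
    """Energy cost of running the tree on a price series with a toy battery."""
    soc, total = 0.0, 0.0
    for price in prices:
        power = tree_action(params, price)
        power = max(-max_power, min(max_power, power))  # converter limit
        power = max(-soc, min(capacity - soc, power))   # storage limit
        soc += power
        total += power * price  # positive power buys, negative power sells
    return total


def random_search(prices: Sequence[float], initial: Sequence[float],
                  iterations: int = 200, sigma: float = 0.5,
                  seed: int = 0) -> Tuple[List[float], float]:
    """Keep-the-best Gaussian perturbation search (a stand-in for CMA-ES)."""
    rng = random.Random(seed)
    best, best_cost = list(initial), episode_cost(initial, prices)
    for _ in range(iterations):
        candidate = [p + rng.gauss(0.0, sigma) for p in best]
        candidate_cost = episode_cost(candidate, prices)
        if candidate_cost < best_cost:
            best, best_cost = candidate, candidate_cost
    return best, best_cost


# Hypothetical historical prices (currency per kWh, 1-hour steps).
prices = [0.10, 0.12, 0.30, 0.35, 0.11, 0.09, 0.32, 0.40]
initial = [0.0, 0.0, 0.0]  # a tree that never charges or discharges
best_params, best_cost = random_search(prices, initial)
```

The learned parameters read directly as a rule: "if the price is below `best_params[0]`, apply `best_params[1]`, otherwise apply `best_params[2]`". For comparison, the hand-written tree `[0.2, 1.0, -1.0]` (charge when cheap, discharge when expensive) gives a cost of about -0.95 on this toy series.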
