Moon Hyun Kim

Compare Where It Matters: Using Layer-Wise Regularization To Improve Federated Learning on Heterogeneous Data

Dec 01, 2021

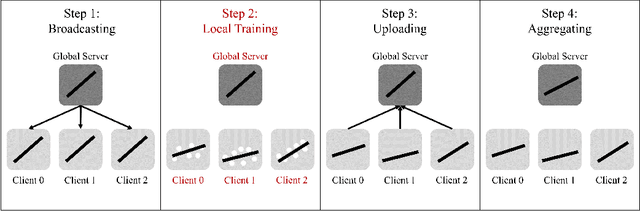

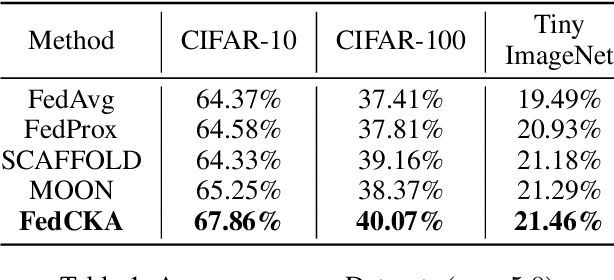

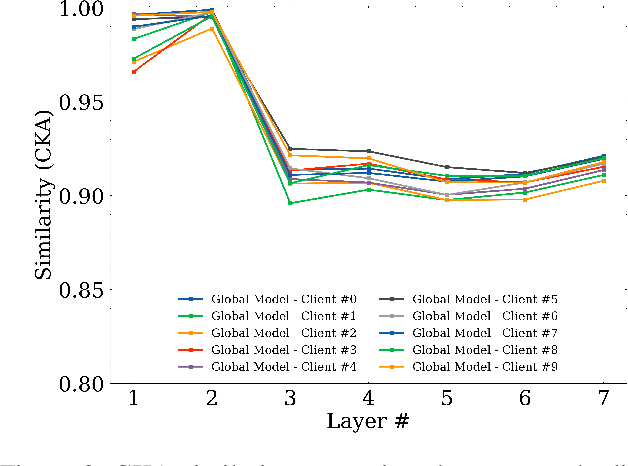

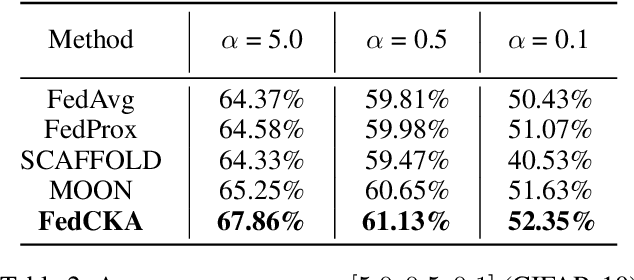

Abstract:Federated Learning is a widely adopted method to train neural networks over distributed data. One main limitation is the performance degradation that occurs when data is heterogeneously distributed. While many works have attempted to address this problem, these methods under-perform because they are founded on a limited understanding of neural networks. In this work, we verify that only certain important layers in a neural network require regularization for effective training. We additionally verify that Centered Kernel Alignment (CKA) most accurately calculates similarity between layers of neural networks trained on different data. By applying CKA-based regularization to important layers during training, we significantly improve performance in heterogeneous settings. We present FedCKA: a simple framework that out-performs previous state-of-the-art methods on various deep learning tasks while also improving efficiency and scalability.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge