Mohsen Soryani

EffNetViTLoRA: An Efficient Hybrid Deep Learning Approach for Alzheimer's Disease Diagnosis

Aug 26, 2025

Abstract: Alzheimer's disease (AD) is one of the most prevalent neurodegenerative disorders worldwide. As it progresses, it leads to the deterioration of cognitive functions. Since AD is irreversible, early diagnosis is crucial for managing its progression. Mild Cognitive Impairment (MCI) represents an intermediate stage between Cognitively Normal (CN) individuals and those with AD, and is considered a transitional phase from normal cognition to Alzheimer's disease. Diagnosing MCI is particularly challenging due to the subtle differences between adjacent diagnostic categories. In this study, we propose EffNetViTLoRA, a generalized end-to-end model for AD diagnosis using the whole Alzheimer's Disease Neuroimaging Initiative (ADNI) Magnetic Resonance Imaging (MRI) dataset. Our model integrates a Convolutional Neural Network (CNN) with a Vision Transformer (ViT) to capture both local and global features from MRI images. Unlike previous studies that rely on limited subsets of data, our approach is trained on the full T1-weighted MRI dataset from ADNI, resulting in a more robust and unbiased model. This comprehensive methodology enhances the model's clinical reliability. Furthermore, fine-tuning large pretrained models often yields suboptimal results when the source and target dataset domains differ. To address this, we incorporate Low-Rank Adaptation (LoRA) to effectively adapt the pretrained ViT model to our target domain. This method enables efficient knowledge transfer and reduces the risk of overfitting. Our model achieves a classification accuracy of 92.52% and an F1-score of 92.76% across three diagnostic categories (AD, MCI, and CN) on the full ADNI dataset.
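
The LoRA idea mentioned in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function name `lora_forward`, the layer sizes, the rank, and the `alpha / r` scaling are assumptions chosen for illustration, following the common LoRA convention of freezing the pretrained weight `W` and training only two small matrices `A` and `B`.

```python
import numpy as np

def lora_forward(x, W, A, B, alpha):
    """Linear layer with a frozen weight W plus a low-rank update.

    Effective weight: W + (alpha / r) * B @ A, where r is the rank.
    All names here are hypothetical and only illustrate the idea.
    """
    r = A.shape[0]
    base = x @ W.T                              # frozen pretrained path
    update = (alpha / r) * (x @ A.T) @ B.T      # trainable low-rank path
    return base + update

rng = np.random.default_rng(0)
d_in, d_out, r = 64, 64, 4                      # assumed sizes for illustration
W = rng.normal(size=(d_out, d_in))              # frozen pretrained weight
A = rng.normal(size=(r, d_in)) * 0.01           # trainable, small random init
B = np.zeros((d_out, r))                        # trainable, zero init
x = rng.normal(size=(2, d_in))

y = lora_forward(x, W, A, B, alpha=8.0)
trainable = A.size + B.size                     # r * (d_in + d_out)
frozen = W.size                                 # d_in * d_out
```

Because `B` starts at zero, the adapted layer initially reproduces the pretrained layer exactly, and only `r * (d_in + d_out)` parameters are trained instead of `d_in * d_out`.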

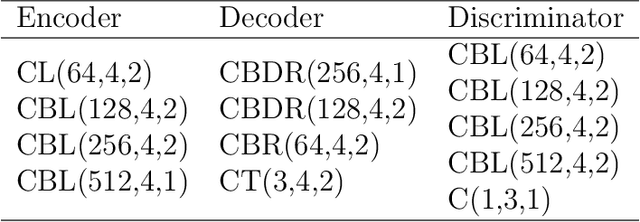

Mass Segmentation in Automated 3-D Breast Ultrasound Using Dual-Path U-net

Sep 29, 2021

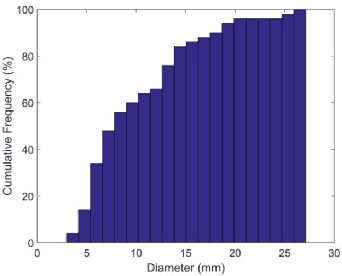

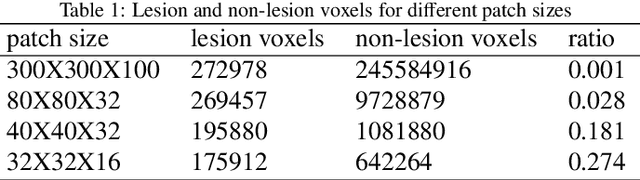

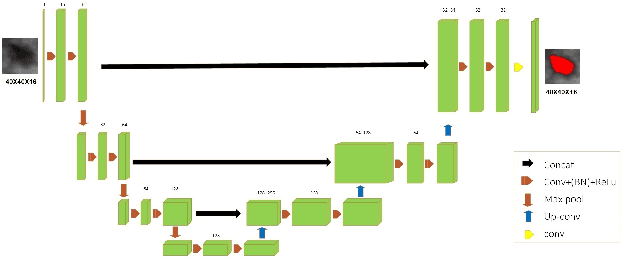

Abstract: Automated 3-D breast ultrasound (ABUS) is a relatively new system for breast screening that has been proposed as a supplementary modality to mammography for breast cancer detection. While ABUS performs better in dense breasts, reading ABUS images is exhausting and time-consuming, so a computer-aided detection system is necessary for interpreting these images. Mass segmentation plays a vital role in computer-aided detection systems and affects their overall performance. It is a challenging task because of the large variety in size, shape, and texture of masses. Moreover, an imbalanced dataset makes segmentation harder. A novel mass segmentation approach based on deep learning is introduced in this paper. The deep network used in this study for image segmentation is inspired by U-net, which has been used broadly for dense segmentation in recent years. The system's performance was determined using a dataset of 50 masses, including 38 malignant and 12 benign lesions. The proposed segmentation method attained a mean Dice of 0.82, outperforming a two-stage supervised edge-based method with a mean Dice of 0.74 and an adaptive region growing method with a mean Dice of 0.65.
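
For readers unfamiliar with the Dice score reported above, the following is a minimal sketch of how it is commonly computed for binary masks. The function name `dice_coefficient` and the convention of returning 1.0 for two empty masks are assumptions; the paper's exact evaluation code is not given here.

```python
import numpy as np

def dice_coefficient(pred, target):
    """Dice = 2 * |P & T| / (|P| + |T|) for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    total = pred.sum() + target.sum()
    if total == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0
    return 2.0 * intersection / total

# Toy example: the prediction covers two voxels, one of which is correct.
pred = np.array([1, 1, 0, 0])
target = np.array([1, 0, 0, 0])
score = dice_coefficient(pred, target)   # 2 * 1 / (2 + 1)
```

A mean Dice, as reported in the abstract, would be the average of this score over all evaluated masses.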

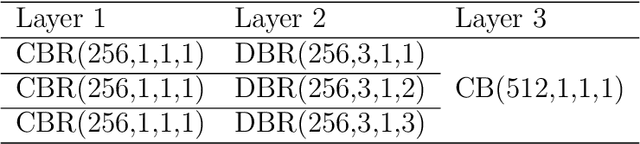

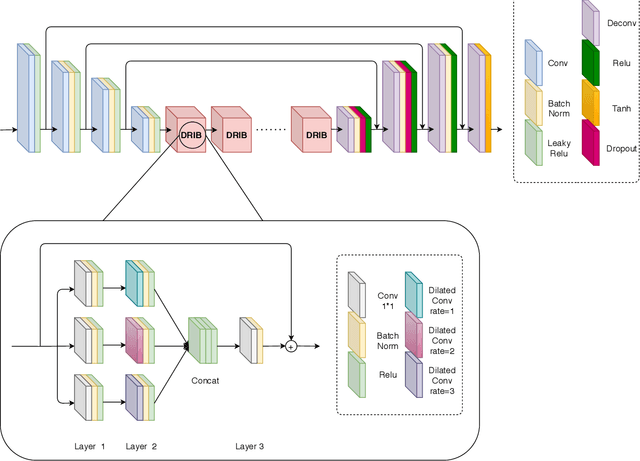

Cloud removal in remote sensing images using generative adversarial networks and SAR-to-optical image translation

Dec 22, 2020

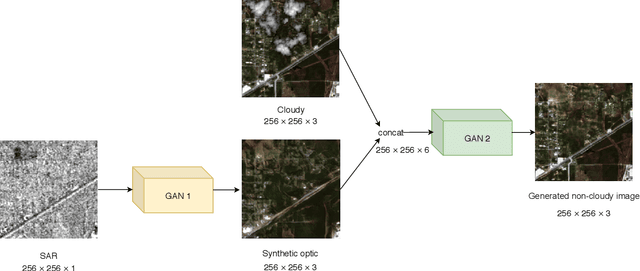

Abstract: Satellite images are often contaminated by clouds. Cloud removal has received much attention due to the wide range of satellite image applications. As the clouds thicken, removing them becomes more challenging. In such cases, it is common to use auxiliary images such as near-infrared or synthetic aperture radar (SAR) for reconstruction. In this study, we attempt to solve the problem using two generative adversarial networks (GANs). The first translates SAR images into optical images, and the second removes clouds using the translated images from the first GAN. We also propose dilated residual inception blocks (DRIBs) instead of vanilla U-net in the generator networks and use the structural similarity index measure (SSIM) in addition to the L1 loss function. Reducing the number of downsamplings and expanding receptive fields by dilated convolutions increase the quality of the output images. We used the SEN1-2 dataset to train and test both GANs, and we made cloudy images by adding synthetic clouds to optical images. The restored images are evaluated with PSNR and SSIM. We compare the proposed method with state-of-the-art deep learning models and achieve more accurate results in both the SAR-to-optical translation and cloud removal parts.
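
As a point of reference for the PSNR metric named above, here is a minimal sketch of the standard definition, PSNR = 10 log10(MAX^2 / MSE). The function name `psnr` and the assumption that pixel values lie in [0, 1] are illustrative choices, not details taken from the paper.

```python
import numpy as np

def psnr(reference, restored, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two images."""
    reference = np.asarray(reference, dtype=np.float64)
    restored = np.asarray(restored, dtype=np.float64)
    mse = np.mean((reference - restored) ** 2)
    if mse == 0:
        return float("inf")        # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)

# Toy example: a uniform error of 0.1 on a [0, 1] image gives MSE = 0.01.
clean = np.zeros((8, 8))
restored = np.full((8, 8), 0.1)
value = psnr(clean, restored)
```

Higher PSNR means the restored image is closer to the cloud-free reference; SSIM complements it by comparing local structure rather than per-pixel error.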

EKFPnP: Extended Kalman Filter for Camera Pose Estimation in a Sequence of Images

Jun 25, 2019

Abstract: In real-world applications, the Perspective-n-Point (PnP) problem generally has to be solved over a sequence of images in which a set of drift-prone features is tracked over time. In this paper, we consider both the temporal dependency of camera poses and the uncertainty of features for sequential camera pose estimation. Using the Extended Kalman Filter (EKF), an a priori estimate of the camera pose is calculated from the camera motion model and then corrected by minimizing the reprojection error of the reference points. Experimental results, using both simulated and real data, demonstrate that the proposed method improves the robustness of camera pose estimation in the presence of noise, compared to the state-of-the-art.
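
The predict-then-correct structure described above can be sketched with a generic EKF step. This is a textbook EKF update, not the EKFPnP formulation itself: the function name `ekf_step`, its argument names, and the use of a standard Kalman gain are assumptions. In a pose-estimation setting, `h` would project the reference points with the predicted pose and the innovation would be the reprojection error.

```python
import numpy as np

def ekf_step(x, P, z, f, F_jac, h, H_jac, Q, R):
    """One generic EKF iteration (hypothetical helper, textbook form).

    x, P  : previous state estimate and covariance
    z     : current measurement
    f, h  : motion and measurement functions
    F_jac, H_jac : functions returning their Jacobians
    Q, R  : process and measurement noise covariances
    """
    # Predict: a priori estimate from the motion model.
    x_pred = f(x)
    F = F_jac(x)
    P_pred = F @ P @ F.T + Q

    # Correct: weight the innovation by the Kalman gain.
    H = H_jac(x_pred)
    innovation = z - h(x_pred)
    S = H @ P_pred @ H.T + R
    K = P_pred @ H.T @ np.linalg.inv(S)
    x_new = x_pred + K @ innovation
    P_new = (np.eye(len(x)) - K @ H) @ P_pred
    return x_new, P_new

# Toy constant-velocity example (linear, so the Jacobians are constant).
F = np.array([[1.0, 1.0], [0.0, 1.0]])
H = np.array([[1.0, 0.0]])
x0, P0 = np.array([0.0, 1.0]), np.eye(2)
x1, P1 = ekf_step(
    x0, P0, np.array([1.0]),
    f=lambda x: F @ x, F_jac=lambda x: F,
    h=lambda x: H @ x, H_jac=lambda x: H,
    Q=0.01 * np.eye(2), R=np.array([[0.1]]),
)
```

In the toy run the measurement agrees with the prediction, so the state stays at the predicted value while the covariance shrinks.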
