Mohan Wu

Quantum Long Short-Term Memory for Drug Discovery

Jul 29, 2024

Abstract: Quantum computing combined with machine learning (ML) is an extremely promising research area, with numerous studies indicating that quantum machine learning (QML) is expected to solve scientific problems more effectively than classical ML. In this work, we apply QML to drug discovery, showing that QML can significantly improve model performance and achieve faster convergence compared to classical ML. Moreover, we demonstrate that the accuracy of the QML model improves as the number of qubits increases. We also introduce noise to the QML model and find that it has little effect on our experimental conclusions, illustrating the high robustness of the QML model. This work highlights the potential of quantum computing to yield significant benefits for scientific advancement as qubit quantity increases and qubit quality improves in the future.

Data-Adaptive Probabilistic Likelihood Approximation for Ordinary Differential Equations

Jun 08, 2023

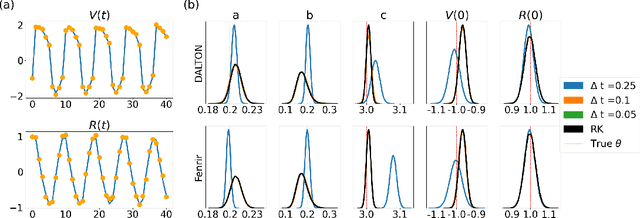

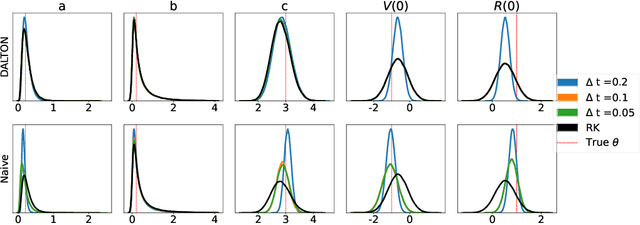

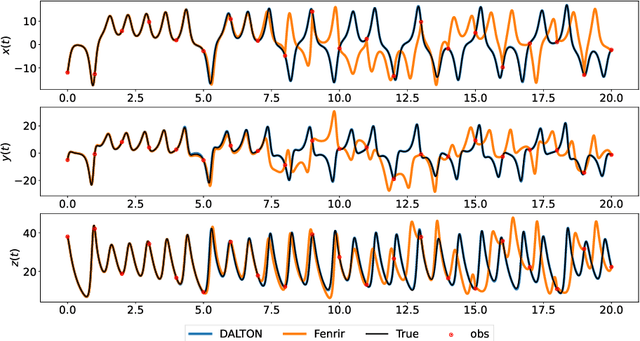

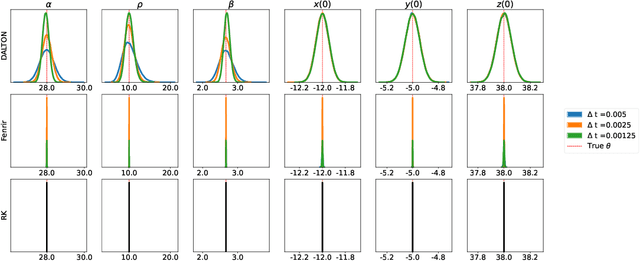

Abstract: Parameter inference for ordinary differential equations (ODEs) is of fundamental importance in many scientific applications. While ODE solutions are typically approximated by deterministic algorithms, new research on probabilistic solvers indicates that they produce more reliable parameter estimates by better accounting for numerical errors. However, many ODE systems are highly sensitive to their parameter values. This produces deep local minima in the likelihood function, a problem which existing probabilistic solvers have yet to resolve. Here, we show that a Bayesian filtering paradigm for probabilistic ODE solution can dramatically reduce sensitivity to parameters by learning from the noisy ODE observations in a data-adaptive manner. Our method is applicable to ODEs with partially unobserved components and with arbitrary non-Gaussian noise. Several examples demonstrate that it is more accurate than existing probabilistic ODE solvers, and in some cases even more accurate than the exact ODE likelihood.

Reinforcement Learning in Computing and Network Convergence Orchestration

Sep 22, 2022

Abstract: As computing power becomes the core productivity of the digital economy era, the concept of Computing and Network Convergence (CNC), under which network and computing resources can be dynamically scheduled and allocated according to users' needs, has been proposed and has attracted wide attention. Based on the tasks' properties, the network orchestration plane needs to flexibly deploy tasks to appropriate computing nodes and arrange paths to those nodes. This is an orchestration problem that involves resource scheduling and path arrangement. Since CNC is relatively new, in this paper we first review existing research and applications on CNC. Then, we design a CNC orchestration method using reinforcement learning (RL), which is the first such attempt and can flexibly allocate and schedule computing and network resources, aiming at high profit and low latency. Meanwhile, we use multiple factors to determine the optimization objective so that the orchestration strategy is optimized in terms of total performance from different aspects, such as cost, profit, latency and system overload in our experiment. The experiments show that the proposed RL-based method can achieve higher profit and lower latency than the greedy method, random selection and the balanced-resource method. We demonstrate that RL is suitable for CNC orchestration. This paper sheds light on the application of RL to CNC orchestration.
