
Mohammad-Javad Davari

Identifying Multiple Interaction Events from Tactile Data during Robot-Human Object Transfer

Sep 15, 2019

Figures and Tables:

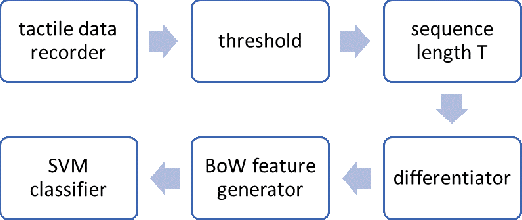

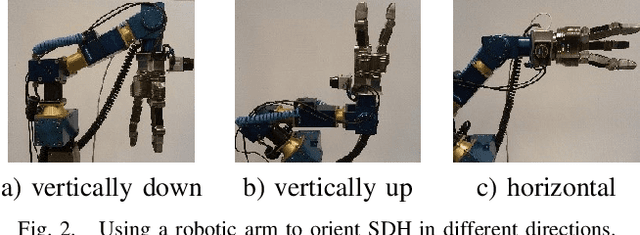

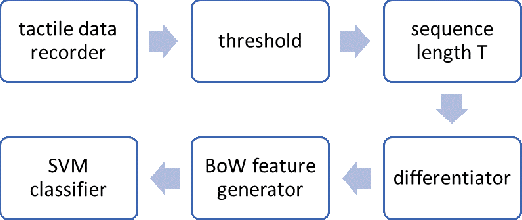

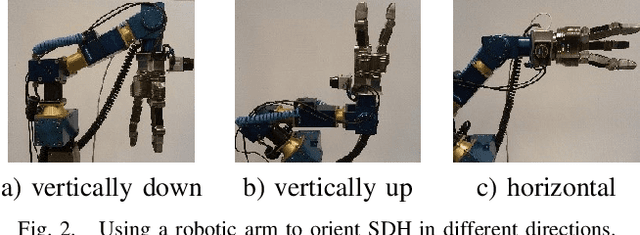

Abstract: During a robot-to-human object handover task, several intended or unintended events may occur: the human receiver may pull, push, bump, or simply hold the object. We show that these events can be differentiated using tactile sensors alone. Training data from tactile sensors were recorded while human subjects interacted with an object held by a 3-finger robotic hand. A Bag of Words approach was used to automatically extract effective features from the tactile data. A Support Vector Machine was used to distinguish between the four events with over 95 percent average accuracy.
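To illustrate the Bag of Words feature-extraction step the abstract mentions, here is a minimal sketch. It is not the authors' implementation. The abstract does not say how the tactile data is represented, so the 1-D signals, the window width, the step size, the codebook size `k`, and the plain k-means clustering are all assumptions made for illustration. The idea is to cut each recording into short windows, cluster the windows into a small codebook of "words", and describe each recording by how often each word occurs.

```python
import random

def sliding_windows(signal, width, step):
    """Cut a 1-D signal into overlapping windows (the local descriptors)."""
    return [signal[i:i + width] for i in range(0, len(signal) - width + 1, step)]

def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length windows."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest(codebook, window):
    """Index of the codeword closest to the given window."""
    return min(range(len(codebook)), key=lambda j: sq_dist(codebook[j], window))

def build_codebook(windows, k, iters=20, seed=0):
    """Plain k-means (Lloyd's algorithm) over windows; returns k codewords."""
    rng = random.Random(seed)
    centers = [list(w) for w in rng.sample(windows, k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for w in windows:
            groups[nearest(centers, w)].append(w)
        for j, group in enumerate(groups):
            if group:  # keep the old center if a cluster becomes empty
                centers[j] = [sum(col) / len(group) for col in zip(*group)]
    return centers

def bow_histogram(signal, codebook, width, step):
    """Normalized histogram of codeword occurrences for one recording."""
    windows = sliding_windows(signal, width, step)
    counts = [0] * len(codebook)
    for w in windows:
        counts[nearest(codebook, w)] += 1
    return [c / len(windows) for c in counts]

# Synthetic stand-ins for two event types (hypothetical data, not from the paper).
rng = random.Random(1)
pull = [0.1 * t + rng.gauss(0, 0.05) for t in range(40)]          # steady ramp
bump = [(1.0 if 18 <= t < 22 else 0.0) + rng.gauss(0, 0.05) for t in range(40)]  # short spike

WIDTH, STEP, K = 8, 4, 4  # assumed values, chosen only for this example
all_windows = sliding_windows(pull, WIDTH, STEP) + sliding_windows(bump, WIDTH, STEP)
codebook = build_codebook(all_windows, K)

h_pull = bow_histogram(pull, codebook, WIDTH, STEP)
h_bump = bow_histogram(bump, codebook, WIDTH, STEP)
print(h_pull)
print(h_bump)
```

Each recording becomes a fixed-length vector regardless of its duration, which is what makes the histograms suitable as inputs to a classifier such as the Support Vector Machine named in the abstract. The classification step itself is omitted here.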

* 7 pages, accepted for 2019 IEEE ROMAN
