Mohammad Zubair Khan

Dynamic Logistic Ensembles with Recursive Probability and Automatic Subset Splitting for Enhanced Binary Classification

Nov 27, 2024

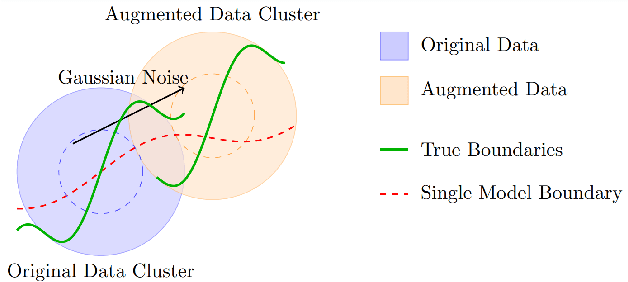

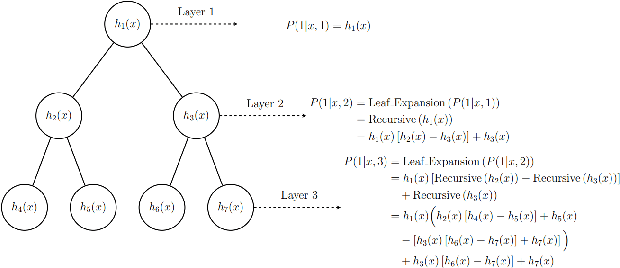

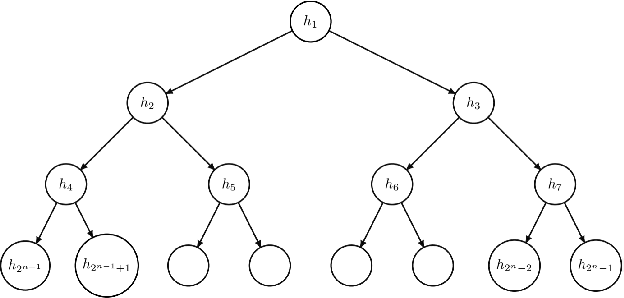

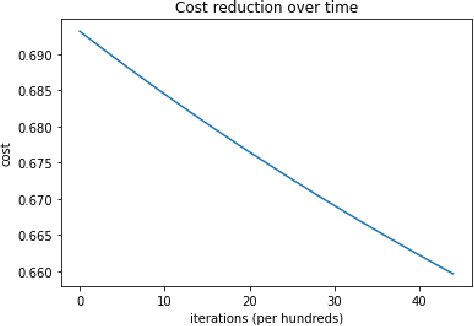

Abstract: This paper presents a novel approach to binary classification using dynamic logistic ensemble models. The proposed method addresses the challenges posed by datasets containing inherent internal clusters that lack explicit feature-based separations. By extending traditional logistic regression, we develop an algorithm that automatically partitions the dataset into multiple subsets and constructs an ensemble of logistic models to enhance classification accuracy. A key innovation in this work is the recursive probability calculation, derived through algebraic manipulation and mathematical induction, which enables scalable and efficient model construction. Compared to traditional ensemble methods such as Bagging and Boosting, our approach maintains interpretability while offering competitive performance. Furthermore, we systematically employ maximum likelihood and cost functions to facilitate the analytical derivation of recursive gradients as functions of ensemble depth. The effectiveness of the proposed approach is validated on a custom dataset created by introducing noise and shifting data to simulate group structures, with performance improving significantly as ensemble layers are added. Implemented in Python, this work balances computational efficiency with theoretical rigor, providing a robust and interpretable solution for complex classification tasks with broad implications for machine learning applications. Code at https://github.com/ensemble-art/Dynamic-Logistic-Ensembles
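The abstract does not spell out the recursion, so the following is only a minimal sketch of one way a recursive probability over a tree of logistic models could be computed. It assumes that each internal node is a logistic "gate" that softly routes a sample to its left or right child, and that each leaf is an ordinary logistic classifier. The tree layout, the dictionary keys, and the function names (`sigmoid`, `linear`, `ensemble_prob`) are hypothetical and are not taken from the paper or its repository.

```python
import math

def sigmoid(z):
    """Standard logistic function."""
    return 1.0 / (1.0 + math.exp(-z))

def linear(w, b, x):
    """Affine score b + w . x for plain Python lists."""
    return b + sum(wi * xi for wi, xi in zip(w, x))

def ensemble_prob(node, x):
    """Recursively compute P(y = 1 | x) for a binary tree of logistic models.

    Assumed (hypothetical) layout: every node is a dict with weights "w"
    and bias "b". An internal node also has "left" and "right" children
    and acts as a soft gate; a leaf has no children and acts as a classifier.
    """
    p = sigmoid(linear(node["w"], node["b"], x))
    if "left" not in node:
        return p  # leaf: plain logistic regression output
    # Internal node: mix the children's probabilities by the gate value.
    return p * ensemble_prob(node["left"], x) + (1.0 - p) * ensemble_prob(node["right"], x)

# A depth-1 tree: one gate and two leaf classifiers.
tree = {
    "w": [1.0, 0.0], "b": 0.0,
    "left": {"w": [0.0, 2.0], "b": -1.0},
    "right": {"w": [0.0, -2.0], "b": 1.0},
}
prob = ensemble_prob(tree, [0.5, 1.0])
```

Because the output is a convex combination of leaf probabilities, it always stays in [0, 1], and adding a layer only means nesting another level of dictionaries.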

Multiclass Model for Agriculture development using Multivariate Statistical method

Oct 07, 2020

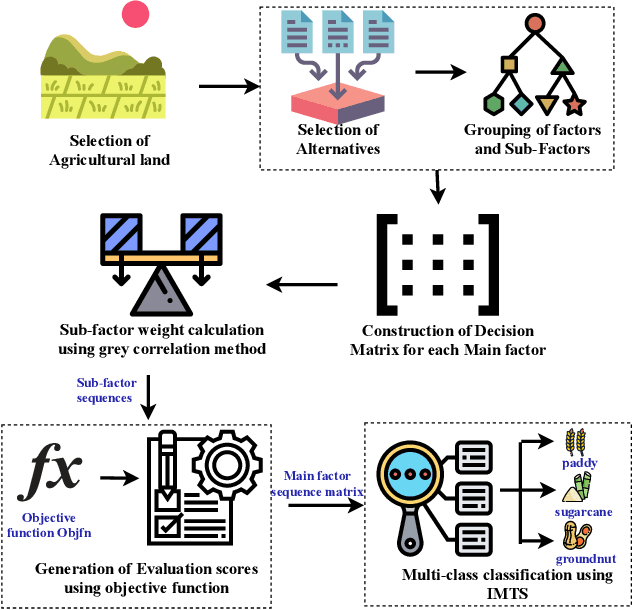

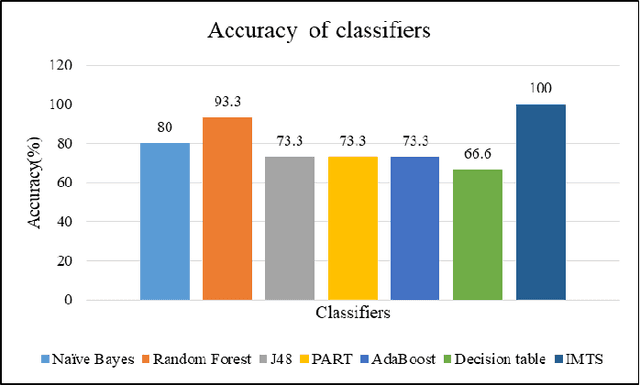

Abstract: The Mahalanobis Taguchi System (MTS) is a multivariate statistical method extensively used for feature selection and binary classification problems. The calculation of the orthogonal array and signal-to-noise ratio in MTS makes the algorithm complicated when a large number of factors are involved in the classification problem. In addition, the decision is based on the accuracy of normal and abnormal observations of the dataset. In this paper, a multiclass model using the Improved Mahalanobis Taguchi System (IMTS) is proposed for agriculture development, based on normal observations and the Mahalanobis distance. Twenty-six input factors relevant to crop cultivation have been identified and clustered into six main factors for the development of the model. The multiclass model is developed with consideration of the relative importance of the factors. An objective function is defined for the classification of three crops, namely paddy, sugarcane and groundnut. The classification results are verified against the results obtained from agriculture experts working in the field. The proposed classifier achieves 100% accuracy, recall and precision, with a 0% error rate, in comparison with other traditional classifier models.
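For readers unfamiliar with the Mahalanobis distance that the method builds on, here is a small illustrative sketch. It is not the IMTS procedure from the paper: it only shows the distance of a point from a reference group of "normal" observations, restricted to two features so that the covariance matrix can be inverted by hand. The function names and the sample data are hypothetical.

```python
import math

def mean_and_cov_2d(points):
    """Sample mean and covariance (n - 1 denominator) of 2-D points."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    return (mx, my), (sxx, sxy, syy)

def mahalanobis_2d(x, mean, cov):
    """Mahalanobis distance of x from a group with the given mean and covariance."""
    sxx, sxy, syy = cov
    det = sxx * syy - sxy * sxy
    if det <= 0:
        raise ValueError("covariance matrix must be positive definite")
    dx, dy = x[0] - mean[0], x[1] - mean[1]
    # Inverse of [[sxx, sxy], [sxy, syy]] is (1 / det) * [[syy, -sxy], [-sxy, sxx]].
    d2 = (syy * dx * dx - 2.0 * sxy * dx * dy + sxx * dy * dy) / det
    return math.sqrt(d2)

# Hypothetical "normal" reference observations for one class.
normal_group = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0), (2.0, 2.0)]
mean, cov = mean_and_cov_2d(normal_group)
distance = mahalanobis_2d((3.0, 1.0), mean, cov)
```

One plausible multiclass use, stated here as an assumption, is to build one reference group per class and assign a new observation to the class whose group gives the smallest distance.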
