Mohammad M Ghassemi

Feature Imitating Networks

Oct 23, 2021

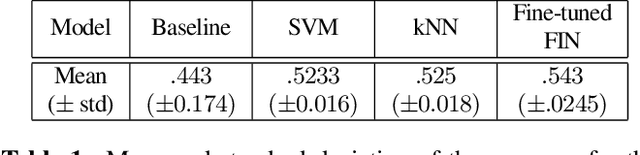

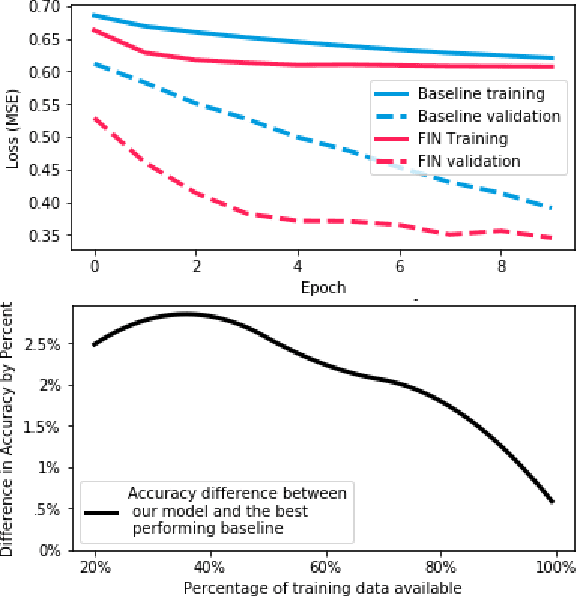

Abstract: In this paper, we introduce a novel approach to neural learning: the Feature-Imitating-Network (FIN). A FIN is a neural network whose weights are initialized to reliably approximate one or more closed-form statistical features, such as Shannon's entropy. We demonstrate that FINs (and FIN ensembles) provide best-in-class performance on a variety of downstream signal processing and inference tasks, while using less data and requiring less fine-tuning than other networks of similar (or even greater) representational power. We conclude that FINs can help bridge the gap between domain experts and machine learning practitioners by enabling researchers to harness insights from feature engineering to enhance the performance of contemporary representation learning approaches.
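As a rough illustration of the idea, the sketch below pre-trains a tiny network to imitate Shannon's entropy on synthetic probability vectors. The abstract does not specify an architecture, training data, or optimizer, so everything here is an assumption made for illustration: the one-hidden-layer tanh network, the Dirichlet-sampled inputs, full-batch gradient descent, and all names (`shannon_entropy`, `pretrain_fin`, and so on) are hypothetical and not taken from the paper.

```python
import numpy as np

def shannon_entropy(p, eps=1e-12):
    """Closed-form Shannon entropy (in bits) of each row of p."""
    p = np.clip(p, eps, 1.0)
    return -(p * np.log2(p)).sum(axis=1)

def init_params(rng, n_in, n_hidden):
    # Small random weights; this architecture is an assumption, not the paper's.
    return {
        "W1": rng.normal(0.0, np.sqrt(1.0 / n_in), (n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, np.sqrt(1.0 / n_hidden), (n_hidden, 1)),
        "b2": np.zeros(1),
    }

def forward(params, x):
    h = np.tanh(x @ params["W1"] + params["b1"])
    y = (h @ params["W2"] + params["b2"]).ravel()
    return y, h

def mse(params, x, t):
    y, _ = forward(params, x)
    return float(np.mean((y - t) ** 2))

def pretrain_fin(params, x, t, lr=0.01, steps=2000):
    """Fit the network to the closed-form feature with full-batch gradient descent."""
    n = x.shape[0]
    for _ in range(steps):
        y, h = forward(params, x)
        dy = 2.0 * (y - t)[:, None] / n           # gradient of MSE w.r.t. the output
        dW2 = h.T @ dy
        db2 = dy.sum(axis=0)
        dz = (dy @ params["W2"].T) * (1.0 - h ** 2)  # backprop through tanh
        dW1 = x.T @ dz
        db1 = dz.sum(axis=0)
        params["W1"] -= lr * dW1
        params["b1"] -= lr * db1
        params["W2"] -= lr * dW2
        params["b2"] -= lr * db2
    return params

# Synthetic inputs: random probability vectors over 8 outcomes (an assumption).
rng = np.random.default_rng(0)
x = rng.dirichlet(np.ones(8), size=512)
t = shannon_entropy(x)                            # imitation target

params = init_params(rng, n_in=8, n_hidden=32)
loss_before = mse(params, x, t)
params = pretrain_fin(params, x, t)
loss_after = mse(params, x, t)
print(f"imitation MSE before: {loss_before:.4f}, after: {loss_after:.4f}")
```

In a FIN-style workflow, the weights in `params` would then serve as the initialization for a layer or sub-network inside a larger downstream model and be fine-tuned on the task data, rather than starting from random weights.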
