Mohamed Elhelw

Rice Plant Disease Detection and Diagnosis using Deep Convolutional Neural Networks and Multispectral Imaging

Sep 11, 2023

Abstract: Rice is considered a strategic crop in Egypt as it is regularly consumed in the Egyptian people's diet. Even though Egypt is the highest rice producer in Africa with a share of 6 million tons per year, it still imports rice to satisfy its local needs due to production loss, especially due to rice disease. Rice blast disease is responsible for 30% loss in rice production worldwide. Therefore, it is crucial to limit yield damage by detecting rice crop diseases in their early stages. This paper introduces a public dataset of multispectral and RGB images and a deep learning pipeline for rice plant disease detection using multi-modal data. The collected multispectral images consist of Red, Green and Near-Infrared channels, and we show that using multispectral along with RGB channels as input achieves a higher F1 score compared to using RGB input only.

Robust Real-Time Pedestrian Detection on Embedded Devices

Dec 13, 2020

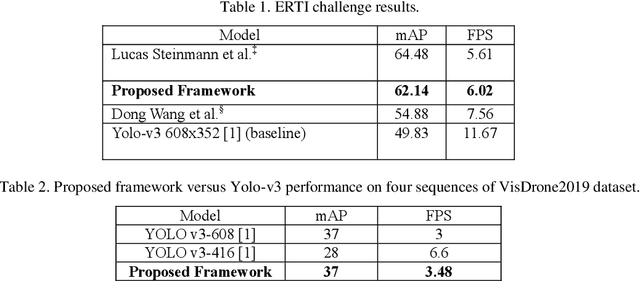

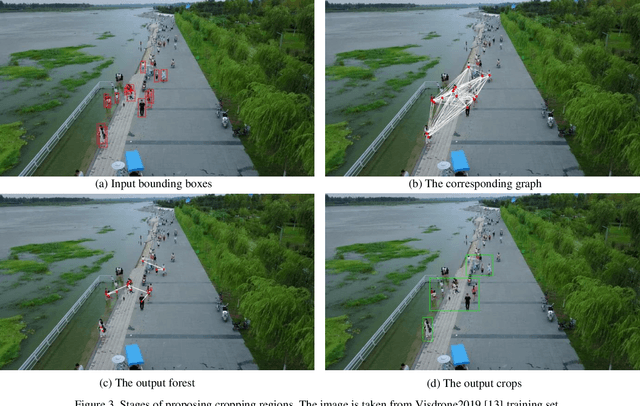

Abstract: Detection of pedestrians on embedded devices, such as those on board robots and drones, has many applications including road intersection monitoring, security, crowd monitoring and surveillance, to name a few. However, the problem can be challenging due to continuously changing camera viewpoints and varying object appearances, as well as the need for lightweight algorithms suitable for embedded systems. This paper proposes a robust framework for pedestrian detection across a wide variety of footage. The framework performs fine and coarse detections on different image regions and exploits temporal and spatial characteristics to attain enhanced accuracy and real-time performance on embedded boards. The framework uses the YOLOv3 object detector [1] as its backbone detector and runs on the Nvidia Jetson TX2 embedded board; however, other detectors and/or boards can be used as well. The performance of the framework is demonstrated on two established datasets and by its second-place finish in the CVPR 2019 Embedded Real-Time Inference (ERTI) Challenge.

Multi Projection Fusion for Real-time Semantic Segmentation of 3D LiDAR Point Clouds

Nov 06, 2020

Abstract: Semantic segmentation of 3D point cloud data is essential for enhanced high-level perception in autonomous platforms. Furthermore, given the increasing deployment of LiDAR sensors on board cars and drones, a special emphasis is also placed on computationally lightweight algorithms that operate on mobile GPUs. Previous efficient state-of-the-art methods relied on 2D spherical projection of point clouds as input for 2D fully convolutional neural networks to balance the accuracy-speed trade-off. This paper introduces a novel approach for 3D point cloud semantic segmentation that exploits multiple projections of the point cloud to mitigate the loss of information inherent in single-projection methods. Our Multi-Projection Fusion (MPF) framework analyzes spherical and bird's-eye view projections using two separate highly efficient 2D fully convolutional models, then combines the segmentation results of both views. The proposed framework is validated on the SemanticKITTI dataset, where it achieved an mIoU of 55.5, which is higher than the state-of-the-art projection-based methods RangeNet++ and PolarNet, while being 1.6x faster than the former and 3.1x faster than the latter.
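To make the two views mentioned in the abstract concrete, the following is a minimal sketch of how a single LiDAR point could be mapped to a pixel in a spherical (range-image) projection and to a cell in a bird's-eye view grid. It is not the paper's implementation: the function names `spherical_pixel` and `bev_cell`, the image size, the vertical field of view, and the Cartesian grid layout are all assumptions chosen for illustration.

```python
import math


def spherical_pixel(x, y, z, width=2048, height=64,
                    fov_up_deg=3.0, fov_down_deg=-25.0):
    """Map one 3D point to (row, col) in a range image.

    All default parameters are illustrative assumptions, not values
    taken from the paper.
    """
    depth = math.sqrt(x * x + y * y + z * z)
    if depth == 0.0:
        return None  # the sensor origin has no defined direction

    yaw = math.atan2(y, x)        # azimuth angle in [-pi, pi]
    pitch = math.asin(z / depth)  # elevation angle

    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    fov = fov_up - fov_down

    # Normalize the angles to image coordinates.
    u = 0.5 * (1.0 - yaw / math.pi) * width
    v = (1.0 - (pitch - fov_down) / fov) * height

    # Clamp to valid pixel indices.
    col = min(width - 1, max(0, int(math.floor(u))))
    row = min(height - 1, max(0, int(math.floor(v))))
    return row, col


def bev_cell(x, y, cell_size=0.5, extent=50.0):
    """Map one point to (row, col) in a top-down Cartesian grid.

    The grid covers [-extent, extent) metres on both axes (assumed).
    Returns None for points outside the grid.
    """
    if abs(x) >= extent or abs(y) >= extent:
        return None
    row = int((x + extent) / cell_size)
    col = int((y + extent) / cell_size)
    return row, col


if __name__ == "__main__":
    point = (10.0, 0.0, 0.0)  # 10 m straight ahead of the sensor
    print(spherical_pixel(*point))
    print(bev_cell(point[0], point[1]))
```

Because each projection discards different information (the range image loses points that fall on the same pixel, while the top-down grid loses height detail), a point that is ambiguous in one view may still be well separated in the other, which is the motivation the abstract gives for fusing both.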
